On Cracking

Some time ago I cracked my first Mac app. Overall it was a nice experience and reminded me of good old times. Here are some comments about it:

- it was the first commercial app (not counting MATLAB, which I use for work) that I actually found useful after a year on the Mac. I think that is good, because it means open-source software still satisfies most of my needs (or is it bad, because it means I am becoming a lazy hipster now?)

- the tutorial by fG! has been invaluable to me, especially for quickly finding the tools of the trade. I recommend it to anyone who wants to start reversing on the Mac

- I am not half bad, after all: I managed to do that with the trial version of Hopper, so only deadlisting + time limit, and that added some spice to the game (I know everyone is thinking about Swordfish now... but no, there was no bj in the meanwhile ;-))

- cracking is still pure fun, especially when you find that the protection is hidden in functions with names purposely chosen to mislead you (no, I won't tell more details, see below)

- I immediately bought the app: it was cheaper than going to the cinema, cracking it was more entertaining than the average blockbuster movie, and I am left with a great program to use... That's what I call a bargain!

I still do not agree with Daniel Jalkut, the developer of MarsEdit: I think he wasted time on a trivial protection to sell some closed-source code he should have shared freely (as in freedom). But don't misunderstand me... Who am I to judge what somebody should or should not do? The only reason I say this is that MarsEdit is a cool program (which, by the way, I am using right now) and, while it is worth all the money I paid, not being able to see it open-sourced is a real pity. But I respect Daniel's view, and I think his work deserves to be supported.

I know not all of you see it this way, and years ago I might well have seen it differently too. One thing, however, has never changed: cracking/reversing is so much more than getting software for free, and if you stop there you are missing most of the fun ;-)

Gopher is dead, long live gopher

Some months ago, while preparing a lesson for my Internet Technology class, I was doing some research on old protocols to give my students a feeling for how the Internet used to work some years ago, and how it is much, much more than just the Web. During this research I found some interesting information about good old Gopher and how to run it from Android devices... Hey, wait, did I say Android devices? Yep! The Overbite project aims at bringing Gopher to the most recent devices and browsers. As Firefox & co. have dropped Gopher support in their latest versions (shame on you), the people behind the Overbite project have also decided to develop a browser extension to let you keep browsing it (well, if you ever did before ;)).

What struck me is not the piece of news per se, but the fact that there was a community (a very small one, I thought) still interested in letting people access the gopherspace... To find what? So I spent some time (probably not enough, but still more than I planned) browsing it myself and checking what is available right now...

What I found is that the gopherspace, or at least the small part of it I was able to read in that limited time, is surprisingly up to date: there are news feeds, weather forecasts, reddit, xkcd, and even twitter (here called twitpher :)). Of course, there are also those files I used to find 15 years ago when browsing the early web from a text terminal with lynx: guides for n00bs about the Internet, hacking tutorials, ebooks I did not know about (the one I like most is Albert Einstein's theory of relativity in words of four letters or less, which you can also find here in HTML). Moreover, there are still people willing to use Gopher as a means to share their stuff or to provide useful services for other users: FTP servers (built on a cluster of PlayStation 3 consoles... awesome!) with collections of rare operating systems, LUG servers with mirrors of all the main Linux distros, pages distributing podcasts and blogs (well, phlogs!). Ah, and for all those who don't want to install anything to access Gopher, there's always the GopherProxy service, which can be accessed from any browser.

After seeing all of this, one word came to my mind: WHY? Don't misunderstand me: I think all of this is really cool and an interesting phenomenon to follow, and I really love seeing all these people still having incentives to use this old technology. And it is great to see that the incentives, in this case, are not the usual ones you might find in a participatory system. I mean, what's one of the main incentives for contributing to Wikipedia? Well, the fact that lots of people will read what you have written (if you don't agree, think about how many people would create new articles in a wiki that is not already as famous as Wikipedia). And how many readers is a page from the gopherspace going to have? Well, probably not as many as any popular site you can find around the Web. But Gopher, relying mainly on text files, has a very light protocol which is superfast (and cheap!) on a mobile phone. It has no ads. It adds no fuss to the real, interesting content you want to convey to your readers. And, in the words of lostnbronx from Information Underground:

"... I tell you, there's something very liberating about not having to worry over "themes" or "Web formatting" or whatever. When you use gopher, you drop your files onto the server, maybe add a notation to a gophermap if you're using one (which is purely optional), and...that's it. No muss, no fuss, no dicking around with CMS, CSS, stylesheets, or even HTML. Unless you want to. Which I don't. It defeats the purpose, see?"

Aaahh... it has been so long since I last heard such wise words... It is like going back to my good old 356* and listening to its +players! Let me tell you this: I like these ideas, and I am so happy to see that this new old Gopher still looks so far from being trendy... because this means that a lot of time will need to pass before commercial idiots start polluting it! In the meantime, it will be nice to have a place where information can be exchanged in a simple and inexpensive way. Maybe we in the richest part of the world do not realize it, but there are still many places where older but effective technologies are widely used (some examples? Check this one about Nokia's most popular phone, and read why we still have USENET), and if something like Gopher could be a solution there, well... long live Gopher :-)
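For readers who never met it, here is roughly what "a very light protocol" means in practice. Under RFC 1436, a client opens a TCP connection (usually to port 70), sends a selector string followed by CRLF, and gets back either a file or a menu made of tab-separated lines. The following JavaScript sketch only builds a request and parses a single menu line; networking is left out, and the sample line and host name are made up.

```javascript
// Minimal sketch of the Gopher wire format (RFC 1436); no networking here.
// A request is just a selector string followed by CRLF: no headers, no cookies.
function gopherRequest(selector) {
  return selector + "\r\n";
}

// A menu line is: <type char><display string> TAB <selector> TAB <host> TAB <port>
// (the trailing CRLF is assumed to have been stripped already).
function parseGopherMenuLine(line) {
  const fields = line.split("\t");
  if (fields.length < 4 || fields[0].length === 0) {
    return null; // not a well-formed menu item
  }
  return {
    type: fields[0][0],            // e.g. "0" = text file, "1" = submenu
    display: fields[0].slice(1),   // the text shown to the user
    selector: fields[1],           // what the client sends back to fetch the item
    host: fields[2],
    port: parseInt(fields[3], 10), // 70 is the usual Gopher port
  };
}

// Made-up menu line, for illustration only.
const item = parseGopherMenuLine("0About this server\t/about.txt\tgopher.example.org\t70");
console.log(item.type, item.display, JSON.stringify(gopherRequest(item.selector)));
```

That is the whole protocol a simple client needs: everything else is plain text.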

WOEID to Wikipedia reconciliation

For a project we are developing at PoliMI/USI, we are using Yahoo! APIs to get data about a city (photos, and the tags associated with these photos). We thought it would be nice to provide, along with this information, a link to or an excerpt from the Wikipedia page for that specific city. However, we found that matching Yahoo!'s WOEIDs to Wikipedia articles is far from trivial...

First of all, just two words on WOEIDs: they are unique 32-bit identifiers used within Yahoo! GeoPlanet to refer to all geo-permanent named places on Earth. WOEIDs can refer to places of different sizes, from towns to countries or even continents (e.g. Europe is 24865675). A more in-depth explanation can be found in the Key Concepts page of the GeoPlanet documentation, and an interesting introductory blog post with examples to play with is available here. Note, however, that you now need a valid Yahoo! application id to test these APIs (which means you should register with the Yahoo! Developer Network and then get a new appid by creating a new project).

One cool aspect of WOEIDs (as of other geographical ids, such as GeoNames' ones) is that you can use them to disambiguate the name of the city you are referring to: for instance, you have Milan and you want to make sure you are referring to Milano, Italy and not to the city of Milan, Michigan. The two cities have two different WOEIDs, so when you use one of them you know exactly which of the two you are talking about. Something similar happens when you search for Milan (or any other ambiguous city name) on Wikipedia: most of the time you will be automatically redirected to the most popular article, but you can always look for its disambiguation page (here is the one for Milan) and choose among the articles listed in it.

Of course, the whole idea of having standard, global, unique identifiers for things in the real world is a great one per se, and being able to use them for disambiguation is only one aspect of it. While disambiguation can often (but not always!) be easy at the human level, where the context and background of the people communicating help them understand which entity a particular name refers to, this does not hold for machines. Unique identifiers save machines from the need to disambiguate, but they also allow them to easily link data between different sources, provided all the sources use the same identification standard. And linking data, that is, making connections between things that were not connected before, is a first form of inference: a very simple but also very useful one, which allows us to derive new knowledge from what we originally had. Thus, what makes these unique identifiers really useful is not only the fact that they are unique. Uniqueness allows for disambiguation, but it is not sufficient to link a data source to others. To do that, identifiers also need to be shared between different systems and knowledge repositories: the more the same id is used across knowledge bases, the easier it is to make connections between them.

What happens, instead, when two systems use different ids? Well, unless somebody decides to map the ids between the two systems, there is little chance of getting anything useful out of them. This is why reconciling objects across different systems is so useful: once you state that their two ids are equivalent, you can make all the connections you could make if the objects shared the same id. This is the main reason why, as I wrote at the beginning of this post, matching the WOEIDs of cities with their Wikipedia pages would be nice.
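A toy illustration of this point, as a JavaScript sketch: once a concordance table states that a WOEID and a Wikipedia article name are equivalent, records keyed by either id can be joined. Only Europe's WOEID (24865675) comes from this post; the tag and excerpt data are invented, and the function name is hypothetical.

```javascript
// Concordance table: each entry states that a WOEID and a Wikipedia article
// name refer to the same place. Only the Europe WOEID comes from the post.
const woeidToWikipedia = new Map([
  [24865675, "Europe"],
]);

// Two made-up data sources, each keyed by its own kind of identifier.
const photoTagsByWoeid = new Map([[24865675, ["travel", "map"]]]);
const excerptsByArticle = new Map([["Europe", "Europe is a continent..."]]);

// Hypothetical helper: link both sources through the concordance.
function joinOnConcordance(woeid) {
  const article = woeidToWikipedia.get(woeid);
  if (article === undefined) {
    return null; // no equivalence stated: the two sources cannot be linked
  }
  return {
    woeid,
    article,
    tags: photoTagsByWoeid.get(woeid) || [],
    excerpt: excerptsByArticle.get(article) || null,
  };
}

console.log(joinOnConcordance(24865675));
```

Without the concordance entry the join returns nothing, which is exactly the situation described above.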

Wikipedia articles are already disambiguated (except, of course, for disambiguation pages), and their names can be used as unique identifiers. For instance, DBPedia uses article names as part of its URIs (see, for instance, the information about the entities Milan and Milan (disambiguation)). However, what we found is that there is no trivial way to match Wikipedia articles with WOEIDs: despite what others say on the Web, we found no 100% working solution. Actually, even the solutions that do return something are pretty far from 100%: Wikilocation works fine with monuments or geographical features but not with large cities, while the Yahoo! APIs themselves provide a direct concordance with Wikipedia pages, but according to the documentation this is limited to airports and towns within the US.

The solution to this problem is a mashup approach: feeding the information returned by a WOEID-based Yahoo! query to another data source capable of dealing with Wikipedia pages. My first experiment was to query DBPedia for articles about Places with the same name and a geolocation contained in the bounding box returned by Yahoo!. The script I built is available here (remember: to make it work, you need to edit it and enter a valid Yahoo! appid), and it performs the following SPARQL query on DBPedia:

PREFIX foaf: <http://xmlns.com/foaf/0.1/>
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
PREFIX owl: <http://www.w3.org/2002/07/owl#>
PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>
SELECT DISTINCT ?page ?fbase WHERE {
  ?city a <http://dbpedia.org/ontology/Place> .
  ?city foaf:page ?page .
  ?city <http://www.w3.org/2003/01/geo/wgs84_pos#lat> ?lat .
  ?city <http://www.w3.org/2003/01/geo/wgs84_pos#long> ?long .
  ?city rdfs:label ?label .
  ?city owl:sameAs ?fbase .
  FILTER (?lat > "45.40736"^^xsd:float) .
  FILTER (?lat < "45.547058"^^xsd:float) .
  FILTER (?long > "9.07683"^^xsd:float) .
  FILTER (?long < "9.2763"^^xsd:float) .
  FILTER (regex(str(?label), "^Milan($|,.*)")) .
  # ?fbase is a URI, so it is converted with str() before applying regex()
  FILTER (regex(str(?fbase), "http://rdf.freebase.com/ns/")) .
}

Basically, what it gets are the Wikipedia page and the Freebase URI for a place whose name is "like" the one we are searching for, where "like" means either exactly the same name ("Milan") or one that begins with the specified name followed by a comma and some additional text (e.g. "Milan, Italy"). This takes into account cities whose Wikipedia page name also contains the country they belong to. Some more notes help to better understand how this works:

- I am querying for articles matching "Places" and not "Cities" because on DBPedia not all the cities are categorized as such (data is still not very consistent);

- I am matching rdfs:label against the name of the city, but unfortunately not all cities have such a property;

- requiring the Wikipedia article to have equivalent URIs linked through the owl:sameAs property is rather strict, but I saw that most cities had more than one such URI and, most of the time, the Freebase one I was searching for was among them.
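The query above is hard-wired for Milan. As a JavaScript sketch (the original woe2wp.pl script is in Perl and is not reproduced here), this is one way to generate an equivalent query from a city name and a bounding box, together with a local version of the "like" test on labels. The bounding-box field names (south, north, west, east) are my own assumption. The city name is escaped twice, once for the regular expression and once for the SPARQL string literal, so names such as "St. Louis" do not break the pattern; str() is applied to ?fbase because SPARQL's regex() expects a string, not a URI.

```javascript
// Sketch: generate a DBPedia query equivalent to the one above for any city.
// ASSUMPTION: bbox uses the hypothetical fields south/north/west/east (degrees).

// Escape characters that have a special meaning inside a regular expression.
function escapeRegex(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Quote a value as a SPARQL string literal (escape backslashes and double quotes).
function sparqlString(s) {
  return '"' + s.replace(/\\/g, "\\\\").replace(/"/g, '\\"') + '"';
}

// The "like" test: the exact name, or the name followed by a comma and more text.
function labelPattern(name) {
  return "^" + escapeRegex(name) + "($|,.*)";
}

function labelMatches(label, name) {
  return new RegExp(labelPattern(name)).test(label);
}

function buildDbpediaQuery(name, bbox) {
  return [
    "PREFIX foaf: <http://xmlns.com/foaf/0.1/>",
    "PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>",
    "PREFIX owl: <http://www.w3.org/2002/07/owl#>",
    "PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>",
    "PREFIX geo: <http://www.w3.org/2003/01/geo/wgs84_pos#>",
    "SELECT DISTINCT ?page ?fbase WHERE {",
    "  ?city a <http://dbpedia.org/ontology/Place> ;",
    "        foaf:page ?page ;",
    "        geo:lat ?lat ;",
    "        geo:long ?long ;",
    "        rdfs:label ?label ;",
    "        owl:sameAs ?fbase .",
    `  FILTER (?lat > "${bbox.south}"^^xsd:float && ?lat < "${bbox.north}"^^xsd:float)`,
    `  FILTER (?long > "${bbox.west}"^^xsd:float && ?long < "${bbox.east}"^^xsd:float)`,
    `  FILTER (regex(str(?label), ${sparqlString(labelPattern(name))}))`,
    '  FILTER (regex(str(?fbase), "http://rdf.freebase.com/ns/"))',
    "}",
  ].join("\n");
}

// Bounding box values taken from the Milan query above.
console.log(buildDbpediaQuery("Milan", { south: 45.40736, north: 45.547058, west: 9.07683, east: 9.2763 }));
```

The labelMatches helper is handy for checking locally why a given DBPedia label is or is not picked up before sending the query.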

This solution, of course, is still rather naive. I have tested it with a list of the WOEIDs of the top 233 cities around the world, and its recall is pretty bad: out of 233 cities, 96 returned empty results, which corresponds to a recall lower than 60%. The reasons for this are many: sometimes the geographic coordinates of the cities in Wikipedia are just outside the bounding box provided by GeoPlanet; other times the city name returned by Yahoo! does not match any of the labels provided by DBPedia, or no rdfs:label property is present at all; some cities are not even categorized as Places; very often accents or alternative spellings make the city name (which Yahoo! usually returns without special characters) untraceable within DBPedia; and so on.
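Accents are among the easier of these problems to attack. One possible mitigation, which is not part of the scripts described in this post, is to fold both names to a plain form before comparing them: Unicode NFD normalization splits an accented letter into a base letter plus a combining mark, and the marks can then be dropped. A JavaScript sketch, with hypothetical helper names:

```javascript
// Fold accented letters to their base letters: NFD splits "ü" into "u" plus a
// combining diaeresis, and the combining marks (U+0300 to U+036F) are removed.
function foldAccents(name) {
  return name.normalize("NFD").replace(/[\u0300-\u036f]/g, "");
}

// Compare two city names ignoring accents and letter case.
function sameCityName(a, b) {
  return foldAccents(a).toLowerCase() === foldAccents(b).toLowerCase();
}

console.log(foldAccents("São Paulo")); // prints Sao Paulo
```

This does not help with alternative spellings (Milan vs. Milano), and letters with no canonical decomposition, such as ø or ł, pass through unchanged.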

Looking for an alternative approach, I turned to good old Freebase. Its api/service/search API allows you to query the full-text index of Metaweb's content base for a city name or part of it, returning all the topics whose name or alias matches it and ranking them according to different parameters, including their popularity in Freebase and Wikipedia. This is a really powerful and versatile tool, and I suggest that anyone interested check its online documentation to get an idea of its potential. The script I built is very similar to the previous one: the only difference is that, after querying the Yahoo! APIs, it queries Freebase instead of DBPedia. The request it sends to the search API is like the following one:

where, as in the previous script, the city name and bounding box coordinates are provided by the Yahoo! APIs. Here are some notes to better understand the API call:

- the city name is provided as the query parameter, while type is set to /location/citytown to get only the cities from Freebase. In this case, I found that every city I was querying for was correctly assigned this type;

- the mql_output parameter specifies what you want in Freebase's response. In my case, I just asked for the Wikipedia ID (asking for the "key" whose "namespace" was /wikipedia/en_id). Speaking of IDs, Metaweb has done a great job of reconciling entities from different sources and already provides plenty of unique identifiers for its topics. For instance, here we could get not only Wikipedia's and Freebase's own IDs, but also GeoNames' ones if we wanted to (this is left to the reader as an exercise ;)). If you want to know more about this, just check the Id documentation page on the Freebase wiki;

- the mql_filter parameter allows you to specify constraints that filter data before it is returned by the system. This is very useful for us, as we can put our constraints on geographic coordinates here. I also specified the type /location/location to "cast" results to it, as it is the type that has the geolocation property. Finally, I repeated the constraint on the Wikipedia key, which is also present in the output: not all topics have this kind of key, and the API wants us to filter them out in advance.
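The exact request is not reproduced above, and the Freebase API has since been retired, so what follows is only a reconstruction sketch (in JavaScript) of how a request with the parameters described in these notes could be assembled. ASSUMPTIONS: the endpoint URL, the helper name, and the precise JSON shapes of mql_output and mql_filter are illustrative guesses; only the parameter names, the two types, and the /wikipedia/en_id namespace come from the post.

```javascript
// Hypothetical reconstruction of a Freebase search request.
// ASSUMED endpoint: the service has been shut down, so this only builds a URL.
const SEARCH_ENDPOINT = "http://api.freebase.com/api/service/search";

// bbox uses hypothetical fields south/north/west/east (decimal degrees).
function buildFreebaseSearchUrl(city, bbox) {
  // Output: only the English Wikipedia key of each matching topic.
  const mqlOutput = [{ key: { namespace: "/wikipedia/en_id", value: null } }];
  // Filter: cast results to /location/location, constrain the geolocation to
  // the bounding box, and require a Wikipedia key (assumed MQL shapes).
  const mqlFilter = [{
    type: "/location/location",
    geolocation: {
      "latitude>": bbox.south, "latitude<": bbox.north,
      "longitude>": bbox.west, "longitude<": bbox.east,
    },
    key: { namespace: "/wikipedia/en_id" },
  }];
  const params = new URLSearchParams({
    query: city,
    type: "/location/citytown",
    mql_output: JSON.stringify(mqlOutput),
    mql_filter: JSON.stringify(mqlFilter),
  });
  return SEARCH_ENDPOINT + "?" + params.toString();
}

console.log(buildFreebaseSearchUrl("Milan", { south: 45.40736, north: 45.547058, west: 9.07683, east: 9.2763 }));
```

URLSearchParams takes care of percent-encoding the JSON values, which is easy to get wrong when concatenating the query string by hand.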

Luckily, in this case the results were much more satisfying: only 9 out of 233 cities were not found, giving us a recall higher than 96%. Those cities were missing for the following reasons:

- three cities did not have the specified name as one of their alternative spellings;

- four cities had non-matching coordinates (this can be due either to Metaweb's data or to Yahoo's bounding boxes, however after a quick check it seems that Metaweb's are fine);

- two cities (Buzios and Singapore) just did not exist as cities in Freebase.
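For the record, both recall figures quoted in this post are the same simple ratio, where a city counts as matched when its query returned a non-empty result:

```latex
\text{recall} = \frac{\text{cities with a non-empty result}}{\text{cities queried}}
\qquad
\text{DBPedia: } \frac{233 - 96}{233} = \frac{137}{233} \approx 58.8\%
\qquad
\text{Freebase: } \frac{233 - 9}{233} = \frac{224}{233} \approx 96.1\%
```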

The good news is that, apart from the last case, the others can easily be fixed just by updating Freebase topics: for instance, one city (Benidorm) did not have any geographic coordinates at all, so (bow to the mighty power of the crowd, and of Freebase, which supports it!) I just added them, taking the values from Wikipedia, and now the tool works fine with it. Of course, I would not suggest that anybody run my 74-line script now to reconcile the WOEIDs of all the cities in the world and then manually fix the empty results; however, this gives us hope that, with some more programming effort, this reconciliation could be done without too much human involvement.

So, I'm pretty satisfied right now. At least for our small project (which will probably become the subject of a blog post sooner or later ;)) we got what we needed, and then who knows, maybe with someone's help we could improve the script and start adding WOEIDs to cities in Freebase... what do you think?

I have prepared a zip file with all the material I talked about in this post, so you don't have to follow too many links to get all you need. In the zip you will find:

- woe2wp.pl and woe2wpFB.pl, the two perl scripts;

- test*.pl, the two test scripts that run woe2wp or woe2wpFB over the list of WOEIDs provided in the following file;

- woeids.txt, the list of 233 WOEIDs I tested the scripts with;

- output*.txt, the (commented) outputs of the two test scripts.

Here is the zip package. Have fun ;)

Javascript scraper basics

As you already know, I am quite into scraping. The main reason is that I think what is available on the Internet (and in particular on the Web) should be consumed not only in the way it is provided, but also in more customized ways. We should be able to automatically gather information from different sources, integrate it, and obtain new information by processing what we found.

This is, of course, closely related to my research topic, the Semantic Web. In the SW we assume we already have data in formats that are easy for a machine to consume automatically, and a good part of the SW research community's efforts is directed towards standards and technologies that allow us to publish information this way. However, even though we have made some progress in this, there are still a couple of problems:

- the casual Internet user does not (and does not want to) know about these technologies and how to use them, so the whole "semantic publishing" process should be made totally invisible to her;

- most of the data that has been already provided on the Web in the last 20 years does not follow these standards. It has been produced for human consumption and cannot be easily parsed in an automatic way.

Fortunately, some structure in data exists anyway: information written in tables or infoboxes, or generated by software from structured data (e.g. data saved in a database), typically shows a structure that, albeit informal (that is, not following a formal standard), can be exploited to get the original structured data back. Scrapers rely on this principle to extract relevant information from Web pages and, if provided with a correct "crawling plan" (I'm makin' up this name, but I think you can easily understand what I mean ;)), they can more or less easily gather all the content we need, reconstructing the knowledge we are interested in and allowing us to perform new operations on it.

There are, however, cases in which this operation is not trivial. For instance, the crawling plan may be hard to reproduce (e.g. when contents belong to pages that do not follow a well-defined structure). Different problems arise when the page is generated on the fly by some Javascript code, which makes parsing the HTML source useless. This reminds me a lot of dynamically generated code as a software protection: when we moved from statically compiled code to code that updated itself at runtime (such as packed or encrypted executables), studying a protection on its "dead listing" became more difficult, so we had to change our tools (from simple disassemblers to debuggers or interactive disassemblers) and our approaches... but that is, probably, another story ;-) Finally, we might want to provide an easier, more interactive approach to Web scraping, one that lets users dynamically choose the page they want to analyze while they are browsing it, without requiring them to switch to another application.

So, how can we run scrapers dynamically, on the page we are viewing, even if part of its content has been generated on the fly? Well, we can write a few lines of Javascript code and run them from our own browser. Of course there are already apps that let you do similar things very easily (did anyone say Greasemonkey?), but starting from scratch is a good way to practice and learn new things... and you'll always be able to go back to GM later ;-) So, I am going to show you some very easy examples of Javascript code that I have tested on Firefox. I think they should be simple enough to run on other browsers too... if you have any problems with them, just let me know.

First things first: as usual I'll be playing with regular expressions, so check the Javascript regexp references at regular-expressions.info and javascriptkit.com (here and here), and use the online regex tester at regexpal.com. And, of course, to learn the basics of bookmarklets and get an idea of what you can do with them, check Fravia's and Ritz's tutorials. Also, check when these tutorials were written and realize how early their authors understood that Javascript would change the way we use our browsers...

Second things second: how can you test your Javascript code easily? Well, apart from pasting it into your location bar as a "javascript:" one-liner (we'll get to that later when we build our bookmarklets), I found the "jsenv" bookmarklet at squarefree very useful: just drag the jsenv link to your bookmarks toolbar, click on the new bookmark (or just click on the link in the page) and see what happens. From this very rudimentary development environment we'll be able to access the page's contents and test our scripts. Remember that this script can access only the contents of the page that is displayed when you run it, so if you want it to work with some other page's contents you'll need to open it again from that page.

Now we should be ready to test the examples. For each of them I will provide both some "human readable" source code and a bookmarklet you can "install" as you did with jsenv.
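Since every example ends up as a bookmarklet, it may help to see how a script becomes one. A common approach, sketched here in JavaScript, is to wrap the code in a function so its variables do not leak into the page, prefix it with void so the browser does not replace the page with the expression's value, and percent-encode the result. The helper name toBookmarklet is hypothetical.

```javascript
// Turn a script's source into a "javascript:" bookmarklet URL.
function toBookmarklet(source) {
  // The function wrapper keeps variables local; "void" makes the whole
  // expression evaluate to undefined so the current page is left in place.
  // The newline before "})" keeps a trailing // comment from swallowing it.
  const wrapped = "void (function(){" + source + "\n})();";
  // Percent-encode spaces, quotes, "#" and newlines so the URL stays intact.
  return "javascript:" + encodeURIComponent(wrapped);
}

const bm = toBookmarklet('alert("Hello from " + document.title);');
console.log(bm);
```

The resulting string can be pasted as the location of a new bookmark.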

Example 1: MySwitzerland.com city name extractor

This use case is a very practical one: for my work I needed to get a list of the tourist destinations in Switzerland as described within MySwitzerland.com website. I knew the pages I wanted to parse (one for every region in Switzerland, like this one) but I did not want to waste time collecting them. So, the following quick script helped me:

var results = "", array;
var content = document.documentElement.innerHTML;
var re = new RegExp("<div style=\"border-bottom:.*?>([^<]+)</div>", "gi");
while ((array = re.exec(content)) !== null) {
  results += array[1] + "<br/>";
}
document.documentElement.innerHTML = results;

Just a few quick comments about the code:

- line 2 gets the page content and saves it in the "content" variable

- line 3 initializes a new regular expression (whose content and modifiers are defined as strings) and saves it in the "re" variable

- line 4 executes the regexp on the "content" variable and, as long as it returns matches, saves each one in the "array" variable. As there is only one capturing group (the part of the regexp enclosed in parentheses), the captured text is stored in array[1]

- in line 5, the captured text followed by "<br/>" is appended to the "results" variable, which was initialized as an empty string in line 1

- in the last line, the value of "results" is printed as the new Web page content

The bookmarklet is here: MySwitzerland City Extractor.
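Because the bookmarklets read document.documentElement, they can only be tried inside a browser. To test the regular expressions elsewhere (for instance in Node), the same exec() loop can be wrapped in a function that takes an HTML string. This is a sketch with a hypothetical helper name and made-up sample markup; it also guards against a pattern that matches the empty string, which would otherwise loop forever.

```javascript
// Run a regexp over an HTML string and collect the capturing groups of every match.
function extractGroups(html, pattern) {
  // exec() only advances through the string when the "g" flag is set.
  const flags = pattern.flags.includes("g") ? pattern.flags : pattern.flags + "g";
  const re = new RegExp(pattern.source, flags);
  const results = [];
  let match;
  while ((match = re.exec(html)) !== null) {
    if (match[0] === "") {
      re.lastIndex++; // an empty match would not move lastIndex forward
    }
    results.push(match.slice(1)); // keep only the capturing groups
  }
  return results;
}

// Made-up markup shaped like the pages in Example 1.
const sample =
  '<div style="border-bottom:1px solid">Zermatt</div>' +
  '<div style="border-bottom:1px solid">Lugano</div>';
const names = extractGroups(sample, /<div style="border-bottom:.*?>([^<]+)<\/div>/i)
  .map((groups) => groups[0]);
console.log(names.join("<br/>"));
```

Once the pattern behaves as expected on saved copies of the pages, it can be pasted back into the bookmarklet.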

Example 2: Facebook's phonebook conversion tool

This example shows how to quickly create a CSV file out of Facebook's Phonebook page. It is a simplified version that only extracts the first phone number of every listed contact, and the regular expression is somewhat redundant, but it is a little more readable and should allow you, if you want, to easily find where it matches within the HTML source.

var output = "", array;
var content = document.documentElement.innerHTML;
var re = new RegExp("<div class=\"fsl fwb fcb\">.*?<a href=\"[^\"]+\">([^<]+)<.*?<div class=\"fsl\">([^<]+)<span class=\"pls fss fcg\">([^<]+)</span>", "gi");
while ((array = re.exec(content)) !== null) {
  output += array[1] + ";" + array[2] + ";" + array[3] + "<br/>";
}
document.documentElement.innerHTML = output;

As you can see, the structure is the very same as in the previous example. The only differences are the regular expression (which has three capturing groups: one for the name, one for the phone number, and one for the type, e.g. mobile, home, etc.) and, obviously, the output. You can get the bookmarklet here: Facebook Phonebook to CSV.
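Joining fields with ";" works as long as no field contains a semicolon or a double quote. A slightly safer variant, sketched below in JavaScript, applies the usual CSV quoting convention (the one RFC 4180 describes for commas): wrap such fields in double quotes and double any quote inside them. It also trims whitespace around each captured value, which is my own addition. The helper names and the sample contact are made up.

```javascript
// Quote a single CSV field when it contains the separator, a quote, or a newline.
function csvField(value, separator = ";") {
  const s = String(value).trim();
  if (s.includes(separator) || s.includes('"') || /[\r\n]/.test(s)) {
    return '"' + s.replace(/"/g, '""') + '"';
  }
  return s;
}

// Build one CSV row from the captured groups (name, phone number, type).
function csvRow(fields, separator = ";") {
  return fields.map((f) => csvField(f, separator)).join(separator);
}

console.log(csvRow(['Jane "JD" Doe', "+41 00 000 00 00 ", "Mobile"]));
```

In the bookmarklet, csvRow([array[1], array[2], array[3]]) would take the place of the manual concatenation.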

Example 3: Baseball stats

This last example shows how you can get new information from a Web page just by running a specific tool on it: data from the Web page, once extracted from the HTML code, becomes the input for an analysis tool (which can be more or less advanced; in our case it is trivial) that returns its output to the user. For this example I decided to calculate an average of the values shown in a baseball statistics table at baseball-reference.com. Of course, I know nothing about baseball, so I just picked a value even I could understand, that is the team members' age :) Yes, the script runs on a generic team roster page and returns the average age of its members... probably not very useful, but it should give you the idea. Ah, and speaking of this, there are already much more powerful tools that perform similar tasks, such as statcrunch, which automatically detects tables within Web pages and imports them so you can calculate statistics over their values. The advantage of our approach, however, is that it is completely general: any operation can be performed on any piece of data found within a Web page.

The code for this script follows. I have tried to be as verbose as I could, dividing data extraction into three steps (get the team name/year, extract the team members' list, get their ages). As the final message is just a string and does not require a page of its own, the calculated average is shown in an alert box.

var content, payrolls = "", team = "", sum = 0, count = 0, re, array, age;
content = document.documentElement.innerHTML;
// get team name/year
re = new RegExp("<h1>([^<]+)<", "gi");
if ((array = re.exec(content)) !== null) {
  team = array[1];
}
// extract "team future payrolls" to get team members' list
re = new RegExp("div_payroll([\\s\\S]+?)appearances_table", "i");
if ((array = re.exec(content)) !== null) {
  payrolls = array[1];
}
// extract members' ages and sum them (cells that are not numbers are skipped)
re = new RegExp("</a></td>\\s+<td align=\"right\">([^<]+)</td>", "gi");
while ((array = re.exec(payrolls)) !== null) {
  age = parseInt(array[1], 10);
  if (!isNaN(age)) {
    sum += age;
    count++;
  }
}
// avoid dividing by zero when no ages were found on the page
if (count > 0) {
  alert("The average age of " + team + " is " + (sum / count).toFixed(1) + " out of " + count + " team members");
} else {
  alert("No team members' ages found on this page");
}

The bookmarklet for this example is available here: Baseball stats - Age.
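As with the first example, the age-averaging step can be pulled out into a pure function over an HTML fragment, so it can be tried outside the browser. This JavaScript sketch reuses the example's age regexp, passes an explicit radix to parseInt, skips non-numeric cells, and returns null instead of dividing by zero when nothing matches. The function name and the sample fragment are made up.

```javascript
// Average the ages found in a roster fragment; returns null when none are found.
function averageAge(payrollsHtml) {
  const re = /<\/a><\/td>\s+<td align="right">([^<]+)<\/td>/gi;
  let sum = 0;
  let count = 0;
  let match;
  while ((match = re.exec(payrollsHtml)) !== null) {
    const age = parseInt(match[1], 10);
    if (!Number.isNaN(age)) { // skip cells that are not numbers
      sum += age;
      count++;
    }
  }
  return count === 0 ? null : { count, average: sum / count };
}

// Made-up fragment shaped like the rows the regexp expects.
const fragment =
  '<td><a href="/players/a.shtml">Player A</a></td>\n<td align="right">25</td>' +
  '<td><a href="/players/b.shtml">Player B</a></td>\n<td align="right">31</td>';
console.log(averageAge(fragment)); // { count: 2, average: 28 }
```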

Conclusions

In this article I have shown some very simple examples of Javascript scrapers that can be saved as bookmarklets and run on the fly while you are browsing specific Web pages. They should give you an idea of the potential of this approach and teach you the basics of Javascript regular expressions, so that you can apply them in other contexts. If you want to delve deeper into this topic, however, I suggest you take a look at Greasemonkey and its huge repository of user-provided scripts: that is probably the best way to go if you want to easily develop and share your powerbrowsing tools.

Perl Hacks: automatically get info about a movie from IMDB

OK, I know the title sounds like "hey, I found an easy API and here's how to use it"... well, the post is not much more advanced than that, but 1) it is not about an API but rather about a Perl package, and 2) it is not so trivial, as it does not deal with clean, precise movie titles but with another kind of use case you are quite likely to run into in real life.

Yes, we are talking about pirate video... and I hope that writing this here will bring some kids to my blog searching for something they won't find. Guys, go away and learn to search stuff the proper way... or stay here and learn something useful ;-)

So, the real-life use case: you have a directory containing videos you have downloaded or been lent by a friend (just in case you don't want to admit you have downloaded them ;-). All the filenames look like

The.Best.Movie.Ever(2011).SiLeNT.[dvdrip].md.xvid.whatever_else.avi

and yes, you can easily understand what the movie title is and automatically discard all the other crap, but how can software do this? And, first of all, why should software do this?

Well, suppose you are not watching movies on your computer, but rather on a media center. It would be nice if, instead of a list of ugly filenames, you were presented with a list of film posters, with their correct titles and additional information such as length, actors, etc. Moreover, if the information about your video files is structured, you can easily manage your movie collection, automatically categorizing it by genre, director, year, or whatever you prefer, and thus finding movies more easily. Ah, and of course you might just be interested in a tool that automatically renames files so that they comply with your naming standards (I know, nerds' life is a real pain...).

What we need, then, is a tool that links a filename (which we assume contains at least the movie title) to, basically, an id (like the IMDB movie id) that uniquely identifies that movie. Then, from IMDB itself we can get a lot of additional information. We need to perform two steps:

- convert an almost unreadable filename into something similar to the original movie title

- search IMDB for that movie title and get information about it

Of course, I expect that not all the results are going to be perfect: filenames might really be too messy or there could be different movies with the same name. However, I think that having a tool that gives you a big help in cleaning your movie collection might be fine even if it's not perfect.

Let's start with point 1: filename cleaning. First of all, you need to have an idea of how files are actually named. To find enough examples, I just did a quick Google search for divx dvdscr dvdrip xvid filetype:txt: it is surprising how many file lists you can find with so little effort :) Here are some examples of movie titles:

American.Beauty.1999.AC3.iNTERNAL.DVDRip.XviD-xCZ
Dodgeball.A.True.Underdog.Story.SVCD.TELESYNC-VideoCD
Eternal.Sunshine.Of.The.Spotless.Mind.REPACK.DVDSCr.XViD-DvP

and so on. All the additional keywords that you find together with the movie's title are actually useful, as they detail the quality of the video (e.g. SVCD vs DVDRip or screener), the group that created it, and so on. However, we currently don't need them, so we have to find a way to remove them. Moreover, a lot of junk such as punctuation and parentheses is added to most filenames, so it is very difficult to understand where the movie title ends and the rest begins.

To clean this mess, I first filtered everything so that only alphanumeric characters are kept and everything else is considered a separator (this is the equivalent of the tokenizing phase of a document indexer). Then I built a list of stopwords that need to be automatically removed from the filename. The list was built from the very same file I got the titles from (you can find it here or, if the link is dead, download the file ALL_MOVIES here). Just by counting the occurrences of each word with a simple perl script, I came up with a huge list of terms I could easily remove: to give you an idea of it, here's an excerpt of all the terms that occur at least 10 times in that file (count -> terms):

```
129 -> DVDRip
119 -> XviD
118 -> The
73 -> XViD
35 -> DVDRiP
34 -> 2003
33 -> REPACK DVDSCR
30 -> SVCD the
24 -> 2
22 -> xvid
20 -> LiMiTED
19 -> DVL dvdrip
18 -> of A DMT
17 -> LIMITED Of
16 -> iNTERNAL SCREENER
14 -> ALLiANCE
13 -> DiAMOND
11 -> TELESYNC AC3 DVDrip
10 -> INTERNAL and VideoCD in
```
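The counting script itself is not part of the post. A minimal sketch of what it might look like, assuming the file list has one filename per line: it tokenizes each name like heuristic #1 of cleanTitle (anything non-alphanumeric is a separator) and prints, one term per line, the tokens seen at least 10 times. Counting is case-sensitive, which matches the excerpt above where DVDRip, DVDRiP and dvdrip are separate entries. The names countTokens and frequentTokens are hypothetical.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Count every alphanumeric token in a list of filenames (case-sensitive).
sub countTokens {
    my @lines = @_;
    my %count;
    for my $line (@lines) {
        $count{$1}++ while $line =~ /([a-zA-Z0-9]+)/g;
    }
    return \%count;
}

# Tokens seen at least $min times, most frequent first;
# ties are broken alphabetically so the output is stable.
sub frequentTokens {
    my ($count, $min) = @_;
    return grep { $count->{$_} >= $min }
           sort { $count->{$b} <=> $count->{$a} || $a cmp $b } keys %$count;
}

# Usage: perl count.pl ALL_MOVIES
if (!caller && @ARGV) {
    chomp(my @lines = <>);
    my $count = countTokens(@lines);
    printf "%d -> %s\n", $count->{$_}, $_ for frequentTokens($count, 10);
}
```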

As you can see, most of the frequent terms are actually stopwords that can be safely removed without worrying too much about the original titles. I started with this list and then manually added new keywords I found after the first tests. The results are in my blacklist file. Finally, I decided to apply another heuristic to clean filenames: every time a sequence of tokens ends with a year (starting with 19- or 20-), chances are that it is just the movie's year and not part of the title; thus, this year has to be removed too. Of course, if the title is made up of only that year (e.g. 2010) it is kept. To sum up, the Perl code used to clean filenames is just the following:

```perl
sub cleanTitle {
    my ($dirtyTitle, $blacklist) = @_;
    my @cleanTitleArray;

    # HEURISTIC #1: everything which is not alphanumeric is a separator;
    # HEURISTIC #2: if an extracted word belongs to the blacklist then ignore it;
    while ($dirtyTitle =~ /([a-zA-Z0-9]+)/g) {
        my $word = lc($1); # blacklist is lowercase
        if (!defined $$blacklist{$word}) {
            push @cleanTitleArray, $word;
        }
    }

    # HEURISTIC #3: often movies have a date (year) after the title, remove
    # that (if it is not the title of the movie itself!);
    return "" unless @cleanTitleArray; # every word was blacklisted
    my $lastWord = pop(@cleanTitleArray);
    my $arraySize = @cleanTitleArray;
    # the whole token must be a 19xx/20xx year, not just contain one
    if ($lastWord !~ /^(19|20)\d\d$/ || !$arraySize) {
        push @cleanTitleArray, $lastWord;
    }

    return join(" ", @cleanTitleArray);
}
```
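cleanTitle expects the blacklist as a hash reference with lowercase keys. The format of the blacklist file is not described here, so this loader is a hypothetical sketch that assumes one term per line, skipping blank lines and lines starting with #:

```perl
use strict;
use warnings;

# Hypothetical helper: read one blacklisted term per line from an open
# filehandle and return a hash reference with lowercase keys, which is
# the shape cleanTitle() checks with defined $$blacklist{$word}.
sub loadBlacklist {
    my ($fh) = @_;
    my %blacklist;
    while (my $line = <$fh>) {
        chomp $line;
        $line =~ s/^\s+|\s+$//g;              # trim surrounding whitespace
        next if $line eq '' || $line =~ /^#/; # skip blanks and comments
        $blacklist{lc $line} = 1;
    }
    return \%blacklist;
}
```

With it, a call could look like `cleanTitle('American.Beauty.1999.AC3.iNTERNAL.DVDRip.XviD-xCZ', loadBlacklist($fh))`, where `$fh` is an open handle on the blacklist file.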

Step 2, instead, consists of sending the query to IMDB and getting information back. Fortunately this is a pretty trivial step, given that we have IMDB::Film available! The only thing I had to modify in the package was the function that returns alternative movie titles: it was not working, and I also wanted to customize it to return a hash ("language"=>"alt title") instead of an array. I started from an existing patch to the (currently) latest version of IMDB::Film that you can find here, and created my own Film.pm patch (note that the patch has to be applied to the original Film.pm and not to the patched version described in the bug page).

Access to IMDB methods is already well described in the package page. The only addition I made to my script was the getAlternativeTitle function, which gets all the alternative titles for a movie and returns, in order of priority, the Italian one if it exists, otherwise the international/English one. This is the code:

```perl
sub getAlternativeTitle {
    my $imdb = shift;
    my $altTitle = "";
    my $aka = $imdb->also_known_as(); # hash ref (language => alt title) from the patched Film.pm

    # sort the keys so that "first occurrence" does not depend on hash order
    foreach my $key (sort keys %$aka) {
        # NOTE: currently the default is to return the Italian title,
        # otherwise fall back to the first occurrence of International
        # or English. Change below here if you want to customize it!
        if ($key =~ /^Ital/) {
            $altTitle = $$aka{$key};
            last;
        } elsif ($key =~ /^(International|English)/ && $altTitle eq "") {
            $altTitle = $$aka{$key};
        }
    }

    return $altTitle;
}
```

So, that's basically all. The script has been built to accept only one parameter on the command line. For testing, if the parameter is recognized as an existing filename, the file is opened and parsed for a list of movie titles; otherwise, the string is considered as a movie title to clean and search.
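The argument handling of mt.pl is described but not shown. A minimal sketch of that behaviour might look like this; titlesFromArgument is a hypothetical name, and the cleaning and IMDB lookup are left as a comment:

```perl
use strict;
use warnings;

# If the argument names an existing file, return its non-empty lines as
# titles; otherwise treat the argument itself as the only title.
sub titlesFromArgument {
    my ($arg) = @_;
    return () unless defined $arg && length $arg;
    return ($arg) unless -f $arg;
    open my $fh, '<', $arg or die "Cannot open $arg: $!";
    my @titles;
    while (my $line = <$fh>) {
        chomp $line;
        push @titles, $line if $line =~ /\S/;
    }
    close $fh;
    return @titles;
}

if (!caller && @ARGV) {
    die "Usage: $0 <file-or-title>\n" unless @ARGV == 1;
    for my $title (titlesFromArgument($ARGV[0])) {
        # here mt.pl would call cleanTitle() and query IMDB::Film
        print "$title\n";
    }
}
```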

Testing has been very useful to understand whether the tool was working well. The precision is pretty good, as you can see from the attached file output.txt (if anyone wants to calculate the percentage of correctly identified movies... well, you are welcome!). However, I suspect it is somewhat biased towards this list, as it is the same one I took the stopwords from. If you have time to waste and want to work on a better, more complete stopword list (by now you know how easy it is to create one), please make it available somewhere! :-)

The full package, with the original script (mt.pl) and its data files is available here. Enjoy!

Perl Hacks: infogain-based term cloud

This is one of the very first tools I developed in my first year of post-doc. It took a while to publish it, as it was not clear what I could disclose about the project that was funding me. Now the project has ended and, after more than one year, the funding company still has not funded anything ;-) Moreover, this is something very far from the final results that we obtained, so I guess I can finally share it.

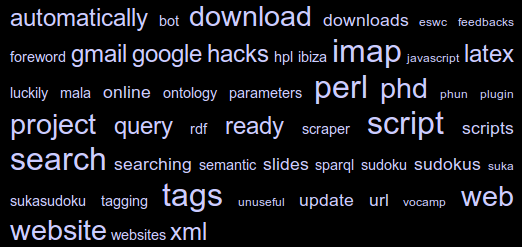

Rather than a real research tool, this is more of a quick hack that I built to show how we could use Information Gain to extract "interesting" words from a collection of documents, and term frequencies to show them in a cloud. I called it a "term cloud" because, even if it looks like the well-known tag clouds, it is not built from tags but from terms that are automatically extracted from a corpus of documents.

The tool is called "rain" as it is based on rainbow, an application built on top of the "bow" libraries that performs statistical text classification. The basic idea is that we use two sets of documents: the training set is used to instruct the system about what can be considered "common knowledge"; the test set is used to provide documents about the specific domain of knowledge we are interested in. As a result, the words that most likely discriminate the test set from the training one are selected, and their occurrences are used to build the final cloud.
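rainbow computes Information Gain internally, and the exact variant it uses (for instance, word counts versus document presence) is not covered here. As an illustration of the standard definition, IG(t) = H(C) - P(t)·H(C|t) - P(not t)·H(C|not t), this sketch computes the gain of the binary feature "document contains the term" with respect to the two classes, training set and test set:

```perl
use strict;
use warnings;

# Entropy (in bits) of a distribution given as raw counts.
sub entropy {
    my @counts = grep { $_ > 0 } @_;
    my $total = 0;
    $total += $_ for @counts;
    return 0 unless $total;
    my $h = 0;
    for my $c (@counts) {
        my $p = $c / $total;
        $h -= $p * log($p) / log(2);
    }
    return $h;
}

# $with->[i]  : documents of class i that contain the term
# $total->[i] : all documents of class i
sub infoGain {
    my ($with, $total) = @_;
    my @without = map { $total->[$_] - $with->[$_] } 0 .. $#$total;
    my ($n, $nWith) = (0, 0);
    $n     += $_ for @$total;
    $nWith += $_ for @$with;
    return 0 unless $n;
    my $nWithout = $n - $nWith;
    return entropy(@$total)
         - ($nWith / $n)    * entropy(@$with)
         - ($nWithout / $n) * entropy(@without);
}
```

With two equally sized sets, a term found in every test document and in no training document gets the maximum gain of 1 bit, while a term spread evenly across both sets gets 0.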

Everything is done within a pretty small Perl script, which does little more than call the rainbow tool (which has to be installed first!) and use its output to perform calculations and build an HTML page with the generated cloud. You can download the script from these two locations:

- here you can find a barebones version, which only contains the script and a few test document collections. The tool works (that is, it does not return errors) even without training data, but it will not perform well unless it is properly trained. You will also have to download and install rainbow before you can use it;

- here you can find an "all inclusive" version of the script. It is much bigger but it provides: the ".deb" file to install rainbow (don't be frightened by its release date, it still works with Ubuntu Maverick!), and a training set built by collecting all the posts from the "20_newsgroups" data set.

How can I run the rain tool?

Supposing you are using the "all inclusive" version, that is, you already have your training data and rainbow installed, running the tool is as easy as typing

```
perl rain.pl <path_to_test_dir>
```

The script parameters can be modified within the script itself (see the following excerpt from the script source):

```perl
my $TERMS = 50;     # size of the pool (top words by infogain)
my $TAGS = 50;      # final number of tags (top words by occurrence)
my $SIZENUM = 6;    # number of size classes to be used in the HTML document,
                    # represented as different font sizes in the CSS
my $FIREFOX_BIN = '/usr/bin/firefox';      # path to browser binary
                    # (if present, firefox will be called to open the HTML file)
my $RAINBOW_BIN = '/usr/bin/rainbow';      # path to rainbow binary
my $DIR_MODEL = './results/model';         # used internally by rainbow
my $DIR_DATA = './train';                  # path to training dir
my $DIR_TEST = $ARGV[0];                   # path to test dir
my $FILE_STOPLIST = './stopwords.txt';     # stopwords file
my $FILE_TMPDATA = './results/data.txt';   # file where data generated
                                           # by rainbow will be dumped
my $HTML_TEMPLATE = './template.html';     # template file used to generate the
                                           # tag-cloud html page
my $HTML_OUTPUT = './results/output.html'; # final html page
```
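The excerpt does not show how the pool of $TERMS words is reduced to $TAGS tags and mapped onto $SIZENUM font sizes. One plausible way, sketched here under that assumption (linear scaling between the smallest and largest count; buildCloud, renderCloud and the sizeN CSS classes are hypothetical names, not taken from rain.pl):

```perl
use strict;
use warnings;

# Keep the $numTerms words with the highest infogain, then the $numTags
# most frequent among them, and give each a size class from 1 (smallest
# font) to $sizenum (largest), interpolating linearly between min and max.
sub buildCloud {
    my ($infogain, $count, $numTerms, $numTags, $sizenum) = @_;
    # words missing from %$count are treated as occurring 0 times
    my %c = map { $_ => ($count->{$_} // 0) } keys %$infogain;

    my @pool = sort { $infogain->{$b} <=> $infogain->{$a} || $a cmp $b } keys %$infogain;
    splice(@pool, $numTerms) if @pool > $numTerms;

    my @tags = sort { $c{$b} <=> $c{$a} || $a cmp $b } @pool;
    splice(@tags, $numTags) if @tags > $numTags;
    return {} unless @tags;

    my ($min, $max) = ($c{$tags[-1]}, $c{$tags[0]});
    my %class;
    for my $w (@tags) {
        $class{$w} = $max == $min
            ? 1
            : 1 + int(($c{$w} - $min) / ($max - $min) * ($sizenum - 1) + 0.5);
    }
    return \%class;
}

# One <span> per word, alphabetically. Words are assumed to be plain
# alphanumeric tokens, so no HTML escaping is done.
sub renderCloud {
    my ($class) = @_;
    return join ' ', map { qq(<span class="size$class->{$_}">$_</span>) } sort keys %$class;
}
```

In rain.pl terms the call would be something like `buildCloud(\%infogain, \%count, $TERMS, $TAGS, $SIZENUM)`, with both hashes filled from rainbow's output. A logarithmic scale is a common alternative when a few words dominate the counts.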

As you can see, there are quite a lot of parameters, but the script can also be run right out of the box: for instance, if you type

```
perl rain.pl ./test/folksonomies
```

you will see the cloud shown in Figure 1. And now, here is another term cloud built using my own blog posts as a text corpus:

Pirate Radio: Let your voice be heard on the Internet

[Foreword: this is article number 4 of the new "hacks" series. Read here if you want to know more about this.]

"I believe in the bicycle kicks of Bonimba, and in Keith Richards' riffs": whoever recognizes this movie quote will easily imagine what it feels like to speak on the radio, throwing into the air a message that could virtually be heard by anyone. Despite all the new technologies that came after the invention of radio, its charm has remained the same; moreover, the evolution of the Internet has given us a chance to become deejays, using simple software and broadcasting our voice on the Net instead of over radio signals. So, why don't we use these tools to create our own "pirate radio", streaming non-copyrighted music and information free from the control of the big media?

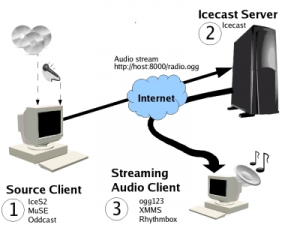

Figure 1: Streaming servers work as antenna towers for the signals you send them, broadcasting your streams to all the connected users.

Technical details

It is not too difficult to understand how a streaming radio works (see Figure 1): all you need is a streaming server, which receives an audio stream from your computer and makes this stream available to all its listeners. This is the most versatile solution and allows anyone, even with a simple 56kbps modem, to broadcast without bandwidth problems. The only limit is that you need a server to send your stream to: fortunately, many servers are available for free and it is pretty easy to find a list of them online (for instance, at http://www.radiotoolbox.com/hosts). A slightly more complex solution, which allows you to be completely autonomous, is to install a streaming audio server on your own machine (if you have one that is always connected to the Internet), so you'll be your own broadcaster. Of course, in this case the main limit is bandwidth: an ADSL line is more than enough if you don't have many listeners, but you might need something more powerful as the number of listeners grows. While creating a Web radio is not a trivial task (we need to set up a streaming server and an application to send the audio stream to it), listening to it is very easy: most of the audio applications currently available (e.g. Media Player, Winamp, XMMS, VLC) can connect to and play an audio stream given its URL.

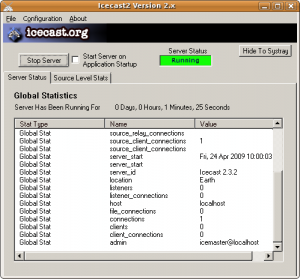

Install the software

The two best-known audio streaming technologies are currently SHOUTcast (http://www.shoutcast.com) and Icecast (http://icecast.org). The first one is proprietary and its software is closed source, even if it is distributed for free; the second one, instead, is based on an open source server and supports different third-party applications that are also distributed under free licenses. Even if SHOUTcast is somewhat easier to use (the software is basically integrated into Winamp), we chose Icecast as it is far more versatile. The Icecast server is available both for Windows and Linux: the Windows version has a graphical interface while the Linux one runs as a service; in both cases, the configuration is managed through a text file called icecast.xml. Most of the settings can be left untouched; however, it is good practice to replace the default password ("hackme") with a custom one inside the authentication section. Once you have installed and configured your server you can run it, and it will start waiting for connections.

The applications you can use to connect to Icecast and broadcast audio are many, and they differ in genre and complexity. Among the ones we tested, we consider the following the most interesting:

- LiveIce is a client that can be used as an XMMS plugin. Its main advantage is its simplicity: you just need to play mp3 files with XMMS to automatically send them to the Icecast server;

- OddCast, which is basically the equivalent of LiveIce for Winamp;

- DarkIce, a command line tool that streams audio directly from a generic device to the server. The application is both mature and still frequently updated, and it is known to be quite stable;

- Muse, a much more advanced tool, which is able to mix up to six audio channels and the "line in" of your audio card, and also to save the stream on your hard disk so you can reuse it (for instance creating a podcast);

- DyneBolic, finally, is a live Linux distribution that gives you all the tools you might need to create an Internet radio: inside it, of course, you will also find Icecast and Muse.

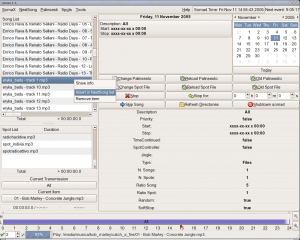

Manage the program schedule

One of the main differences between an amateur radio and a professional one is the management of the program schedule: the tools we have described so far, in fact, are not able to schedule programs depending on the current time or to play songs as fillers between programs. Soma Suite is an application that can solve this problem, as it is able to create programs of different types: playlists (even randomly generated ones), audio streams (yours or taken from other radios), files, and so on.

Leave a trace on the Net

One of the main requirements for something worth calling a radio is to have listeners. However, how can we be heard if nobody knows us yet? Certainly not by broadcasting our IP address (maybe even a dynamic one!) every time we decide to stream. Luckily, there are a couple of solutions to this problem. The first one is to advertise your radio in some well-known directories: you can configure your Icecast server so that it automatically sends your current IP address to one of these lists whenever you run it. Icecast itself provides one of these listings, but of course you can choose different ones (even at the same time) to advertise your stream. The second solution is to publish your programs online as podcasts: this way, even those who could not follow you in real time will be able to get to know you and tune in at the right time to listen to your next transmission.

Now that you have a radio, what can you broadcast? Even if the temptation to play copyrighted music regardless of what the majors think might be strong, why should we do what every other website (from YouTube to Last.fm) is already doing? Talk, let your ideas be heard by the whole world, and when you want to provide some good music choose free alternatives such as the ones you find here and here.

Request for Comments: learn Internet standards by reading the documents that gave them birth

[Foreword: this is article number 3 of the new "hacks" series. Read here if you want to know more about this. A huuuuuge THANX to Aliosha who helped me with the translation of this article!]

A typical characteristic of hackers is the desire to understand, down to the most intimate details, the way any machinery works. From this point of view, the Internet is one of the most interesting objects of study, since it offers a huge variety of concepts to learn: just think how many basic formats and standards it relies on... and all the nice hacks we could perform once we understand how they work!

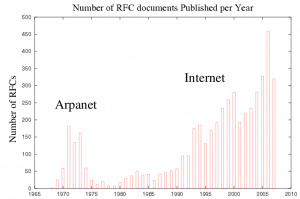

Luckily, most of these standards are published in freely accessible and easily obtainable notes: these documents are called RFCs (Requests for Comments), and they have been used for almost 40 years to share information and observations regarding Internet formats, technologies, protocols and standards. The first RFC dates back to 1969, and since then more than 5500 have been published. Each one of them has to pass a demanding selection process led by the IETF (Internet Engineering Task Force), whose task, as described in RFC 3935 and 4677, is "to manage the Internet in such a way as to make the Internet work better."

Jon Postel, one of the authors of the first RFCs and a contributor to the project for 28 years, always typed on his keyboard using only two fingers :-)

The RFC format

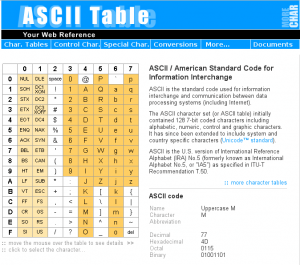

In order to become an RFC, a technical document must above all follow a very strict standard. At first glance, what strikes us is its stark look: a simple text file with 73 columns, formatted exclusively with standard ASCII characters. On second thought, it is easy to understand the reason for this choice: what format has not changed since 1969 and can be displayed on any computer, no matter how old it is or which OS it runs?

Despite the fact that many standards are now more than stable, the number of published RFCs keeps increasing.

Every RFC has a header with information that is especially important for the reader. In addition to title, date and authors, there is the unique serial number of the document, its relations with preceding documents, and its category. For example (see figure below), the most recent RFC that describes the SMTP protocol is 5321, which updates RFC 1123, obsoletes RFC 2821, and belongs to the "Standards Track" category. In general, if we read that a document has been "obsoleted" by another, it is better to look for the newer one, since it will contain more up-to-date information.
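These header fields are regular enough to be extracted automatically. A small sketch, assuming the usual plain-text layout where the left column holds the fields and the right column holds authors and date (older RFCs may lack some fields; parseRfcHeader is a hypothetical name):

```perl
use strict;
use warnings;

# Extract number, updates, obsoletes and category from the header block
# at the top of a plain-text RFC. The header ends at the first blank line
# after at least one field has been found.
sub parseRfcHeader {
    my ($text) = @_;
    my %meta;
    for my $line (split /\r?\n/, $text) {
        last if $line !~ /\S/ && %meta;
        my ($left) = split /\s{2,}/, $line;   # drop the right-hand column
        next unless defined $left;
        if ($left =~ /^Request for Comments:\s*(\d+)/) {
            $meta{number} = $1;
        } elsif ($left =~ /^(Updates|Obsoletes):\s*(.+)/) {
            my ($field, $value) = (lc $1, $2);
            $meta{$field} = [ $value =~ /(\d+)/g ];
        } elsif ($left =~ /^Category:\s*(.+)/) {
            $meta{category} = $1;
        }
    }
    return \%meta;
}
```

Fed the first lines of RFC 5321, it should report number 5321, obsoletes 2821, updates 1123 and category "Standards Track".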

There are several categories of RFCs, depending on the level of standardization the described protocol or format had reached at the moment of publication. The documents considered the most official are split into three main categories: well-established standards (standard), drafts (draft) and proposed standards (proposed). There are also three non-standard classes: experimental documents (experimental), informative ones (informational), and historical ones (historic). Finally, there is an "almost standard" category containing the Best Current Practices (BCP), that is, non-official practices that are considered the most sensible to adopt.

Finding the document we want

Now that we understand the meaning of RFC metadata (the data that pertain not to the content of the document but to the document itself), we only have to take a peek inside the official IETF archive to see if there is information of interest to us. There are several ways to find an RFC: the first, and simplest, can be used when we know the document's serial number and consists of opening the address http://www.ietf.org/rfc/rfcxxxx.txt, where xxxx is that number. For instance, the first RFC in computer history is available at http://www.ietf.org/rfc/rfc0001.txt.
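The naming scheme is simple enough to script. This sketch builds the URL from a serial number using the pattern given above; the archive layout may have changed since this was written:

```perl
use strict;
use warnings;

# Archive URL for an RFC: the number is zero-padded to four digits
# (rfc0001.txt); sprintf never truncates longer numbers.
sub rfcUrl {
    my ($number) = @_;
    die "Not an RFC number: $number\n"
        unless defined $number && $number =~ /^\d+$/ && $number > 0;
    return sprintf 'http://www.ietf.org/rfc/rfc%04d.txt', $number;
}
```

The text can then be downloaded, for example, with the core HTTP::Tiny module: `my $res = HTTP::Tiny->new->get(rfcUrl(1)); print $res->{content} if $res->{success};`.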

Another search approach is to start from a protocol name and search for all the documents related to it. To do this, we can use the list of Official Internet Protocol Standards available at http://www.rfc-editor.org/rfcxx00.html. In this list you can find the acronyms of many protocols, their full names and the standards they belong to: for instance, the IP, ICMP, and IGMP protocols are described in different RFCs but they are all part of the same standard (STD 5).

Finally, you can search documents according to their status or category: at http://www.rfc-editor.org/category.html you can find an index of RFCs ordered by publication status and, in each section, up-to-date documents appear in black while obsolete ones appear in red, together with the number of the RFC that obsoleted them.

The tools we have just described should be enough in most cases: we usually know at least the name of the protocol we want to study, if not the number of the RFC where it is described. However, whenever we just have a vague idea of the concepts we want to learn, we can use the search engine available at http://www.rfc-editor.org/rfcsearch.html. If, for instance, we want to know more about the encoding used for mail attachments, we can just search for "mail attachment" and obtain the list of titles of the RFCs that deal with this topic (in this case, RFC 2183).

What should I read now?

When you have an archive like this available, the biggest problem you have to face is the huge quantity of information: a lifetime is not enough to read all of these RFCs! Search options, fortunately, help in filtering out everything that is not interesting to us. But what could be good starting points for our research?

If we don't know where to begin, having a look at the basic protocols is always a good start: you can begin with the easiest, higher-level ones, such as those for email (POP3, IMAP, and SMTP, already partially described here), the Web (HTTP), or other famous application-level protocols (FTP, IRC, DNS, and so on). Transport and network protocols, such as TCP and IP, are more complicated but no less interesting than the others.

If, instead, you are looking for something simpler, you can check informational RFCs: they contain many interesting documents, such as RFC 2151 (A Primer On Internet and TCP/IP Tools and Utilities) and 2504 (Users' Security Handbook). April Fools' documents deserve a special mention, being jokes written as formal RFCs (http://en.wikipedia.org/wiki/April_Fools%27_Day_RFC). Finally, if you still have problems with English (so, why are you reading this? ;-)) you might want to search the Internet for RFCs translated into your language. For instance, at http://rfc.altervista.org, you can find the Italian version of the RFCs describing the most common protocols.

Hacking Challenge: challenge hackers in a skill game

[Foreword: this is article number 2 of the new "hacks" series. Read here if you want to know more about this]

[Foreword 2: if you know me, you also know I usually don't use the term "hacker" lightly. I'm sure you will understand what I mean here without being offended, whether you are a (real) hacker or not ;-)]

In recent years, also thanks to the fact that website creation has become a much easier and quicker task, the number of hacking challenges on the Internet has increased considerably. These websites usually consist of a series of riddles or puzzles, published in order of increasing difficulty; by solving one of these riddles you can gain points or advance to higher levels, where you can access new resources inside the website. Riddles and puzzles, of course, are "tailored for hackers": the knowledge required to participate covers a little bit of everything technical, from scripting languages to cryptography, from reverse engineering to Internet search techniques. Participating in these challenges is a very interesting experience, not only because it is instructive, but also because it allows you to network with other people who share your passion. And, after all, a bit of narcissism doesn't hurt: most of the hacking challenges you can find around the Web also have a "hall of fame", where you can see the (nick)names of the hackers who reached the highest scores.

Figure 1: The website http://ascii-table.com provides an ASCII table with decimal, hexadecimal, octal, and binary codes, together with a collection of tools to convert text into different formats. You'll be surprised at how useful this can be.

Create new riddles

If participating in a hacking challenge as a player is fun, letting people play your own challenge can be really awesome. Becoming a "riddler" is not particularly complicated from a technical viewpoint: all you need is some time, together with lots of creativity.

The main idea is that the final answer to a riddle can always be summarized as a simple string of text: in the easiest case it could be a name, in the most complex one a (more or less long) sequence of apparently random characters. The easiest way to check whether the string is right is to use it as part of the URL of the page containing the next riddle. You can ask users to manually type this URL into their browsers or use some JavaScript code to generate it automatically: if the answer is right the correct page will be loaded, otherwise the Web server will return an error message. More advanced methods to check riddle solutions involve scripting languages (such as Perl, PHP, or Python) and passwords saved in a file or a database.
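As a sketch of the URL-based check, assuming the answer is normalized to lowercase letters and digits and each riddle page is named after the normalized answer of the previous one (nextLevelUrl and the ".html" naming are hypothetical choices):

```perl
use strict;
use warnings;

# Turn a player's answer into the address of the next riddle.
# A wrong answer simply yields a page that does not exist (404).
sub nextLevelUrl {
    my ($base, $answer) = @_;
    (my $slug = lc $answer) =~ s/[^a-z0-9]+//g;   # keep letters and digits only
    return if $slug eq '';                         # nothing usable was typed
    return "$base/$slug.html";
}
```

Normalizing spares players from guessing the exact capitalization or punctuation. Remember to disable directory listings on the server, or every answer is one click away.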

The tools

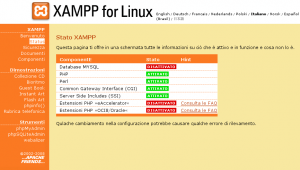

Whatever your choice, you will not have many problems finding the Web space and the software you need to create your own hacking challenge. There are currently lots of free Web space providers, and many of them also give you the chance to run scripts or store your data in databases. You can also practice by creating a test environment locally on your PC, using ready-made LAMP (Linux+Apache+MySQL+PHP) packages. For instance, XAMPP (http://www.apachefriends.org/it/xampp.html) is an Apache distribution that comes together with PHP, MySQL, and Perl: the installation procedure has been designed to be as simple as possible, and in a few minutes you'll be able to start experimenting with your site.

Figure 2: XAMPP is one of the quickest ways to run a LAMP server on your Windoze, Linux, or Mac computer.

Find inspiration

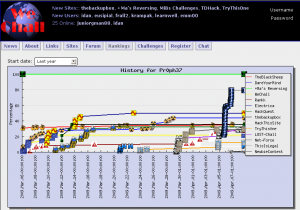

Before you build a new riddle you had better gather some information, checking what has already been created and what you like most in general. The Web is full of hacking challenges you might get some inspiration from; however, finding the one that might be most interesting for you is a riddle on its own. To help you choose, instead of searching for challenges on classical search engines you had better start from some more specific websites. Hackergames.net is a "historical" portal for this genre, with links to about 150 different challenges: for each of them you can find details such as the main language, a description, and a list of reviews written by users themselves. We Chall, instead, despite linking to fewer challenges, has introduced a whole new API-like communication system between websites; using it, it can aggregate scores from different challenges, allowing users to be listed in a global ranking that spans many sites. Last but not least, keep an eye on all those sites (such as TheBlackSheep) that accept contributions from their own users, as they give you the chance to see your riddles published without requiring you to develop and maintain a website on your own.

Figure 3: We Chall plots, for each user, the progress made in every challenge she has subscribed to.

Hacker psychology

The main rule in a hacking challenge worthy of the name is that there are no rules. If a solution to your riddle is not the one you had envisioned, well... that's a good thing: it means that whoever found it is more creative than you! Finding alternative ways to reach a goal is a very common hacker approach, so you shouldn't be surprised if, while trying to solve your riddles, somebody tries to exploit your system's vulnerabilities. So, here are some suggestions to keep your challenge as fun as possible, both for your players and for you:

- check how secure your scripts are, in particular against the most common types of exploit (such as SQL injection, if you save data inside a database);

- do not rely on "security by obscurity", that is, on making the security of your website depend on the secrecy of some pieces of information: take for granted that they will be discovered sooner or later and act accordingly. For instance, do not keep the solutions to your riddles in cleartext but rather encrypt them, so that whoever finds them will have to sweat a little more to get to the next level;

- one of the simplest, yet most effective, ways to crack a short password is bruteforcing. So, use secret strings that are long and hard to bruteforce, and make this clear to everyone: this way, users will not waste time on a bruteforce attack they know is useless (and you will save a lot of bandwidth);

- if you are good with programming, you can intentionally leave some bugs in the system so that users will be able to exploit them enabling new features inside your site, such as a secret forum or a list of hidden resources: there's no better incentive for hackers than the possibility of shaping a system according to their own will!

- if you receive a message from a user warning you about a vulnerability, consider it a great privilege: instead of defacing your site, they have sent you a constructive contribution! Try to learn from it, fix the bug and document everything, so that all the other users will be able to learn something new from your error and from the skill of whoever discovered it. Finally, challenge everyone to find more: this will make the game even more interesting.
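The advice on not storing solutions in clear and on using long secrets can be combined. This sketch swaps the suggested encryption for a salted one-way hash (Digest::SHA ships with Perl), which is enough to check an answer without ever storing it; the salt, the level names and the keyspace helper are illustrative assumptions:

```perl
use strict;
use warnings;
use Digest::SHA qw(sha256_hex);

my $SALT = 'change-me';   # placeholder: pick your own random string

# Hash of the normalized answer: this, not the answer, goes in the file/DB.
sub hashAnswer {
    my ($answer) = @_;
    (my $normalized = lc $answer) =~ s/^\s+|\s+$//g;
    return sha256_hex($SALT . $normalized);
}

# 1 if $answer solves $level, 0 otherwise (including unknown levels).
sub checkAnswer {
    my ($solutions, $level, $answer) = @_;
    return 0 unless exists $solutions->{$level};
    return hashAnswer($answer) eq $solutions->{$level} ? 1 : 0;
}

# Candidates a bruteforcer must try for a secret of $len symbols
# drawn from an alphabet of $size symbols.
sub keyspace {
    my ($size, $len) = @_;
    return $size ** $len;
}
```

For scale, keyspace(36, 8), an 8-character secret of lowercase letters and digits, is about 2.8 million million candidates, and every extra character multiplies that by 36. SHA-256 is fast, so the real protection still comes from the length of the secret.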

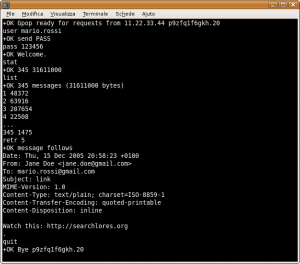

Telnet Email: access your email without a mail client

[This is article number 1 of the new "hacks" series. Read here if you want to know more about this]

In the beginning was the command line. Then, the evolution of software saw more and more complex graphical applications, able to abstract from low-level machine operations and make work much easier for the end user. All of this, of course, had a price: the loss of control. But we are not common users... and if there is one thing we want it is having control over what happens on our computers!

Let's think, for instance, about email: in most cases we can access our mailbox with a browser, but only through an interface that has already been defined by our provider; this interface often contains advertisements and forces us to stay connected while we read our mail. We can configure an email client and make it download our messages from the server, but this choice has its drawbacks too: what should we do, for instance, if the computer we are using is not ours? The solution to this problem is very easy: let's go back to the origins, manually executing all the operations that an email client automatically performs whenever it downloads our email from a server. The only tool we need is available on any computer: it is called telnet and it can be run from the command line (that is, from the Windows Command Prompt or from the Mac OS X and Linux Terminal). The only data we need to know in advance are the address and port of our mail server, which are usually specified by our provider in its mail client configuration howtos, together with our account's login and password.

Mail servers

Mail servers available on the Internet usually belong to one of two categories: outgoing or incoming mail. The former usually use SMTP (Simple Mail Transfer Protocol) and are accessed to send messages, while the latter use either POP3 (Post Office Protocol 3) or IMAP (Internet Message Access Protocol); these are the ones we will describe in more detail in this article, as they are used to download messages from a mailbox to our computer. Most of the time, mail server addresses are built by taking the domain name of your email address and adding a prefix that matches the protocol used: for instance, for gmail.com addresses the outgoing server is called smtp.gmail.com, while the incoming ones are called pop.gmail.com and imap.gmail.com. The last parameter you need to connect to a server is the port: the default values are 110 for POP3 and 143 for IMAP.

Let's keep things private

Before starting, however, you should be aware of the following: every time you connect to one of the ports we just described, your data is transferred in the clear. This means that anyone sniffing the packets sent over the network would be able to read what you write, password included. Luckily, some mail servers also accept encrypted connections (see below): in this case, the default ports are 995 for POP3 and 993 for IMAP. Finally, remember that everything you type, whether or not you are using an encrypted connection, is shown on the screen, so you had better check that nobody is near you before entering your password...

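NOTE: mail servers which, like gmail, require an encrypted connection cannot be reached with a simple telnet connection. However, you can use the openssl program (available from the OpenSSL project), whose s_client command opens an encrypted connection and then lets you type commands just like telnet. Beware that, when used interactively, s_client interprets a line starting with R or Q as a command of its own instead of sending it to the server, which clashes with POP3 commands such as RETR, RSET and QUIT; adding the -quiet option disables this behaviour. The syntax to connect is the following: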

openssl s_client -connect <server name>:<port>

For instance:

openssl s_client -connect pop.gmail.com:995

Connect to the server

After you have chosen the connection type and checked that you have the correct settings, you can finally connect to your mail server using telnet. To do this, you first have to open a terminal: on Windows, select Run from the Start menu, type cmd, then press Enter; on a Mac, open the Terminal application from the Applications/Utilities folder; on Linux you can find it in the Utilities or Tools section of your menu (or you can get a full screen terminal by pressing CTRL+ALT+F1). Once the terminal is open, you can connect to the server by typing

telnet <server address> <port>

For instance:

telnet pop.mydomain.com 110

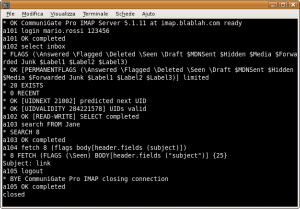

If the connection is opened correctly, the server replies saying it is ready to receive commands. The images show the main commands you can run on a POP server, and below you can find examples of sessions with both POP and IMAP servers. IMAP is a little more complex than POP (for instance, every command has to be preceded by a tag, usually an incremental value, which the server repeats in its final reply), but it is also much more powerful, as it allows you to manage your mail in folders and mark messages with specific flags. All you have to do now is experiment with this new tool, maybe a little spartan but free from the restrictions imposed by proprietary interfaces, and find new ways to manage your email with telnet.
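For the curious, here is roughly what telnet (or openssl s_client, for encrypted servers) does when it connects, sketched in Python with the standard socket and ssl modules. open_mail_connection is a hypothetical helper, not part of any library: it opens a TCP connection, optionally wraps it in TLS, and reads the greeting that the server sends as soon as you connect.

```python
import socket
import ssl


def open_mail_connection(host, port, use_tls=False, timeout=10.0):
    """Connect to a mail server and return (reader, writer, greeting).

    use_tls=False plays the role of `telnet host port`; use_tls=True plays
    the role of `openssl s_client -connect host:port`.
    """
    sock = socket.create_connection((host, port), timeout=timeout)
    if use_tls:
        # Unlike s_client, the default context refuses servers whose
        # certificate cannot be verified against the system trust store.
        context = ssl.create_default_context()
        sock = context.wrap_socket(sock, server_hostname=host)
    # Binary file objects make line-oriented reading and writing easy.
    reader = sock.makefile("rb")
    writer = sock.makefile("wb")
    # The server speaks first: "+OK ..." for POP3, "* OK ..." for IMAP.
    greeting = reader.readline().decode("utf-8", "replace").rstrip("\r\n")
    return reader, writer, greeting
```

For instance, open_mail_connection("pop.gmail.com", 995, use_tls=True) should return the same greeting you would see in the s_client session. Verifying the certificate makes this stricter than s_client, which by default prints verification errors and goes on anyway.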

POP3

The POP3 protocol is quite simple and is specified in RFC 1939. Here's a list of the main commands:

- USER <username>: specifies your email account's login

- PASS <password>: specifies (in clear) your email account's password

- STAT: shows the number of messages in the mailbox and their total size in octets

- LIST: shows the list of messages with their size in octets

- RETR <message id>: retrieves the message identified by <message id>

- TOP <message id> <n>: shows the headers and the first <n> lines of the body of the message

- DELE <message id>: marks the specified message for deletion; it is actually removed from the server only when the session is closed with QUIT

- RSET: unmarks all the messages marked with DELE during the current session

- QUIT: ends the POP3 session, deleting the messages marked with DELE, and disconnects from the server
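To see how little magic there is in a mail client, here is a minimal sketch of the POP3 dialogue in Python. It relies on two rules that are worth knowing when you type commands by hand too: every command is a single line ending with CRLF, and multi-line answers (LIST without arguments, RETR, TOP) end with a line containing a single dot, while message lines that start with a dot are sent with an extra dot in front. Pop3Session and Pop3Error are hypothetical names; the class works on any pair of binary streams, for instance the ones returned by sock.makefile("rb") and sock.makefile("wb"). For real work, Python's standard poplib module already implements all of this.

```python
class Pop3Error(Exception):
    """Raised when the server answers -ERR instead of +OK."""


class Pop3Session:
    """Minimal POP3 dialogue (RFC 1939) over a pair of binary streams."""

    def __init__(self, reader, writer):
        self.reader = reader
        self.writer = writer
        self.greeting = self._status_line()  # e.g. "+OK POP3 server ready"

    def _status_line(self):
        raw = self.reader.readline()
        if not raw:
            raise ConnectionError("server closed the connection")
        line = raw.decode("utf-8", "replace").rstrip("\r\n")
        if not line.startswith("+OK"):
            raise Pop3Error(line)
        return line

    def command(self, text):
        """Send a command with a one-line answer (USER, PASS, STAT, DELE, RSET, QUIT)."""
        self.writer.write(text.encode("utf-8") + b"\r\n")  # POP3 lines end with CRLF
        self.writer.flush()
        return self._status_line()

    def multiline(self, text):
        """Send a command with a multi-line answer (LIST, RETR, TOP)."""
        status = self.command(text)
        lines = []
        while True:
            raw = self.reader.readline()
            if not raw:
                raise ConnectionError("connection closed mid-response")
            line = raw.rstrip(b"\r\n")
            if line == b".":  # a lone dot terminates the answer
                return status, lines
            if line.startswith(b"."):  # undo byte-stuffing: ".." becomes "."
                line = line[1:]
            lines.append(line)
```

Raising an exception on -ERR keeps the sketch short; a friendlier client would report the error and keep the session open.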

IMAP

The IMAP protocol is specified in RFC 3501 and is far more complex than POP3. For this reason, instead of a list of commands we just show an example session; check the RFC, or search for "IMAP and TELNET", for more details.

- 01 LOGIN <login> <pass>: authenticates on the server

- 02 LIST "" *: shows the list of available folders

- 03 SELECT INBOX: opens the INBOX folder

- 04 STATUS INBOX (MESSAGES): shows the number of messages in the INBOX folder

- 05 FETCH <messagenum> FULL: shows the flags, date, size, envelope (a summary of the main header fields) and structure of the specified message; use BODY[HEADER] instead of FULL to download the raw header

- 06 FETCH <messagenum> BODY[TEXT]: downloads the body of the specified message

- 07 LOGOUT: disconnects from the server
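The tag mechanism is what makes IMAP a bit harder to drive by hand: after each command the server may send any number of untagged lines (starting with *) before the line that starts with your tag and says OK, NO or BAD. Here is a minimal Python sketch of this loop. ImapSession is a hypothetical name, the tags mimic the 01, 02... numbering of the session above, and the only other rule it handles is the IMAP literal: a {n} at the end of a line announces n raw bytes, which is how a FETCH of BODY[TEXT] usually returns the message. Python's standard imaplib module is the ready-made alternative.

```python
import re

# A line ending in {n} followed by CRLF announces an IMAP literal of n raw bytes.
LITERAL = re.compile(rb"\{(\d+)\}\r\n$")


class ImapSession:
    """Minimal tagged IMAP dialogue (RFC 3501) over a pair of binary streams."""

    def __init__(self, reader, writer):
        self.reader = reader
        self.writer = writer
        self.counter = 0
        self.greeting = reader.readline()  # untagged, e.g. b"* OK ..."

    def _read_line(self):
        line = self.reader.readline()
        if not line:
            raise ConnectionError("server closed the connection")
        # Append each announced literal plus the rest of the line after it.
        match = LITERAL.search(line)
        while match:
            line += self.reader.read(int(match.group(1)))
            rest = self.reader.readline()
            line += rest
            match = LITERAL.search(rest)
        return line

    def command(self, text):
        """Send one tagged command; return (status, list of response lines)."""
        self.counter += 1
        tag = f"{self.counter:02d}".encode("ascii")  # 01, 02, ...
        self.writer.write(tag + b" " + text.encode("utf-8") + b"\r\n")
        self.writer.flush()
        lines = []
        while True:
            line = self._read_line()
            lines.append(line)
            # The tagged line "<tag> OK|NO|BAD ..." closes the response.
            if line.startswith(tag + b" "):
                return line.split()[1].decode("ascii"), lines
```

Reading literals by length, instead of line by line, also means that a message body containing a line that looks like a tagged reply cannot end the response early.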