Perl Hacks: infogain-based term cloud

This is one of the very first tools I developed during the first year of my post-doc. It took a while to publish it, as it was not clear what I could disclose of the project that was funding me. Now the project has ended and, after more than one year, the funding company still has not funded anything ;-) Moreover, this is very far from the final results we obtained, so I guess I can finally share it.

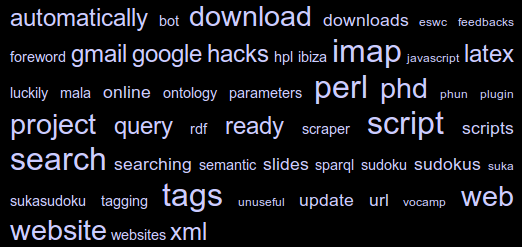

Rather than a real research tool, this is more of a quick hack that I put together to show how we could use Information Gain to extract "interesting" words from a collection of documents, and term frequencies to display them in a cloud. I have called it a "term cloud" because, even though it looks like the well-known tag clouds, it is built not from tags but from terms that are automatically extracted from a corpus of documents.

The tool is called "rain" as it is based on rainbow, an application built on top of the "bow" library that performs statistical text classification. The basic idea is to use two sets of documents: the training set tells the system what can be considered "common knowledge", while the test set provides documents about the specific domain of knowledge we are interested in. The words that best discriminate the test set from the training set are then selected, and their occurrence counts are used to build the final cloud.
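For readers unfamiliar with information gain, here is a minimal Perl sketch of the quantity in the two-set setting just described: the class C is either "training" or "test", and the feature is whether a document contains the word w, so that IG(w) = H(C) - P(w) H(C|w) - P(not w) H(C|not w). This is a textbook, document-presence version written only for illustration; rain gets its scores from rainbow, whose exact counting may differ, and the function names `entropy` and `infogain` are hypothetical.

```perl
use strict;
use warnings;
use List::Util qw(sum);

# Entropy (in bits) of a distribution given as raw counts.
sub entropy {
    my @counts = grep { $_ > 0 } @_;
    my $total  = sum(0, @counts);
    return 0 unless $total;
    my $h = 0;
    for my $c (@counts) {
        my $p = $c / $total;
        $h -= $p * log($p) / log(2);
    }
    return $h;
}

# Information gain of a word w for the two-class split {training, test}.
# $train_with / $test_with: documents in each set that contain w;
# $train_total / $test_total: total number of documents in each set.
sub infogain {
    my ($train_with, $train_total, $test_with, $test_total) = @_;
    my $n = $train_total + $test_total;
    return 0 unless $n;
    my $with    = $train_with + $test_with;
    my $without = $n - $with;

    my $h_class   = entropy($train_total, $test_total);
    my $h_with    = entropy($train_with, $test_with);
    my $h_without = entropy($train_total - $train_with, $test_total - $test_with);

    return $h_class - ($with / $n) * $h_with - ($without / $n) * $h_without;
}

# A word found in every test document and in no training document
# separates the two sets perfectly: with 10 + 10 documents its IG is 1 bit.
printf "%.3f\n", infogain(0, 10, 10, 10);
```

A word spread evenly across both sets (e.g. `infogain(5, 10, 5, 10)`) scores 0, which is exactly why "common knowledge" words drop out of the cloud.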

Everything is done by a fairly small Perl script, which does little more than call the rainbow tool (which has to be installed first!) and use its output to do the remaining calculations and build an HTML page with the generated cloud. You can download the script from these two locations:

- here you can find a barebones version, which only contains the script and a few test document collections. The tool runs (that is, it does not return errors) even without training data, but it will not perform well unless it is properly trained. You will also have to download and install rainbow before you can use it;

- here you can find an "all inclusive" version of the script. It is much bigger, but it provides the ".deb" file to install rainbow (don't be frightened by its release date, it still works with Ubuntu Maverick!) and a training set built from all the posts in the "20_newsgroups" data set.
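The last step of the script, building the HTML page, can be pictured with a minimal Perl sketch like the one below. It assumes each selected term has already been assigned a size class; the `sizeN` CSS class names, the `<!-- CLOUD -->` placeholder and the helper names are hypothetical and not taken from rain's actual template.

```perl
use strict;
use warnings;

# Escape the characters that are special in HTML text.
sub html_escape {
    my ($s) = @_;
    $s =~ s/&/&amp;/g;    # must come first
    $s =~ s/</&lt;/g;
    $s =~ s/>/&gt;/g;
    $s =~ s/"/&quot;/g;
    return $s;
}

# Turn (term => size class) pairs into cloud markup, sorted alphabetically
# as in most tag clouds. Each class is meant to map to a font size in the CSS.
sub cloud_html {
    my (%class_of) = @_;
    return join "\n",
        map { sprintf '<span class="size%d">%s</span>', $class_of{$_}, html_escape($_) }
        sort keys %class_of;
}

# Replace the (hypothetical) placeholder in the template text with the cloud.
sub fill_template {
    my ($template, $cloud) = @_;
    $template =~ s/<!-- CLOUD -->/$cloud/;
    return $template;
}

print fill_template("<div><!-- CLOUD --></div>\n",
                    cloud_html(tag => 6, folksonomy => 3));
```

Keeping the markup in a separate template file means the look of the cloud can be changed by editing the CSS alone, without touching the Perl code.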

How can I run the rain tool?

Assuming you are using the "all inclusive" version, i.e. you already have your training data and rainbow installed, running the tool is as easy as typing

```
perl rain.pl <path_to_test_dir>
```

The script parameters can be modified within the script itself (see the following excerpt from the script source):

```perl
my $TERMS         = 50;                       # size of the pool (top words by infogain)
my $TAGS          = 50;                       # final number of tags (top words by occurrence)
my $SIZENUM       = 6;                        # number of size classes to be used in the HTML document,
                                              # represented as different font sizes in the CSS
my $FIREFOX_BIN   = '/usr/bin/firefox';       # path to browser binary
                                              # (if present, firefox will be called to open the HTML file)
my $RAINBOW_BIN   = '/usr/bin/rainbow';       # path to rainbow binary
my $DIR_MODEL     = './results/model';        # used internally by rainbow
my $DIR_DATA      = './train';                # path to training dir
my $DIR_TEST      = $ARGV[0];                 # path to test dir
my $FILE_STOPLIST = './stopwords.txt';        # stopwords file
my $FILE_TMPDATA  = './results/data.txt';     # file where data generated
                                              # by rainbow will be dumped
my $HTML_TEMPLATE = './template.html';        # template file used to generate the
                                              # tag-cloud html page
my $HTML_OUTPUT   = './results/output.html';  # final html page
```
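To make the role of `$TERMS`, `$TAGS` and `$SIZENUM` more concrete, here is a minimal Perl sketch of the selection they describe: keep the `$TERMS` words with the highest infogain, then the `$TAGS` most frequent among them, then spread their counts over `$SIZENUM` size classes. The linear binning and the function name `select_and_size` are assumptions for illustration; the actual script may compute sizes differently (a logarithmic scale is also common).

```perl
use strict;
use warnings;
use List::Util qw(min max);

# $ig:    hashref word => infogain score
# $count: hashref word => occurrences in the test documents
# Returns a hash word => size class in 1 .. $sizenum.
sub select_and_size {
    my ($ig, $count, $nterms, $ntags, $sizenum) = @_;
    $nterms  //= 50;   # default of $TERMS
    $ntags   //= 50;   # default of $TAGS
    $sizenum //= 6;    # default of $SIZENUM

    # 1. pool: the $nterms words with the highest infogain (ties broken alphabetically)
    my @pool = sort { $ig->{$b} <=> $ig->{$a} || $a cmp $b } keys %$ig;
    splice @pool, $nterms if @pool > $nterms;

    # 2. tags: the $ntags most frequent words in the pool
    my %n    = map { ($_ => $count->{$_} // 0) } @pool;
    my @tags = sort { $n{$b} <=> $n{$a} || $a cmp $b } @pool;
    splice @tags, $ntags if @tags > $ntags;
    return () unless @tags;

    # 3. map occurrences linearly onto 1 .. $sizenum, rounding to the nearest class
    my ($lo, $hi) = (min(@n{@tags}), max(@n{@tags}));
    my %class_of;
    for my $w (@tags) {
        $class_of{$w} = $hi == $lo
            ? 1
            : 1 + int(($n{$w} - $lo) / ($hi - $lo) * ($sizenum - 1) + 0.5);
    }
    return %class_of;
}

# "c" is the most frequent word but has the lowest infogain, so with a pool
# of 2 it never reaches the cloud; "a" gets the largest class, "b" the smallest.
my %cloud = select_and_size({ a => 0.9, b => 0.8, c => 0.1 },
                            { a => 10,  b => 1,   c => 100 }, 2, 2, 6);
print "$_ => $cloud{$_}\n" for sort keys %cloud;
```

Filtering by infogain first and by frequency second is what keeps very frequent but uninformative words out of the cloud, even when they would otherwise dominate it.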

As you can see, there are quite a few parameters, but the script can also be run straight out of the box: for instance, if you type

```
perl rain.pl ./test/folksonomies
```

you will see the cloud shown in Figure 1. And now, here is another term cloud built using my own blog posts as a text corpus:

January 18th, 2011 - 15:59

Hi,

I’m currently trying to employ rain for the extraction of concepts from a curriculum. However, it doesn’t seem to like my new stopword list (in German)… it’s sorted alphabetically, one word per line, and stored as plain text (non-Unicode).

Is there anything else I should be aware of?

Trying to run it gives a failed assertion in bow_strtrie_add.

My training data with the original stopword file works fine, however.

FYI, libbow is available from the Ubuntu repositories for the 64-bit architecture… it seems to work OK.

Thanks for any help,

Andy

January 19th, 2011 - 17:29

Hi Andy,

hmmm… I remember I had some similar problems (that is, errors returned by rainbow itself and not trapped by my perl script) with the stopwords file, and most of them were bound to non-alphabetic characters like quotes (i.e. in “I’ve”, “we’ll”, etc.), dashes (i.e. like “et-al”) and tabs (I forgot one in a line). I guess the tool does not accept any character which is not alphanumeric. The weird thing is that on the rainbow page (http://www.cs.cmu.edu/~mccallum/bow/rainbow/) it seems like the tool is supporting the SMART stoplist, which (http://jmlr.csail.mit.edu/papers/volume5/lewis04a/a11-smart-stop-list/english.stop) also includes stopwords with apices… mah!

Does removing apostrophes and such drastically affect your work? If so, you might like to know that I am about to release (hopefully soon) a more advanced Java tool which relies directly on Lucene for tokenization and should be more stable… at least as far as this aspect is concerned ;-)

Cheers,

da