The cookie jar metaphor

If you search Google for "cookie jar metaphor" you will find the well-known "hand in the cookie jar" one, so coming up with a new metaphor built on the same image might sound pretty ambitious... However, this is how it came to my mind and I do not want to change it, even if it ends up drowned in an ocean of unrelated information.

Here is the thing: suppose I have been saving my money in a cookie jar for a while and I have now collected a rather good amount. One day I decide to give that money to the people who really need it. So I open the jar, I put it on my kitchen table with a sign next to it saying "Free money, take as much as you need", and I leave my door unlocked so that anyone can come in and take it.

Would that money really be shared with others? Would it be shared with the ones who need it?

I have been writing free code basically for as long as I have been writing code. Every time I released a new piece of software (well, usually not amazing apps) I uploaded it somewhere and put a link on my website. Well, for a while my website was a hacker's challenge closed to search engines and to most Internet users :-), but apart from that I have always tried to share what I built in one way or another. When I started to do research, I tried to do the same with my discoveries, tools, and datasets. I actually had a chance to share some of my stuff with some people, and every time it has been a win-win situation, as I learned from others at least as much as they did from me.

What I have learned, however, is that most of the time I have just left my cookie jar open on the table, without reaching many people outside, and above all without reaching the ones who might have been most interested in what I was doing. I always thought that pushing my discoveries and accomplishments too much was more like showing off than sharing, and I assumed that anyone interested in the same things would probably have found my work anyway.

Well, I guess I was SO wrong about this. Telling people that I have that open cookie jar is my duty, if what I want to do is share the money I have collected in it. So I think that this kind of communication is part of free software as much as writing code, and part of research as much as studying others' work and writing papers about your own.

So now here's the problem: how can I share my stuff more effectively? I guess by being more active within specific communities (those who might get the most out of what I share), by using other channels to communicate (e.g. I sometimes tweet about my blog updates, and I guess I'll do that more often - do you have #hashtags to suggest?), but most of all by finding the time to do all of this.

Are you doing this? If so, do you have any comments or suggestions? Any feedback is welcome, either here or by any other electronic or real life means ;-)

New (old) paper: “On the use of correspondence analysis to learn seed ontologies from text”

Here is another piece of work done in the last year(s), and here is its story. In January 2009, as soon as I finished my PhD, I was put in contact with a company looking for people to implement Fionn Murtagh's Correspondence Analysis methodology for the automatic extraction of ontologies from text. After clarifying my position about it (that is, that what was extracted were just taxonomies and that I thought the process should become semi-automatic), I started a 10-month project at my university, officially funded by that company. I say "officially" because, while I regularly received my paycheck each month from the university, the company does not seem to have paid yet, almost two years after the beginning of the project. Well, I guess they are probably just late and I am sure they will eventually do that, right?

Anyway, the project was interesting even if it started as just the development of someone else's approach. The good point is that it gave us some interesting insights into how ontology extraction from text works in practice, which real-world problems you have to face, and how to address them. And the best thing is that, after the end of the project, we found we had enough enthusiasm (and, most importantly, a Master's student, Fabio Marfia... thanks! ;)) to continue it.

Fabio has done great work, taking the tool I had developed, extending it with new features, and testing them on real-world examples. The outcome of our work, together with Fabio's graduation of course (you can find material about his thesis here), is the paper "On the use of correspondence analysis to learn seed ontologies from text", which we wrote together with Matteo Matteucci. You can find the paper here, while here you can download its poster.

The work is not finished yet: there are still some aspects of the project that we would like to delve deeper into and there are still things we have not shared about it. It is just a matter of time, however, so stay tuned ;-)

Perl Hacks: infogain-based term cloud

This is one of the very first tools I developed in my first year as a post-doc. It took a while to publish it, as it was not clear what I could disclose about the project that was funding me. Now the project has ended and, after more than one year, the funding company still has not funded anything ;-) Moreover, this is something very far from the final results that we obtained, so I guess I can finally share it.

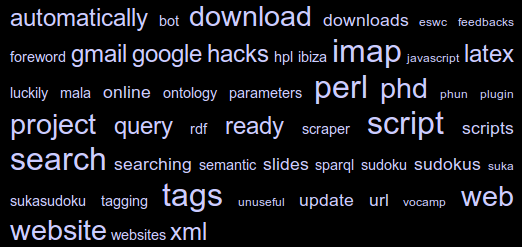

Rather than a real research tool, this is more like a quick hack that I put together to show how we could use Information Gain to extract "interesting" words from a collection of documents, and term frequencies to show them in a cloud. I have called it a "term cloud" because, even if it looks like the well-known tag clouds, it is not built from tags but from terms that are automatically extracted from a corpus of documents.

The tool is called "rain" as it is based on rainbow, an application built on top of the "bow" libraries that performs statistical text classification. The basic idea is that we use two sets of documents: the training set is used to teach the system what can be considered "common knowledge"; the test set provides documents about the specific domain of knowledge we are interested in. The result is that the words most likely to discriminate the test set from the training set are selected, and their occurrences are used to build the final cloud.
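To give an idea of what "discriminating" means here, this is a minimal sketch of information gain computed from document counts, treating the training set and the test set as two classes. It is only an illustration: the `entropy` and `infogain` helpers and all the counts are made up for this example, and rainbow's own computation may well differ in its details.

```perl
use strict;
use warnings;

# Shannon entropy (in bits) of a distribution given as raw counts.
sub entropy {
    my @counts = @_;
    my $total = 0;
    $total += $_ for @counts;
    return 0 if $total == 0;
    my $h = 0;
    for my $c (@counts) {
        next if $c == 0;
        my $p = $c / $total;
        $h -= $p * log($p) / log(2);
    }
    return $h;
}

# Information gain of a word with respect to the train/test split.
# $df_train, $df_test: documents in each set that contain the word
# $n_train,  $n_test : total number of documents in each set
sub infogain {
    my ($df_train, $df_test, $n_train, $n_test) = @_;
    my $n       = $n_train + $n_test;
    my $with    = $df_train + $df_test;    # documents containing the word
    my $without = $n - $with;              # documents not containing it
    return entropy($n_train, $n_test)
         - ($with / $n)    * entropy($df_train, $df_test)
         - ($without / $n) * entropy($n_train - $df_train, $n_test - $df_test);
}

# Hypothetical counts: 100 training documents and 20 test documents.
my ($N_TRAIN, $N_TEST) = (100, 20);
my %doc_freq = (
    the        => [100, 20],    # appears in every document
    server     => [30,  6],     # same proportion in both sets
    folksonomy => [0,   15],    # appears only in the test set
);

for my $word (sort keys %doc_freq) {
    printf "%-12s %.4f\n", $word, infogain(@{ $doc_freq{$word} }, $N_TRAIN, $N_TEST);
}
```

A word that is spread evenly over both sets tells you nothing about which set a document comes from, so its gain is zero (up to rounding), while a word that shows up only in the test set gets a clearly positive score.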

Everything is done within a pretty small Perl script, which does not do much more than call the rainbow tool (which has to be installed first!) and use its output to perform some calculations and build an HTML page with the generated cloud. You can download the script from these two locations:

- here you can find a bare-bones version, which only contains the script and a few test document collections. The tool works (that is, it does not return errors) even without training data, but it will not perform well unless it is properly trained. You will also have to download and install rainbow before you can use it;

- here you can find an "all inclusive" version of the script. It is much bigger but it provides: the ".deb" file to install rainbow (don't be frightened by its release date, it still works with Ubuntu Maverick!), and a training set built by collecting all the posts from the "20_newsgroups" data set.

How can I run the rain tool?

Supposing you are using the "all inclusive" version, that is, you already have your training data and rainbow installed, running the tool is as easy as typing

    perl rain.pl <path_to_test_dir>

The script parameters can be modified within the script itself (see the following excerpt from the script source):

    my $TERMS         = 50;                      # size of the pool (top words by infogain)
    my $TAGS          = 50;                      # final number of tags (top words by occurrence)
    my $SIZENUM       = 6;                       # number of size classes to be used in the HTML document,
                                                 # represented as different font sizes in the CSS
    my $FIREFOX_BIN   = '/usr/bin/firefox';      # path to browser binary
                                                 # (if present, firefox will be called to open the HTML file)
    my $RAINBOW_BIN   = '/usr/bin/rainbow';      # path to rainbow binary
    my $DIR_MODEL     = './results/model';       # used internally by rainbow
    my $DIR_DATA      = './train';               # path to training dir
    my $DIR_TEST      = $ARGV[0];                # path to test dir
    my $FILE_STOPLIST = './stopwords.txt';       # stopwords file
    my $FILE_TMPDATA  = './results/data.txt';    # file where data generated
                                                 # by rainbow will be dumped
    my $HTML_TEMPLATE = './template.html';       # template file used to generate the
                                                 # tag-cloud html page
    my $HTML_OUTPUT   = './results/output.html'; # final html page
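To make the roles of `$TERMS`, `$TAGS` and `$SIZENUM` a bit more concrete, here is a rough sketch of how the three could work together: first a pool of words is chosen by infogain, then the most frequent ones are kept, and finally each count is mapped to a size class. This is not the actual code of rain.pl: the word list, the scores, the linear mapping and the `sizeN` CSS class names are all assumptions made up for this example, and the parameter values are kept tiny so that the effect is visible.

```perl
use strict;
use warnings;

my $TERMS   = 4;    # size of the pool (top words by infogain)
my $TAGS    = 3;    # final number of tags (top words by occurrence)
my $SIZENUM = 6;    # number of size classes

# Hypothetical data: word => [infogain, occurrences in the test set]
my %words = (
    folksonomy => [0.41, 120],
    tag        => [0.35, 300],
    ontology   => [0.30,  45],
    user       => [0.12, 210],
    the        => [0.00, 999],
);

# Step 1: keep the $TERMS words with the highest information gain.
my @pool = sort { $words{$b}[0] <=> $words{$a}[0] } keys %words;
@pool = @pool[0 .. $TERMS - 1] if @pool > $TERMS;

# Step 2: among those, keep the $TAGS most frequent ones.
my @tags = sort { $words{$b}[1] <=> $words{$a}[1] } @pool;
@tags = @tags[0 .. $TAGS - 1] if @tags > $TAGS;

# Step 3: map each count linearly onto a size class in 1..$SIZENUM
# (assumed mapping; a logarithmic scale would be another option).
my ($max, $min) = ($words{ $tags[0] }[1], $words{ $tags[-1] }[1]);
my %size;
for my $word (@tags) {
    my $ratio = $max == $min ? 1 : ($words{$word}[1] - $min) / ($max - $min);
    $size{$word} = 1 + int($ratio * ($SIZENUM - 1) + 0.5);
}

# Print the terms in alphabetical order, one HTML span per term.
for my $word (sort @tags) {
    printf qq{<span class="size%d">%s</span>\n}, $size{$word}, $word;
}
```

Note how "the" never reaches the cloud even though it is by far the most frequent word: it is filtered out in the first step because it does not discriminate between the two document sets.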

As you can see, there are quite a lot of parameters but the script can also be run just out of the box: for instance, if you type

    perl rain.pl ./test/folksonomies

you will see the cloud shown in Figure 1. And now, here is another term cloud built using my own blog posts as a text corpus:

New (old) paper: “GVIS: A framework for graphical mashups of heterogeneous sources to support data interpretation”

I know this is not a recent paper (it was presented in May), but I am slowly doing a recap of what I have done during the last year and this is one of the updates you might have missed. "GVIS: A framework for graphical mashups of heterogeneous sources to support data interpretation", by Luca Mazzola, me, and Riccardo Mazza, is my first paper with Luca (and definitely not the last, as we have already written another!), and it has been great fun for me. We had a chance to merge our works (his modular architecture and my semantic models and tools) to obtain something new, namely the visualization of a user profile based on her browsing history and on tags retrieved from Delicious.

Curious about it? You can find the document here (local copy: here) and the slides of Luca's presentation here.

One year of updates

If you know me, you probably also know that I sometimes disappear for months and then come back saying "I'm still alive, here is the latest news, from now on you'll receive more regular updates".

Well, this time it's different: I'm still alive, I've got plenty of news for you (maybe even too much), but I cannot promise there will be regular updates. This is due to one of the most important pieces of news I've got to tell you.

Almost one year ago (to be more precise, 10 months and one day ago) I became a father. This is the best thing that has ever happened in my life, and the greatest thing is that it is going to last for a while ;-) Of course life has changed, sometimes becoming better and sometimes worse... but after I got accustomed to it I must say it's ok now ;-) Of course I think you NEVER get accustomed to it, but at least you realize it and start to live with it: it is something like knowing you do not know, you just get accustomed to the fact that there's no way to get accustomed to almost anything anymore, as things will always, continuously change. Which is always good news for me.

Ok, nothing really technical here, but I hope that the few readers I have will be happy to hear the good news. More good news (if you are more interested in the technical updates) is that I haven't been standing still and I actually have some things to tell you. Of course these require time, so if you are not patient enough to wait for my next update just send me an email (or call me, or invite me for a talk) and we can talk about this in person.

Take care :-)