Who’s this guy?

Well, I'm not exactly interested in him, but...

http://del.icio.us/brentparker

Haven't you noticed anything strange inside his del.icio.us page?

No? Well, compare it with another user (statistically, you should find a "normal" one more easily).

Is he alone, or are there other "bugged users" inside del.icio.us?

Ok, ok, I'll stop reading my scraper's log ;-)

Perl Hacks: del.icio.us scraper

At last, I built it: a working, quite stable del.icio.us scraper. I needed a dataset big enough to run some experiments on (for a research project I'll tell you about sooner or later), so I had to create something which would not only allow me to download my own stuff (as the del.icio.us API does), but also data from other users connected with me.

Even though this is a first release, I have tested the script quite a lot over the past few days, and it's stable enough to let you back up your data and get some more if you're doing research on this topic (BTW, if so let me know, we might exchange some ideas ;-)). Here are some of its advantages:

- it just needs a list of users to start and then downloads all their bookmarks

- it saves data inside a DB, so you can query them, export them in any format, do some data mining and so on

- it runs politely, with a 5-second sleep between page downloads, so as to avoid bombarding the del.icio.us website with requests

- it supports the use of a proxy

- it's very tweakable: most of its parameters can be easily changed

- it's almost ready for a distributed version (that is, it supports table locking so you can run many clients which connect to a centralized database)
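To give an idea of the "polite" loop and the DB storage described above, here is a minimal sketch in Python (not the Perl script itself). The `fetch_page` callable, the `bookmarks` table layout and the use of SQLite are all assumptions made for illustration; the real scraper's schema and page parser may look quite different.

```python
import sqlite3
import time


def scrape(users, fetch_page, db_path=":memory:", delay=5.0, sleep=time.sleep):
    """Download every user's bookmarks, sleeping between page requests.

    fetch_page(user, page) is a hypothetical callable that returns a list
    of (url, tags) tuples, or an empty list when there are no more pages.
    """
    conn = sqlite3.connect(db_path)
    # Hypothetical schema: one row per (user, url), tags stored space-separated.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS bookmarks (user TEXT, url TEXT, tags TEXT)"
    )
    first_request = True
    for user in users:
        page = 1
        while True:
            if not first_request:
                sleep(delay)  # be polite: pause between page downloads
            first_request = False
            items = fetch_page(user, page)
            if not items:
                break  # an empty page means this user is finished
            with conn:  # commit each page as one transaction
                conn.executemany(
                    "INSERT INTO bookmarks VALUES (?, ?, ?)",
                    [(user, url, " ".join(tags)) for url, tags in items],
                )
            page += 1
    return conn
```

Passing `sleep` and `fetch_page` as parameters makes it easy to try the loop without touching the network; once the data is in the DB, a plain `SELECT` is enough to export or mine it.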

Of course, it's far from being perfect:

- code is still quite messy: probably a more modular version would be easier to update (perl coders willing to give a hand are welcome, of course!)

- I haven't tried the "distributed version" yet, so it just works in theory ;-)

- it's sloooow, especially compared to the huge size of del.icio.us: at the beginning of this month, they said they had about 1.5 million users, and I don't believe that a single client will be able to get much more than a few thousand users per day (but do you need more?)

- the way it is designed, the database grows quite quickly and interesting queries won't be very fast if you download many users (DB specialists willing to give a hand are welcome, of course!)

- the program lacks a function to harvest users, so you have to provide the list of users you want to download manually. Actually, I made mine with another scraper but I did not want to provide, well, both the gun and the bullets to everyone. I'm sure someone will have something to say about this, but hey, it takes you less time to write your ad-hoc scraper than to add an angry comment here, so don't ask me to give you mine

That's all. You'll find the source code here, have phun ;)

Perl Hacks: K-Means

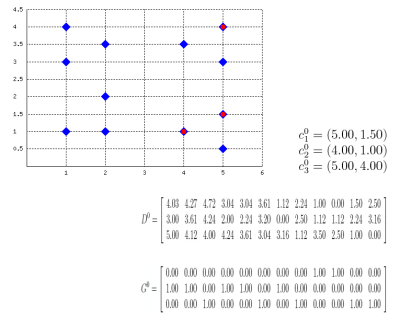

Well, this probably isn't a best-selling app, but it might be useful for some who, like me, have to explain how the k-means clustering algorithm works or prepare exercises about it. It also works as an example of how to embed LaTeX formulas inside images with Perl: the script draws the plane (with points and centroids) inside an image, then generates LaTeX formulas which describe how the algorithm evolves, compiles them into images with tex2im and embeds them inside the main picture. The final output is made of many different pictures, one for each step of the algorithm, like the one shown above.

Of course, the script is still far from perfect but (again, of course) the source code is provided so you can change, correct or improve it. To run it you will also need tex2im, which is downloadable here (a big THANK YOU to Andreas Reigber who created this nice shell script), and of course a working LaTeX installation.
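For those who just want to see the algorithm the pictures illustrate, here is a minimal Python sketch of the standard k-means iteration (assign each point to its nearest centroid, then move each centroid to the mean of its points). It is not the Perl script: it does no drawing and no LaTeX, and the function name `kmeans_steps` is made up for this example. It yields one result per step, which is roughly what you would need to render one picture per step.

```python
import math


def kmeans_steps(points, centroids, max_iter=100):
    """Yield (assignments, centroids) after every k-means iteration."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for each point.
        assignments = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its points.
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, a in zip(points, assignments) if a == k]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centroids.append(old)  # an empty cluster stays where it is
        yield assignments, new_centroids
        if new_centroids == centroids:
            break  # nothing moved: the algorithm has converged
        centroids = new_centroids
```

Calling `list(kmeans_steps(points, initial_centroids))` gives the whole evolution, with the last element holding the final clusters.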

Perl Hacks: more sudokus

Hi all,

incredibly, I got messages asking for more sudokus: aren't you satisfied with the ones harvested from Daily Sudoku? Well... once a script like SukaSudoku is ready, the only work left is to write a new wrapper for another website! Let's take, for instance, the Number Logic site: here's a new Perl script which inherits most of its code from SukaSudoku and extracts information from Number Logic. Just copy it over the old "suka.pl" file and double the number of sudokus to play with.

Perl Hacks: quotiki bot

I like quotiki. I like fortunes. Why not make a bot which downloads a random quote from quotiki and shows it on my computer? Here's a little perl bot which does exactly this. It's very short and easy, so I guess this could be a good starting point for beginners.

And, by the way:

"Age is an issue of mind over matter. If you don't mind, it doesn't matter."

-- Mark Twain

;)

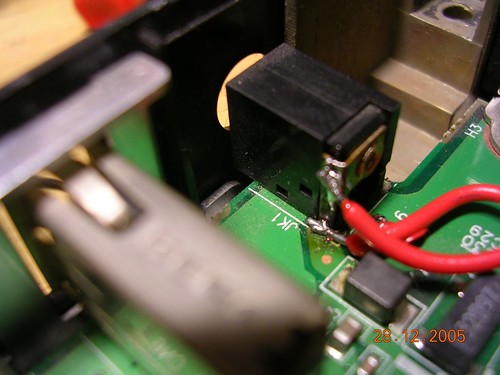

Travelmate 514TXV repair

After about one year, I decided to put online some photos which show how I repaired my old Travelmate laptop, hoping they will be useful for someone who has had the same problem (or who just wants to see what a naked laptop looks like ;)).

The problem I had was a power connector which, after some years of usage, started to shift slightly from its position until it broke its solder joints with the motherboard. As the contact with the mobo became loose, it started to charge/discharge my battery continuously (in fact, the battery lasts about 5 minutes now) and then to let the computer switch off when the battery was too low.

Fortunately, no component was really broken so it was quite easy to repair: the hardest part was figuring out how to open the notebook and reach the part I needed to solder. The whole process is described here through photos.

Perl Hacks: Google search

I'm at page 14 inside Google results for the search string "perl hacks". Well, at least in google.it which is where I'm automatically redirected...

Who cares? Well, not even me, I was just curious about it. How did I find out? Well, automatically of course, with a Perl bot!

Here it is. You call it passing two parameters from the command line: the first one is the search string (e.g. "\"perl hacks\""), the second one is (part of) the title of the page (e.g. "mala::home"). The program then connects to Google, runs the search and finds the results page on which the second string occurs.

Probably someone noticed the filename ends with "02". Is there a "01" out there too? Of course there is: it's a very similar version which doesn't search only inside titles but also in the whole snippet of text related to the search result. Less precise, but a little more flexible.
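The core of both versions is just a loop over result pages. Here is a hedged Python sketch of that loop (not the Perl bot): it assumes the results have already been downloaded and parsed into pages of `(title, snippet)` pairs, and the function name, the `search_snippets` flag and the case-insensitive match are my own choices for the example.

```python
def find_page(result_pages, needle, search_snippets=False):
    """Return the 1-based number of the first page containing needle, or None.

    result_pages is an iterable of pages, each a list of (title, snippet)
    pairs. With search_snippets=False only titles are checked (like the
    "02" version); with True the snippet text is checked too (like "01").
    """
    needle = needle.lower()  # case-insensitive matching is an assumption
    for number, results in enumerate(result_pages, start=1):
        for title, snippet in results:
            haystack = f"{title} {snippet}" if search_snippets else title
            if needle in haystack.lower():
                return number
    return None
```

Since `result_pages` can be a generator, a real bot could fetch one page at a time and stop as soon as the string is found.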

Perl Hacks: Googleicious!

At last, here's my little googleicious, my first attempt at merging google results with del.icio.us tags. I did it some months ago to see if I could automatically retrieve information from del.icio.us. Well... it's possible :)

What does this script do? It takes a word (or a collection of words) from the command line and searches for it on Google; then it takes the search results, extracts the URLs from them and looks them up on del.icio.us, showing the tags that have been used to classify them. Even if the script is quite simple, there are many details you can infer from its output:

- you can see how many top results in Google are actually interesting for people

- you can use tags to give meaning to some obscure words (try for instance ESWC and you'll see it's a conference about semantic web, or screener and you'll learn it's not a term used only by movie rippers)

- starting from the most used tags returned by del.icio.us, you can search for similar URLs which haven't been returned by Google
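The merging step can be sketched in a few lines of Python (again, this is not the Perl script). The `lookup_tags` callable stands in for whatever retrieves the tags of a URL from del.icio.us, and is purely hypothetical here; the sketch only shows how per-URL tags and overall tag counts could be put together.

```python
from collections import Counter


def tag_results(urls, lookup_tags):
    """Pair every search-result URL with its tags and count tag usage.

    lookup_tags(url) is a hypothetical callable returning the tags people
    used for that URL (an empty list if nobody bookmarked it).
    """
    tagged = {url: list(lookup_tags(url)) for url in urls}
    totals = Counter(tag for tags in tagged.values() for tag in tags)
    return tagged, totals
```

URLs with an empty tag list are the results nobody bothered to bookmark, while `totals.most_common()` gives the most used tags to start new searches from.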

Now some notes:

- I believe this is very near to a limit both Google and del.icio.us don't want you to cross, so please behave politely and avoid bombing them with requests

- For the very same reason, this version is quite limited (it just takes the first page of results), but it also lacks some of the controls I put in later versions, such as a sleep time to avoid request bursts. So, again, be polite please ^__^

- I know that probably one zillion people have already used the term googleicious and I don't want to be considered as its inventor. I just invented the script, so if you don't like the name just call it foobar or however you like: it will run anyway.

Ah, yes, the code is here!

Bibsonomy

As you have probably already noticed just by taking a look at my About page, I'm using Bibsonomy as an online tool to manage bibliographies for my (erm... future?) papers. If you use BibTeX (or EndNote) for your bibliographies this is quite a useful tool. With it you can:

- Save references to the papers/websites you have read all in one place

- Save all the bibliography-related information in a standard, widely used format

- Tag publications: this doesn't only mean you can easily find them later, but also that you can share them with others, find new ones, find new people interested in the same stuff and so on (soon: an article about tags and folksonomies, I promise)

- Easily export publication lists in BibTeX format, ready to include in your papers

This export feature is particularly useful: you can manage your bibliographies directly on the website and then export them (or a part of them) in a few clicks. Even better: you can do that without clicking at all! Try this:

wget "http://www.bibsonomy.org/bib/user/deynard?items=100" -O bibs.bib

You've just downloaded my whole collection inside one "bibs.bib" file, ready to be included in your LaTeX document. The items parameter allows you to specify how many publications you want to download: yes, it sounds silly (why would you want only the first n items?)

Of course, if you like you can just download items tagged as "tagging" with this URL:

http://www.bibsonomy.org/bib/user/deynard/tagging

You can join tags like in this URL:

http://www.bibsonomy.org/bib/user/deynard/tagging+ontology

And of course, you can substitute wget with lynx:

lynx -dont_wrap_pre -dump "http://www.bibsonomy.org/bib/user/deynard?items=100" > bibs2.bib
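If you'd rather build these URLs from a script, here is a small Python sketch that follows the three URL patterns shown above. The function name `export_url` is made up, and combining a tag path with the items parameter in the same URL is an assumption: I've only shown them separately here.

```python
from urllib.parse import quote, urlencode

BASE = "http://www.bibsonomy.org/bib/user"


def export_url(user, tags=(), items=None):
    """Build a BibTeX export URL for a user, optionally filtered by tags."""
    url = f"{BASE}/{quote(user)}"
    if tags:
        # Several tags are joined with "+", as in the tagging+ontology example.
        url += "/" + "+".join(quote(tag) for tag in tags)
    if items is not None:
        # Assumption: the items parameter also works together with tags.
        url += "?" + urlencode({"items": items})
    return url
```

The resulting string can be handed to wget or lynx exactly as above, or downloaded directly with `urllib.request.urlretrieve(export_url("deynard", items=100), "bibs.bib")`.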

Well, that's all for now. If you happen to find any interesting hacks lemme know ;)

Perl Hacks: SukaSudoku!

Hi everybody :)

As I decided to post one "perl hack" each week, and as after two posts I lost my inspiration, I've decided to recycle an old project which hasn't been officially published yet. Its name is sukasudoku, and as the name implies it sucks sudokus from a website (http://www.dailysudoku.com) and saves them on your disk.

BUT...

Hah! It doesn't just "save them on your disk", but creates a nice book in PDF with all the sudokus it has downloaded. Then you can print the book, bind it and either play your beloved sudokus wherever you want or give it to your beloved ones as a beautiful, zero-cost Xmas gift.

Of course, the version I publish here is limited, just to avoid having every script kiddie download the full archive without effort. But you have the source, and you have the knowledge. So what's the problem?

The source file is here for online viewing, but to have a working version you should download the full package. In the package you will find:

- book and clean, two shell scripts to create the book from the LaTeX source (note: of course, you need latex installed in your system to make it work!) and to remove all the junk files from the current dir

- sudoku.sty, the LaTeX package used to create sudoku grids

- suka.pl, the main script

- tpl_sudoku.tex, the template that will be used to create the latex document containing sudokus
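To show what "filling the template" might boil down to, here is a small Python sketch that turns one grid into LaTeX. It assumes sudoku.sty is the sudoku package commonly found on CTAN, whose `sudoku` environment takes one `|`-separated line per row ending with a dot; the function name `grid_to_latex` and the use of 0 for empty cells are assumptions of this example, and the real suka.pl and tpl_sudoku.tex may work differently.

```python
def grid_to_latex(grid):
    """Render a 9x9 grid (0 = empty cell) as a LaTeX sudoku environment."""
    lines = [r"\begin{sudoku}"]
    for row in grid:
        # Empty cells become a single space between the bars.
        cells = "|".join(str(digit) if digit else " " for digit in row)
        lines.append(f"|{cells}|.")
    lines.append(r"\end{sudoku}")
    return "\n".join(lines)
```

One such block per downloaded sudoku, pasted into the template, is all the book script would then need to compile.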