Perl Hacks: automatically get info about a movie from IMDB

Ok, I know the title sounds like "hey, I found an easy API and here's how to use it"... well, the post is not much more advanced than this but 1) it does not talk about an API but rather about a Perl package and 2) it is not so trivial, as it does not deal with clean, precise movie titles, but rather with another kind of use case you might happen to witness quite frequently in real life.

Yes, we are talking about pirate video... and I hope that writing this here will bring some kids to my blog searching for something they won't find. Guys, go away and learn to search stuff the proper way... or stay here and learn something useful ;-)

So, the real life use case: you have a directory containing videos you have downloaded or you have been lent by a friend (just in case you don't want to admit you have downloaded them ;-). All the titles are like

The.Best.Movie.Ever(2011).SiLeNT.[dvdrip].md.xvid.whatever_else.avi

and yes, you can easily understand what the movie title is and automatically remove all the other crap, but how can a piece of software do this? And first of all, why should it?

Well, suppose you are not watching movies from your computer, but rather from a media center. It would be nice if, instead of having a list of ugly filenames, you were presented with a list of film posters, with their correct titles and additional information such as length, actors, etc. Moreover, if the information you have about your video files is structured, you can easily manage your movie collection, automatically categorizing the files by genre, director, year, or whatever you prefer, thus finding them more easily. Ah, and of course you might just be interested in a tool that automatically renames files so that they comply with your naming standards (I know, nerds' life is a real pain...).

What we need, then, is a tool that links a filename (that we suppose contains at least the movie title) to, basically, an id (like the IMDB movie id) that uniquely identifies that movie. Then, from IMDB itself we can get a lot of additional information. The steps we have to perform are two:

- convert an almost unreadable filename into something similar to the original movie title

- search IMDB for that movie title and get information about it

Of course, I expect that not all the results are going to be perfect: filenames might really be too messy or there could be different movies with the same name. However, I think that having a tool that gives you a big help in cleaning your movie collection might be fine even if it's not perfect.

Let's start with point 1: filename cleaning. First of all, you need to have an idea of how files are actually named. To find enough examples, I just did a quick search on Google for "divx dvdscr dvdrip xvid filetype:txt": it is surprising how many file lists you can find with such a small effort :) Here are some examples of movie titles:

American.Beauty.1999.AC3.iNTERNAL.DVDRip.XviD-xCZ

Dodgeball.A.True.Underdog.Story.SVCD.TELESYNC-VideoCD

Eternal.Sunshine.Of.The.Spotless.Mind.REPACK.DVDSCr.XViD-DvP

and so on. All the additional keywords that you find together with the movie's title are actually useful, as they describe the quality of the video (e.g. svcd vs dvdrip or screener), the group that created it, and so on. However, we currently don't need them, so we have to find a way to remove them. Moreover, a lot of junk such as punctuation and parentheses is added to most of the file names, so it is very difficult to tell where the movie title ends and the rest begins.

To clean this mess, I first filtered everything so that only alphanumeric characters are kept and everything else is considered a separator (this is the equivalent of the tokenizing phase of a document indexer). Then I built a list of stopwords that need to be automatically removed from the filename. The list has been built from the very same file I got the titles from (you can find it here or, if the link is dead, download the file ALL_MOVIES here). Just by counting the occurrences of each word with a simple Perl script, I came up with a huge list of terms I could easily remove: to give you an idea, here's an excerpt of all the terms that occur at least 10 times in that file:

```text
129 -> DVDRip
119 -> XviD
118 -> The
 73 -> XViD
 35 -> DVDRiP
 34 -> 2003
 33 -> REPACK DVDSCR
 30 -> SVCD the
 24 -> 2
 22 -> xvid
 20 -> LiMiTED
 19 -> DVL dvdrip
 18 -> of A DMT
 17 -> LIMITED Of
 16 -> iNTERNAL SCREENER
 14 -> ALLiANCE
 13 -> DiAMOND
 11 -> TELESYNC AC3 DVDrip
 10 -> INTERNAL and VideoCD in
```
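A count like the one above can be obtained with very little code. The following is only a minimal sketch, not the script I actually used: the function name `countTokens` and the in-memory sample list are assumptions made for illustration, and a real run would read the lines from the title file instead.

```perl
use strict;
use warnings;

# Count how often each token occurs in a list of release names.
# Tokens are runs of alphanumeric characters; case is preserved, which is
# why "XviD" and "XViD" show up as separate entries in the excerpt.
sub countTokens {
    my @lines = @_;
    my %count;
    foreach my $line (@lines) {
        $count{$1}++ while $line =~ /([a-zA-Z0-9]+)/g;
    }
    return \%count;
}

# Hypothetical sample data: the three release names quoted earlier.
my @titles = (
    'American.Beauty.1999.AC3.iNTERNAL.DVDRip.XviD-xCZ',
    'Dodgeball.A.True.Underdog.Story.SVCD.TELESYNC-VideoCD',
    'Eternal.Sunshine.Of.The.Spotless.Mind.REPACK.DVDSCr.XViD-DvP',
);

my $count = countTokens(@titles);

# Print tokens by decreasing frequency, ties in alphabetical order.
foreach my $token (sort { $count->{$b} <=> $count->{$a} || $a cmp $b } keys %$count) {
    printf "%d -> %s\n", $count->{$token}, $token;
}
```

Lowercasing the tokens before counting would merge the different spellings, which is what the blacklist lookup does later anyway.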

As you can see, most of the frequent terms are actually stopwords that can be safely removed without worrying too much about the original titles. I started with this list and then manually added new keywords I found after the first tests. The results are in my blacklist file. Finally, I decided to apply another heuristic to clean filenames: every time a sequence of tokens ends with a year (starting with 19 or 20), chances are that it is just the movie's release year and not part of the title; thus, it has to be removed too. Of course, if the title is made up of only that year (e.g. 2010) it is kept. Summarizing, the Perl code used to clean filenames is just the following:

```perl
sub cleanTitle {
    my ($dirtyTitle, $blacklist) = @_;
    my @cleanTitleArray;

    # HEURISTIC #1: everything which is not alphanumeric is a separator;
    # HEURISTIC #2: if an extracted word belongs to the blacklist then ignore it;
    while ($dirtyTitle =~ /([a-zA-Z0-9]+)/g) {
        my $word = lc($1);    # blacklist is lowercase
        if (!defined $$blacklist{$word}) {
            push @cleanTitleArray, $word;
        }
    }

    # HEURISTIC #3: often movies have a date (year) after the title, remove
    # that (if it is not the title of the movie itself!). The pattern is
    # anchored so that only a word which is exactly a year is dropped, and
    # the size check avoids touching an empty or single-word title.
    if (@cleanTitleArray > 1 && $cleanTitleArray[-1] =~ /^(?:19|20)\d\d$/) {
        pop @cleanTitleArray;
    }

    return join(" ", @cleanTitleArray);
}
```
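To see the three heuristics at work, here is a small self-contained sketch. It uses a compact re-implementation of the cleaning routine (repeated only so that the snippet runs on its own) and a tiny hand-made blacklist: both are assumptions for illustration, as the real blacklist is read from the blacklist file and is much longer.

```perl
use strict;
use warnings;

# Compact version of the three heuristics: tokenize on non-alphanumeric
# characters, drop blacklisted words, drop a trailing year.
sub cleanTitle {
    my ($dirtyTitle, $blacklist) = @_;
    my @words = grep { !exists $blacklist->{$_} }
                map  { lc($_) } ($dirtyTitle =~ /([a-zA-Z0-9]+)/g);
    # Drop a trailing year unless it is the whole title.
    pop @words if @words > 1 && $words[-1] =~ /^(?:19|20)\d\d$/;
    return join(" ", @words);
}

# Hypothetical blacklist, just large enough for the examples below.
my %blacklist = map { $_ => 1 } qw(
    dvdrip xvid ac3 internal svcd telesync videocd repack dvdscr
    xcz dvp silent md avi whatever else
);

foreach my $name (
    'American.Beauty.1999.AC3.iNTERNAL.DVDRip.XviD-xCZ',
    'Dodgeball.A.True.Underdog.Story.SVCD.TELESYNC-VideoCD',
    'The.Best.Movie.Ever(2011).SiLeNT.[dvdrip].md.xvid.whatever_else.avi',
    '2010.DVDRip.XviD',
) {
    printf "%-70s => %s\n", $name, cleanTitle($name, \%blacklist);
}
```

Note that the year is removed only when it ends up as the last surviving word, so it disappears only if every keyword after it is in the blacklist.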

Step 2, instead, consists in sending the query to IMDB and getting information back. Fortunately this is a pretty trivial step, given we have IMDB::Film available! The only thing I had to modify in the package was the function that returns alternative movie titles: it was not working and I also wanted to customize it to return a hash ("language"=>"alt title") instead of an array. I started from an existing patch to the (currently) latest version of IMDB::Film that you can find here, and created my own Film.pm patch (note that the patch has to be applied to the original Film.pm and not to the patched version described in the bug page).

Access to IMDB methods is already well described in the package page. The only addition I made to my script was the getAlternativeTitle function, which gets all the alternative titles for a movie and returns, in order of priority, the Italian one if it exists, otherwise the international/English one. This is the code:

```perl
sub getAlternativeTitle {
    my $imdb = shift;
    my $altTitle = "";
    my $aka = $imdb->also_known_as();

    # Keys are sorted so that the choice does not depend on hash ordering.
    foreach my $key (sort keys %$aka) {
        # NOTE: currently the default is to return the Italian title,
        # otherwise fall back to the first occurrence of International
        # or English. Change below here if you want to customize it!
        if ($key =~ /^Ital/) {
            $altTitle = $$aka{$key};
            last;
        } elsif ($altTitle eq "" && $key =~ /^(International|English)/) {
            $altTitle = $$aka{$key};
        }
    }

    return $altTitle;
}
```
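If you want to customize the priority without touching the regular expressions, the same idea can be written against a plain hash with a list of preferred language prefixes. This is just a sketch: `pickAlternativeTitle` and the sample data are hypothetical, and the hash only mimics the "language" => "alt title" shape returned by my patched also_known_as.

```perl
use strict;
use warnings;

# Pick an alternative title from a hash ("language" => "alt title"),
# trying each language prefix in order of preference.
sub pickAlternativeTitle {
    my ($aka, @preferred) = @_;
    foreach my $prefix (@preferred) {
        # Sort the keys so the result does not depend on hash ordering.
        foreach my $key (sort keys %$aka) {
            return $aka->{$key} if index($key, $prefix) == 0;
        }
    }
    return "";    # nothing matched
}

# Illustrative sample data, not fetched from IMDB.
my %aka = (
    'Italy'                         => 'Se mi lasci ti cancello',
    'International (English title)' => 'Eternal Sunshine of the Spotless Mind',
    'Germany'                       => 'Vergiss mein nicht!',
);

print pickAlternativeTitle(\%aka, 'Ital', 'International', 'English'), "\n";
```

Unlike the original function, this variant ranks "International" strictly before "English"; swap the arguments if you prefer the opposite.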

So, that's basically all. The script has been built to accept only one parameter on the command line. For testing, if the parameter is recognized as an existing filename, the file is opened and parsed for a list of movie titles; otherwise, the string is considered as a movie title to clean and search.
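The "file or title" behaviour of the command line parameter can be sketched as follows. This is not the code from mt.pl: `titlesFromArgument` is a hypothetical helper, and I assume here that the file contains one title per line.

```perl
use strict;
use warnings;

# Return the list of titles to process for a given command-line argument:
# the lines of the file if the argument names an existing file,
# otherwise the argument itself.
sub titlesFromArgument {
    my ($arg) = @_;
    return ($arg) unless -f $arg;

    open(my $fh, '<', $arg) or die "Cannot open $arg: $!";
    chomp(my @titles = <$fh>);
    close($fh);
    return grep { /\S/ } @titles;    # skip empty lines
}

# When run as a script with exactly one parameter, list the titles found.
if (@ARGV == 1) {
    print "$_\n" foreach titlesFromArgument($ARGV[0]);
}
```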

Testing has been very useful to understand whether the tool was working well or not. The precision is pretty good, as you can see from the attached file output.txt (if anyone wants to calculate the percentage of right movies... well, you are welcome!). However, I suspect it is kind of biased towards this list, as it is the same one I took the stopwords from. If you have time to waste and want to work on a better, more complete stopword list, I think you have already understood how easy it is to create one... please make it available somewhere! :-)

The full package, with the original script (mt.pl) and its data files is available here. Enjoy!

I’m Posting every week in 2011!

I’ve decided I want to blog more. Rather than just thinking about doing it, I’m starting right now. I will be posting on this blog once a week for all of 2011.

I know it won’t be easy, but it might be fun, inspiring, awesome and wonderful. Therefore I’m promising to make use of The DailyPost, and the community of other bloggers with similar goals, to help me along the way, including asking for help when I need it and encouraging others when I can.

If you already read my blog, I hope you’ll encourage me with comments and likes, and good will along the way.

Signed,

Davide

p.s. Well, copying and pasting this initial post was very easy, let's see how it works with the following ones ;-)

The cookie jar metaphor

Searching for "cookie jar metaphor" on Google you will find the well known "hand in the cookie jar" one, so coming up with a new metaphor that relies on the same concept might sound pretty ambitious... However, it came to my mind like this and I do not want to change it, no matter if it will be drowned in an ocean of unrelated information.

The thing is: suppose I have been using a cookie jar to save my money for a while and now I have collected a rather good amount of it. One day I decide I want to give my money to those people who really need it and start sharing the contents of my cookie jar, so I open it, I put it on the table in my kitchen, I put a sign next to it saying "Free money, take as much as you need", then I leave my door unlocked so anyone could enter and get that money.

Would that money be really shared with others? Would that be shared with others who need it?

I have been writing free code basically since I started writing code. Every time I released a new piece of software (well, usually not amazing apps) I uploaded it somewhere and put a link on my website. Well, for a while my website has been a hacker's challenge closed to search engines and most Internet users :-), but apart from that I have nevertheless tried to share my discoveries in one way or another. When I started to do research, I tried to do the same with my discoveries, tools, and datasets. I actually had a chance to share some of my stuff with some people and every time it has been a win-win situation, as I learned from others at least as much as they did from me.

What I have learned, however, is that most of the time I have just opened my cookie jar on the table, not being able to reach many people outside, and most of all not being able to reach the ones who might have been most interested in what I was doing. I always thought that pushing my discoveries and accomplishments too much was more like showing off than sharing what I did, and supposed that if anyone was interested in the same things then they would probably have found my work anyway.

Well, I guess I was SO wrong about this. Telling people around that I have that open cookie jar is my duty if what I want to do is share the money I have collected in it. So I think that this kind of communication becomes part of free software as much as writing code, and part of research as much as studying others' work and writing papers about yours.

So now here's the problem: how to share my stuff more effectively? I guess by being more active within specific communities (those who might get the most out of what I share), using other channels to communicate (e.g. I sometimes tweet about my blog updates, I guess I'll do that more often - do you have #hashtags to suggest?), but most of all finding the time to do all of this.

Are you doing this? If so, do you have any comments or suggestions? Any feedback is welcome, either here or by any other electronic or real life means ;-)

New (old) paper: “On the use of correspondence analysis to learn seed ontologies from text”

Here is another work done in the last year(s), and here is its story. In January 2009, as soon as I finished my PhD, I was put in contact with a company searching for people to implement Fionn Murtagh's Correspondence Analysis methodology for the automatic extraction of ontologies from text. After clarifying my position about it (that is, that what was extracted were just taxonomies and that I thought the process should become semi-automatic), I started a 10-month project at my university, officially funded by that company. I say "officially" because, while I regularly received my paycheck each month from the university, the company does not seem to have paid yet, almost two years after the beginning of the project. Well, I guess they are probably just late and I am sure they will eventually do that, right?

By the way, the project was interesting even if it started as just the development of someone else's approach. The good point is that it gave us some interesting insights into how ontology extraction from text works in practice, which real-world problems you have to face and how to address them. And the best thing is that, after the end of the project, we found we had enough enthusiasm (and most importantly a Master's student, Fabio Marfia... thanks! ;)) to continue it.

Fabio has done great work, taking the tool I had developed, expanding it with new functionalities, and testing them with real-world examples. The outcome of our work, together with Fabio's graduation of course (you can find material about his thesis here), is the paper "On the use of correspondence analysis to learn seed ontologies from text" we wrote together with Matteo Matteucci. You can find the paper here, while here you can download its poster.

The work is not finished yet: there are still some aspects of the project that we would like to delve deeper into and there are still things we have not shared about it. It is just a matter of time, however, so stay tuned ;-)

Perl Hacks: infogain-based term cloud

This is one of the very first tools I developed in my first year as a post-doc. It took a while to publish it as it was not clear what I could disclose of the project that was funding me. Now the project has ended and, after more than one year, the funding company still has not funded anything ;-) Moreover, this is something very far from the final results that we obtained, so I guess I can finally share it.

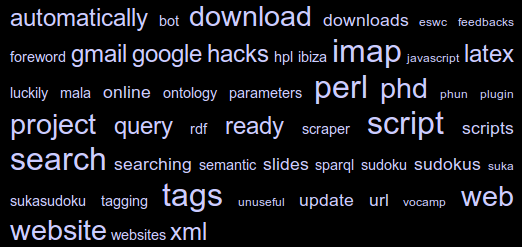

Rather than a real research tool, this is more like a quick hack that I built up to show how we could use Information Gain to extract "interesting" words from a collection of documents, and term frequencies to show them in a cloud. I have called it a "term cloud" because, even if it looks like the well-known tag clouds, it is not built up with tags but with terms that are automatically extracted from a corpus of documents.

The tool is called "rain" as it is based on rainbow, an application built on top of the "bow" libraries that performs statistical text classification. The basic idea is that we use two sets of documents: the training set is used to instruct the system about what can be considered "common knowledge"; the test set is used to provide documents about the specific domain of knowledge we are interested in. The result is that the words which are most likely to discriminate the test set from the training one are selected, and their occurrences are used to build the final cloud.
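To give an idea of what "discriminating" means here, this is a minimal sketch of Information Gain computed for a single term over the two sets. It is the textbook definition based on how many documents contain the term, not a copy of what rainbow does internally (I have not checked its exact formula), and the function names and counts are made up for illustration.

```perl
use strict;
use warnings;

# Shannon entropy (in bits) of a list of counts.
sub entropy {
    my @counts = @_;
    my $total = 0;
    $total += $_ foreach @counts;
    return 0 unless $total > 0;
    my $h = 0;
    foreach my $c (@counts) {
        next unless $c > 0;
        my $p = $c / $total;
        $h -= $p * log($p) / log(2);
    }
    return $h;
}

# Information gain of a term with respect to the class (training vs test).
#   $withTrain, $withTest : documents of each set containing the term
#   $nTrain, $nTest       : total documents in each set
sub infoGain {
    my ($withTrain, $withTest, $nTrain, $nTest) = @_;
    my $n = $nTrain + $nTest;
    return 0 unless $n > 0;
    my $with    = $withTrain + $withTest;
    my $without = $n - $with;

    my $hClass   = entropy($nTrain, $nTest);
    my $hWith    = entropy($withTrain, $withTest);
    my $hWithout = entropy($nTrain - $withTrain, $nTest - $withTest);

    # Class entropy minus the entropy left once we know whether the term occurs.
    return $hClass - ($with / $n) * $hWith - ($without / $n) * $hWithout;
}

# Hypothetical counts: 10 training and 10 test documents.
printf "%-10s %.3f\n", 'ontology', infoGain(0, 10, 10, 10);    # only in the test set
printf "%-10s %.3f\n", 'the',      infoGain(5, 5, 10, 10);     # evenly spread
```

A term found in every test document and in no training document gets the maximum gain (1 bit when the two sets have the same size), while a term spread evenly across both sets gets 0 and would never make it into the cloud.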

Everything is done within a pretty small Perl script, which does not do much more than call the rainbow tool (which has to be installed first!) and use its output to perform calculations and build an HTML page with the generated cloud. You can download the script from these two locations:

- here you can find a barebones version of the script, which only contains the script and a few test document collections. The tool works (that is, it does not return errors) even without training data, but will not perform well unless it is properly trained. You will also have to download and install rainbow before you can use it;

- here you can find an "all inclusive" version of the script. It is much bigger but it provides: the ".deb" file to install rainbow (don't be frightened by its release date, it still works with Ubuntu Maverick!), and a training set built by collecting all the posts from the "20_newsgroups" data set.

How can I run the rain tool?

Supposing you are using the "all inclusive" version, that is you already have your training data and rainbow installed, running the tool is as easy as typing

perl rain.pl <path_to_test_dir>

The script parameters can be modified within the script itself (see the following excerpt from the script source):

```perl
my $TERMS   = 50;    # size of the pool (top words by infogain)
my $TAGS    = 50;    # final number of tags (top words by occurrence)
my $SIZENUM = 6;     # number of size classes to be used in the HTML document,
                     # represented as different font sizes in the CSS

my $FIREFOX_BIN = '/usr/bin/firefox';    # path to browser binary
                                         # (if present, firefox will be called to open the HTML file)
my $RAINBOW_BIN = '/usr/bin/rainbow';    # path to rainbow binary

my $DIR_MODEL     = './results/model';       # used internally by rainbow
my $DIR_DATA      = './train';               # path to training dir
my $DIR_TEST      = $ARGV[0];                # path to test dir
my $FILE_STOPLIST = './stopwords.txt';       # stopwords file
my $FILE_TMPDATA  = './results/data.txt';    # file where data generated
                                             # by rainbow will be dumped

my $HTML_TEMPLATE = './template.html';          # template file used to generate the
                                                # tag-cloud html page
my $HTML_OUTPUT   = './results/output.html';    # final html page
```

As you can see, there are quite a lot of parameters but the script can also be run just out of the box: for instance, if you type

perl rain.pl ./test/folksonomies

you will see the cloud shown in Figure 1. And now, here is another term cloud built using my own blog posts as a text corpus:

New (old) paper: “GVIS: A framework for graphical mashups of heterogeneous sources to support data interpretation”

I know this is not a recent paper (it was presented in May), but I am slowly doing a recap of what I have done during the last year and this is one of the updates you might have missed. "GVIS: A framework for graphical mashups of heterogeneous sources to support data interpretation", by Luca Mazzola, me, and Riccardo Mazza, is the first paper (and definitely not the last, as I have already written another!) with Luca, and it has been great fun for me. We had a chance to merge our works (his modular architecture and my semantic models and tools) to obtain something new, that is the visualization of a user profile based on her browsing history and tags retrieved from Delicious.

Curious about it? You can find the document here (local copy: here) and the slides of Luca's presentation here.

One year of updates

If you know me, you also probably know I sometimes disappear for months and then come back saying "I'm still alive, these are the latest news, from now on you'll receive more regular updates".

Well, this time it's different: I'm still alive, I've got (even too) much news to tell you, but I cannot assure you there will be regular updates. This is due to one of the most important pieces of news I've got to tell you.

Almost one year ago (to be more precise, 10 months and one day ago) I became a father. This is the best thing that ever happened in my life, and the greatest thing is that it is going to last for a while ;-) Of course life has changed, sometimes becoming better and sometimes worse... but after I got accustomed to it I must say it's ok now ;-) Of course I think you NEVER get accustomed to it, but at least you realize it and start to live with it: it is something like knowing you do not know, you just get accustomed to the fact there's no way to get accustomed to almost anything anymore, as things will always, continuously change. Which is always good news for me.

Ok, nothing really technical here, but I hope that the few readers I have will be happy to know the good news. Other good ones (if you are more interested in the technical updates) are that I haven't been still and I actually have some things to tell you. Of course these require time, so if you are not patient enough to wait for my next update just send me an email (or call me, or invite me for a talk) and we can talk about this in person.

Take care :-)

Pirate Radio: Let your voice be heard on the Internet

[Foreword: this is article number 4 of the new "hacks" series. Read here if you want to know more about this.]

"I believe in the bicycle kicks of Bonimba, and in Keith Richards' riffs": for whoever recognizes this movie quote, it will be easy to imagine what you could feel when you speak on the radio, throwing in the air a message that could virtually be heard by anyone. Despite all the new technologies that came after the invention of the radio, its charm has remained the same; moreover, the evolution of the Internet has given us a chance to become deejays, using simple software and broadcasting our voice on the Net instead of using radio signals. So, why don't we use these tools to create our "pirate radio", streaming non-copyrighted music and information free from the control of the big media?

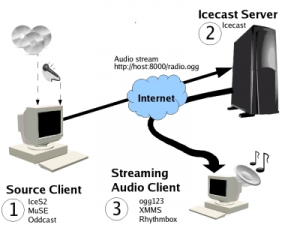

Figure 1: Streaming servers work as antenna towers for the signals you send them, broadcasting your streams to all the connected users.

Technical details

It is not too difficult to understand how a streaming radio works (see Figure 1): all you need is a streaming server, which receives an audio stream from your computer and makes this stream available to all its listeners. This is the most versatile solution and allows anyone, even with a simple 56kbps modem, to broadcast without bandwidth problems. The only limit is that you need a server to send your stream to: fortunately, many servers are available for free and it is pretty easy to find a list of them online (for instance, at http://www.radiotoolbox.com/hosts). A solution which is a little more complex but that allows you to be completely autonomous is to install a streaming audio server on your own machine (if you have one which is always connected to the Internet), so you'll be your own broadcaster. Of course, in this case the main limit is the bandwidth: an ADSL line is more than enough if you don't have many listeners, but you might need something more powerful if the number of listeners increases. While creating a Web radio is not a trivial task (we will actually need to set up a streaming server and an application to send the audio stream to it), listening to it is very easy: most of the audio applications currently available (e.g. Media Player, Winamp, XMMS, VLC) are able to connect and play an audio stream given its URL.

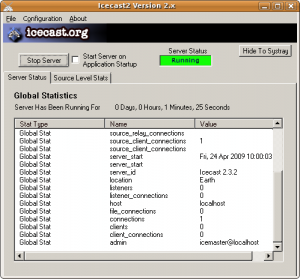

Install the software

The most famous audio streaming technologies are currently two: SHOUTcast (http://www.shoutcast.com) and Icecast (http://icecast.org). The first one is proprietary and the related software is closed source, even if it is distributed for free; the second one, instead, is based on an open source server and supports different third-party applications which are also distributed with free licenses. Even if SHOUTcast is somewhat easier to use (actually, the software is basically integrated within Winamp), our choice has fallen on Icecast as it is far more versatile. The Icecast server is available both for Windows and Linux: the Windows version has a graphical interface while the Linux one runs as a service; in both cases, the configuration can be managed through a text file called icecast.xml. Most of the settings can be left untouched; however, it is good practice to replace the default password (which is "hackme") with a custom one inside the authentication section. Once you have installed and configured your server you can run it, and it will start waiting for connections.
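As a reference, this is roughly what the authentication section of icecast.xml looks like. Take it as a sketch: the element names are the ones I remember from the sample configuration shipped with Icecast 2, the passwords are obviously placeholders, and you should check the file that comes with your own version before copying anything.

```xml
<icecast>
    <authentication>
        <!-- password used by source clients (e.g. DarkIce) to send a stream -->
        <source-password>choose-a-source-password</source-password>
        <!-- password used by relays -->
        <relay-password>choose-a-relay-password</relay-password>
        <!-- credentials for the web administration interface -->
        <admin-user>admin</admin-user>
        <admin-password>choose-an-admin-password</admin-password>
    </authentication>
</icecast>
```

The source password is the one you will have to type again in the client you use to send the stream to the server.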

The applications you can use to connect to Icecast to broadcast audio are many, and they differ in genre and complexity. Among the ones we tested, we consider the following the most interesting:

- LiveIce is a client that can be used as an XMMS plugin. Its main advantage is its simplicity: in fact, you just need to play mp3 files with XMMS to automatically send them to the Icecast server;

- OddCast, which is basically the equivalent of LiveIce for Winamp;

- DarkIce, a command line tool that directly streams audio from a generic device to the server. The application is at the same time mature and still frequently updated, and the system is known to be quite stable;

- Muse, a much more advanced tool, which is able to mix up to six audio channels and the "line in" of your audio card, and also to save the stream on your hard disk so you can reuse it (for instance creating a podcast);

- DyneBolic, finally, is a live Linux distribution which gives you all the tools you might need to create an Internet radio: inside it, of course, you will also find Icecast and Muse.

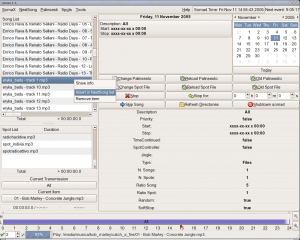

Manage the programs schedule

One of the main differences between an amateur radio and a professional one is the management of the programs schedule: the tools we have described till now, in fact, are not able to manage the programs depending on the current time or to play songs as a filler between different programs. Soma Suite is an application which can solve this problem, as it is able to create programs of different types: playlists (even randomly generated ones), audio streams (yours or taken from other radios), files, and so on.

Leave a trace on the Net

One of the main requirements for something worth calling a radio is to have listeners. However, how can we be heard if nobody knows us yet? Certainly not by announcing our IP address (maybe even a dynamic one!) every time we decide to stream. Luckily, there are a couple of solutions to this problem. The first one consists in advertising your radio inside some well-known lists: in fact, you can configure your Icecast server so that it automatically sends your current IP address to one of these lists whenever you run it. Icecast itself provides one of these listings, but of course you can choose different ones (even at the same time) to advertise your stream. The second solution consists in publishing your programs online using podcasts: this way, even those who could not follow you in real time will be able to get to know you and tune in at the right time to listen to your next transmission.

Now that you have a radio, what can you broadcast? Even if the temptation of playing copyrighted music regardless of what the majors think might be strong, why should we do what any other website (from YouTube to Last.fm) is already doing? Talk, let your ideas be heard by the whole world and, when you want to provide some good music, choose free alternatives such as the ones you find here and here.

All for a question mark

Hey, did you know that the "where" keyword in the "where" clause in SPARQL is optional? Yep, you can check it here!

What does this mean? Well, instead of writing something like

```sparql
select ?s ?p ?o where{
  ?s ?p ?o
}
```

you can write

```sparql
select ?s ?p ?o {
  ?s ?p ?o
}
```

So, what's the problem? Well, there is no problem... But what if you forget a space and write the following?

```sparql
select ?s ?p ?owhere {
  ?s ?p ?o
}
```

I guess you can understand what this means :) Of course it is very easy to spot this error, but what if you are building the query string like the following one?

```perl
$query = "select ?s ?p ?o";
$query .= "where {\n";
$query .= " ?s ?p ?o\n";
$query .= "}";
```

I know, I know, that is a quick and dirty way of doing it, and anyone who does this should pay the price. But what if a poor student forgets a \n in the concatenation, the system does not return any error, and all the objects you were asking for are not returned? Beware, friends, and don't repeat his mistake... ;-)
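If you really have to build the query as a string, a couple of cheap precautions go a long way. The sketch below (in Perl, with a hypothetical `unboundVariables` helper that is only a naive regular-expression check and not a SPARQL parser) joins the lines with an explicit separator and then verifies that every selected variable also appears in the graph pattern.

```perl
use strict;
use warnings;

# The fragile version from the text: nothing separates "?o" from "where".
my $buggy = "select ?s ?p ?o";
$buggy .= "where {\n";
$buggy .= " ?s ?p ?o\n";
$buggy .= "}";

# Joining the lines with an explicit separator removes the risk.
my $query = join("\n",
    'select ?s ?p ?o',
    'where {',
    '  ?s ?p ?o',
    '}',
);

# Return the selected variables that never appear in the graph pattern.
sub unboundVariables {
    my ($q) = @_;
    my ($head, $body) = split /\{/, $q, 2;
    $body = '' unless defined $body;
    my %inBody = map { $_ => 1 } ($body =~ /\?(\w+)/g);
    return grep { !$inBody{$_} } ($head =~ /\?(\w+)/g);
}

foreach my $q ($buggy, $query) {
    my @unbound = unboundVariables($q);
    print @unbound ? "suspicious variables: @unbound\n" : "query looks fine\n";
}
```

For the buggy string the check reports "owhere", which is exactly the variable that the missing separator has silently created.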

[thanks Sarp for providing me the chance to spot this error - it was a funny reversing exercise!]

Request for Comments: learn Internet standards by reading the documents that gave them birth

[Foreword: this is article number 3 of the new "hacks" series. Read here if you want to know more about this. A huuuuuge THANX to Aliosha who helped me with the translation of this article!]

A typical characteristic of hackers is the desire to understand -in the most intimate details- the way any machinery works. From this point of view, the Internet is one of the most interesting objects of study, since it offers a huge variety of concepts to be learnt: just think how many basic formats and standards it relies on... And all the nice hacks we could perform once we understand the way they work!

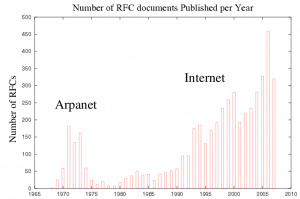

Luckily, most of these standards are published in freely accessible and easily obtainable notes: these documents are called RFCs (Requests for Comments), and have been used for almost 40 years to share information and observations regarding Internet formats, technologies, protocols and standards. The first RFC dates back to 1969, and since then more than 5500 have been published. Each one of them has to pass a difficult selection process led by the IETF (Internet Engineering Task Force), whose mission is -as described in RFC 3935 and 4677- to produce documents that influence the way people design, use, and "manage the Internet in such a way as to make the Internet work better."

Jon Postel, one of the authors of the first RFCs and contributor to the project for 28 years, has always typed on his keyboard using only two fingers :-)

The RFC format

In order to become an RFC, a technical document must above all follow a very strict standard. At first glance, it strikes us for its stark look: a simple text file with lines of at most 72 characters, exclusively formatted with standard ASCII chars. On second thought, it is easy to understand the reason for this choice: what format has not changed since 1969 and can be displayed on any computer, no matter how old it is or which OS it runs?

Despite the fact that many standards are now more than stable, the number of published RFCs keeps increasing.

Every RFC has a header with information that is especially important for the reader. In addition to title, date and authors, there is also the unique serial number of the document, the relations with preceding documents, and its category. For example (see figure below), the most recent RFC that describes the SMTP protocol is 5321, updating RFC 1123, making 2821 obsolete, belonging to the "Standards Track" category. Similarly, if we read that a document has been "obsoleted" by another, it is better to look for this other one, since it will contain more up to date information.
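Since the header is plain text with a fixed layout, extracting these fields automatically is easy. Here is a minimal Perl sketch: `parseRfcHeader` is a hypothetical helper, and the sample header is typed by hand after the first lines of RFC 5321, so treat its exact spacing as an assumption.

```perl
use strict;
use warnings;

# Extract number, category and relations from the first lines of an RFC.
sub parseRfcHeader {
    my ($text) = @_;
    my %info = (obsoletes => [], updates => []);
    foreach my $line (split /\n/, $text) {
        if ($line =~ /^Request for Comments:\s*(\d+)/) {
            $info{number} = $1;
        } elsif ($line =~ /^(Obsoletes|Updates):\s*([\d,\s]+)/) {
            # Copy the captures before running another match on them;
            # a header may list several RFC numbers separated by commas.
            my ($field, $list) = (lc $1, $2);
            $info{$field} = [ $list =~ /(\d+)/g ];
        } elsif ($line =~ /^Category:\s*(.+?)(?:\s{2,}|\s*$)/) {
            # The value stops at the right-hand column (two or more spaces).
            $info{category} = $1;
        }
    }
    return \%info;
}

# Sample header modeled on RFC 5321 (layout typed by hand).
my $header = <<'END';
Network Working Group                                         J. Klensin
Request for Comments: 5321                                  October 2008
Obsoletes: 2821
Updates: 1123
Category: Standards Track
END

my $info = parseRfcHeader($header);
printf "RFC %s (%s), obsoletes: %s, updates: %s\n",
    $info->{number}, $info->{category},
    join(", ", @{ $info->{obsoletes} }), join(", ", @{ $info->{updates} });
```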

The categories of RFCs are several, depending on the level of standardization reached by the described protocol or format at the moment of publication. The documents considered as the most official ones are split in three main categories: well-established standards (standard), drafts (draft) and standard proposals (proposed). There are also three non-standard classes, including experimental documents (experimental), informative ones (informational), and historical ones (historic). Finally, there is an "almost standard" category, containing the Best Current Practices (BCP), that is all those non official practices that are considered the most logical to adopt.

Finding the document we want

Now that we understand the meaning of RFC associated metadata (all those data not pertaining to the content of the document but to the document itself), we only have to take a peek inside the official IETF archive to see if there is information of interest for us. There are several methods to find an RFC: the first -and simplest- can be used when we know the document serial number and consists in opening the address http://www.ietf.org/rfc/rfcxxxx.txt, where xxxx is that number. For instance, the first RFC in computer history is available at http://www.ietf.org/rfc/rfc0001.txt.
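Building the address from the serial number is a one-liner. The sketch below follows the four-digit pattern described above; `rfcUrl` is a hypothetical helper, and whether the server accepts the zero-padded form for every document is an assumption based on the rfc0001.txt example.

```perl
use strict;
use warnings;

# Build the address of an RFC from its serial number, following the
# four-digit pattern used in the text (rfcxxxx.txt).
sub rfcUrl {
    my ($number) = @_;
    die "RFC number must be a positive integer\n"
        unless $number =~ /^\d+$/ && $number > 0;
    return sprintf("http://www.ietf.org/rfc/rfc%04d.txt", $number);
}

print rfcUrl(1), "\n";
print rfcUrl(5321), "\n";
```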

Another search approach consists in starting from a protocol name and searching for all the documents that are related to it. To do this, we can use the list of Official Internet Protocol Standards that is available at http://www.rfc-editor.org/rfcxx00.html. Inside this list you can find the acronyms of many protocols, their full names and the standards they are related to: for instance, IP, ICMP, and IGMP protocols are described in different RFCs but they are all part of the same standard (number 5).

Finally, you can search documents according to their status or category: at http://www.rfc-editor.org/category.html you can find an index of RFCs ordered by publication status and, for each section, updated documents appear as black while obsolete ones are red, together with the id of the RFC which obsoleted them.

The tools we have just described should be enough in most cases: in fact, we usually know at least the name of the protocol we want to study, if not even the number of the RFC where it is described. However, whenever we just have a vague idea of the concepts that we want to learn, we can use the search engine available at http://www.rfc-editor.org/rfcsearch.html. If, for instance, we want to know something more about the encoding used for mail attachments, we can just search for "mail attachment" and obtain as a result the list of titles of the RFCs which deal with this topic (in this case, RFC 2183).

What should I read now?

When you have an archive like this available, the biggest problem you have to face is the huge quantity of information: a life is not enough to read all of these RFCs! Search options, fortunately, might help in filtering away everything which is not interesting for us. However, which could be good starting points for our research?

If we don't know where to begin, having a look at the basic protocols is always a good way to start: you can begin from the easiest, higher-level ones, such as the ones regarding email (POP3, IMAP, and SMTP, already partially described here), the Web (HTTP), or other famous application-level protocols (FTP, IRC, DNS, and so on). Transport and network protocols, such as TCP and IP, are more complicated but not less interesting than the others.

If, instead, you are searching for something simpler you can check informative RFCs: they actually contain many interesting documents, such as RFC 2151 (A Primer On Internet and TCP/IP Tools and Utilities) and 2504 (Users' Security Handbook). April Fool's documents deserve a special mention, being funny jokes written as formal RFCs (http://en.wikipedia.org/wiki/April_Fools%27_Day_RFC). Finally, if you still have problems with English (so, why are you reading this? ;-)) you might want to search the Internet for RFCs translated into your language. For instance, at http://rfc.altervista.org, you can find the Italian version of the RFCs describing the most common protocols.