New year, new you

... starting from the blog theme. Of course, I just downloaded a ready-made one; otherwise, with my taste, you would probably have gotten something painful for your eyes ;-)

New year's resolutions? Plenty. But after last year's, my main resolution is no promises :-). And no creativity-killer posts: I'll try to stay far away from those topics I know will stop me from writing instead of encouraging me. I'll try to make this fun and useful, first of all for me. And if you find something useful here too, well, good for you ;-)

First post of the year, first after a long while... And to leave you with some more food for thought than you would get just by reading news about my WordPress themes, here you are:

Alon, Uri: "How To Choose a Good Scientific Problem". Molecular Cell, volume 35, issue 6, pp. 726-728. doi:10.1016/j.molcel.2009.09.013.

Here's the abstract:

"Choosing good problems is essential for being a good scientist. But what is a good problem, and how do you choose one? The subject is not usually discussed explicitly within our profession. Scientists are expected to be smart enough to figure it out on their own and through the observation of their teachers. This lack of explicit discussion leaves a vacuum that can lead to approaches such as choosing problems that can give results that merit publication in valued journals, resulting in a job and tenure."

I found the paper very inspiring and I agreed with most of it. Here are a few sentences I particularly liked:

- "A lab is a nurturing environment that aims to maximize the potential of students as scientists and as human beings."

- "The projects that a particular researcher finds interesting are an expression of a personal filter, a way of perceiving the world. This filter is associated with a set of values: the beliefs of what is good, beautiful, and true versus what is bad, ugly, and false."

- "... when one can achieve self-expression in science, work becomes revitalizing, self-driven, and laden with personal meaning."

What do you think about it? I think that this self-expression, this possibility of projecting my personal values onto my work, is one of the main reasons I chose to do it. Of course, this also constrains me somehow: what happens when I work with others? What if there is a clash of values between me and my collaborators? Finally, one last big question arises: how applicable is this to other jobs? Is there a chance for everyone to achieve this self-expression, or only for some? What about those who can't?

Ok, enough food for today ;-) One last link, which you might find interesting if you liked this paper too: Uri Alon Lab homepage, where you can find more materials for nurturing scientists.

Take care, have a great 2012!

Slides for “An integrated approach to discover tag semantics”

The slides of my presentation at SAC 2011 are available on SlideShare:

Just to give you an idea of what the presentation is about, here's an excerpt of the paper's abstract and the link to the paper itself.

New paper: An integrated approach to discover tag semantics

Antonina Dattolo, Davide Eynard, and Luca Mazzola. An Integrated Approach to Discover Tag Semantics. 26th Annual ACM Symposium on Applied Computing, vol. 1, pp. 814-820. Taichung, Taiwan, March 2011. From the abstract:

"Tag-based systems have become very common for online classification thanks to their intrinsic advantages such as self-organization and rapid evolution. However, they are still affected by some issues that limit their utility, mainly due to the inherent ambiguity in the semantics of tags. Synonyms, homonyms, and polysemous words, while not harmful for the casual user, strongly affect the quality of search results and the performances of tag-based recommendation systems. In this paper we rely on the concept of tag relatedness in order to study small groups of similar tags and detect relationships between them. This approach is grounded on a model that builds upon an edge-colored multigraph of users, tags, and resources. To put our thoughts in practice, we present a modular and extensible framework of analysis for discovering synonyms, homonyms and hierarchical relationships amongst sets of tags. Some initial results of its application to the delicious database are presented, showing that such an approach could be useful to solve some of the well known problems of folksonomies".

Paper is available here. Enjoy! ;)

Harvesting Online Content: An Analysis of Hotel Review Websites

A new paper is out:

Marchiori, E., Eynard, D., Inversini, A., Cantoni, L., Cerretti, F. (2011) Harvesting Online Content: An Analysis of Hotel Review Websites. In R. Law, M. Fuchs & F. Ricci (Eds.), Information and Communication Technologies in Tourism 2011 – Proceedings of the International Conference in Innsbruck, Austria (pp. 101-112). Wien: Springer.

Find the paper here ;)

WOEID to Wikipedia reconciliation

For a project we are developing at PoliMI/USI, we are using Yahoo! APIs to get data (photos and the tags associated with them) about a city. We thought it would be nice to also provide, together with this information, a link to or an excerpt from the Wikipedia page that matches the specific city. However, we found that matching Yahoo's WOEIDs with Wikipedia articles is far from trivial...

First of all, just two words on WOEIDs: they are unique, 32-bit identifiers used within Yahoo! GeoPlanet to refer to all geo-permanent named places on Earth. WOEIDs can be used to refer to differently sized places, from towns to countries or even continents (e.g. Europe is 24865675). A more in-depth explanation of this can be found in the Key Concepts page within GeoPlanet documentation, and an interesting introductory blog post with examples to play with is available here. Note, however, that you now need a valid Yahoo! application id to test these APIs (which means you should be registered in the Yahoo! developer network and then get a new appid by creating a new project).

One cool aspect of WOEIDs (as with other geographical ids, such as those of GeoNames) is that you can use them to disambiguate the name of a city you are referring to: for instance, you have Milan and you want to make sure you are referring to Milano, Italy and not to the city of Milan, Michigan. The two cities have two different WOEIDs, so when you are using one of them you know exactly which one of the two you are talking about. A similar thing happens when you search for Milan (or any other ambiguous city name) on Wikipedia: most of the time you will be automatically redirected to the most popular article, but you can always search for its disambiguation page (here is the example for Milan) and choose between the different articles that are listed inside it.

Of course, the whole idea of having standard, global, unique identifiers for things in the real world is a great one per se, and being able to use it for disambiguation is only one aspect of it. While disambiguation can be (often, but not always!) easy at the human level, where the context and the background of the people who communicate help them understand which entity a particular name refers to, this does not hold for machines. Having unique identifiers spares machines the need to disambiguate, but also allows them to easily link data between different sources, provided they all use the same standard for identification. And linking data, that is, making connections between things that were not connected before, is a first form of inference, a very simple but also very useful one that allows us to get new knowledge from what we originally had. Thus, what makes these unique identifiers really useful is not only the fact that they are unique. Uniqueness allows for disambiguation, but is not sufficient to link a data source to others. To do this, identifiers also need to be shared between different systems and knowledge repositories: the more the same id is used across knowledge bases, the easier it is to make connections between them.

What happens when two systems, instead, use different ids? Well, unless somebody decides to map the ids between the two systems, there are few possibilities of getting something useful out of them. This is the reason why the reconciliation of objects across different systems is so useful: once you state that their two ids are equivalent, then you can perform all the connections that you would do if the objects were using the same id. This is the main reason why matching WOEIDs for cities with their Wikipedia pages would be nice, as I wrote at the beginning of this post.
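The effect of stating that two ids are equivalent can be sketched in a few lines of Python. Everything in this sketch is an assumption made for illustration: the records are invented, and the `woeid:...` and `wiki:...` identifiers are made-up placeholders, not real WOEIDs or identifiers taken from either service.

```python
# Two toy data sources describing the same places under different ids.
# All ids and values below are hypothetical placeholders.
photos_by_woeid = {
    "woeid:milan-it": ["duomo.jpg", "navigli.jpg"],
    "woeid:milan-mi": ["main_street.jpg"],
}
abstract_by_article = {
    "wiki:Milan": "Milan is a city in northern Italy.",
    "wiki:Milan,_Michigan": "Milan is a city in Michigan, United States.",
}

# The reconciliation step: an explicit statement that two ids are equivalent.
same_as = {
    "woeid:milan-it": "wiki:Milan",
    "woeid:milan-mi": "wiki:Milan,_Michigan",
}


def link(photos, abstracts, mapping):
    """Join the two sources through the id mapping.

    Places without a mapping (or without an abstract) are skipped, which is
    what happens when nobody has reconciled the two ids yet.
    """
    linked = {}
    for source_id, files in photos.items():
        target_id = mapping.get(source_id)
        if target_id in abstracts:
            linked[source_id] = {"photos": files, "abstract": abstracts[target_id]}
    return linked


linked = link(photos_by_woeid, abstract_by_article, same_as)
print(linked["woeid:milan-it"]["abstract"])
```

With an empty mapping the same call returns nothing at all, which is the situation described above: two perfectly good data sources that cannot be connected.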

Wikipedia articles are already disambiguated (except, of course, for disambiguation pages) and their names can be used as unique identifiers. For instance, DBPedia uses article names as a part of its URIs (see, for instance, information about the entities Milan and Milan (disambiguation)). However, what we found is that there is no trivial way to match Wikipedia articles with WOEIDs: despite what others say on the Web, we found no 100% working solution. Actually, the ones that at least return something are pretty far from that 100% too: Wikilocation works fine with monuments or geographical elements but not with large cities, while Yahoo! APIs themselves have a direct concordance with Wikipedia pages, but according to the documentation this is limited to airports and towns within the US.

The solution to this problem is a mashup approach, feeding the information returned by a Yahoo! WOEID-based query to another data source capable of dealing with Wikipedia pages. The first experiment I tried was to query DBPedia, searching for articles matching Places with the same name and a geolocation contained in the boundingBox. The script I built is available here (remember: to make it work, you need to edit it and enter a valid Yahoo! appid) and performs the following SPARQL query on DBPedia:

SELECT DISTINCT ?page ?fbase WHERE {
  ?city a <http://dbpedia.org/ontology/Place> .
  ?city foaf:page ?page .
  ?city <http://www.w3.org/2003/01/geo/wgs84_pos#lat> ?lat .
  ?city <http://www.w3.org/2003/01/geo/wgs84_pos#long> ?long .
  ?city rdfs:label ?label .
  ?city owl:sameAs ?fbase .
  FILTER (?lat > "45.40736"^^xsd:float) .
  FILTER (?lat < "45.547058"^^xsd:float) .
  FILTER (?long > "9.07683"^^xsd:float) .
  FILTER (?long < "9.2763"^^xsd:float) .
  FILTER (regex(str(?label), "^Milan($|,.*)")) .
  FILTER (regex(?fbase, "http://rdf.freebase.com/ns/")) .
}

Basically, what it gets are the Wikipedia page and the Freebase URI for a place called "like" the one we are searching, where "like" means either exactly the same name ("Milan") or one which still begins with the specified name but is followed by a comma and some additional text (e.g. "Milan, Italy"). This is to take into account cities whose Wikipedia page name also contains the country they belong to. Some more notes are required to better understand how this works:

- I am querying for articles matching "Places" and not "Cities" because on DBPedia not all the cities are categorized as such (data is still not very consistent);

- I am matching rdfs:label for the name of the city, but unfortunately not all cities have such a property;

- requiring the Wikipedia article to have equivalent URIs linked through the owl:sameAs property is kind of strict, but I saw that most of the cities had at least one such URI, and most of the time it included the one from Freebase I was searching for.
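As a rough illustration of how the query above can be parameterized, here is a minimal Python sketch (the original script is in Perl and is not reproduced here). The names `build_query` and `label_matches` are mine, and the sketch assumes that the city name contains no quotes or regex metacharacters, so it is inserted into the query as-is.

```python
import re

# Same query as above, with the city name and bounding box left as placeholders.
QUERY_TEMPLATE = """SELECT DISTINCT ?page ?fbase WHERE {{
  ?city a <http://dbpedia.org/ontology/Place> .
  ?city foaf:page ?page .
  ?city <http://www.w3.org/2003/01/geo/wgs84_pos#lat> ?lat .
  ?city <http://www.w3.org/2003/01/geo/wgs84_pos#long> ?long .
  ?city rdfs:label ?label .
  ?city owl:sameAs ?fbase .
  FILTER (?lat > "{south}"^^xsd:float) .
  FILTER (?lat < "{north}"^^xsd:float) .
  FILTER (?long > "{west}"^^xsd:float) .
  FILTER (?long < "{east}"^^xsd:float) .
  FILTER (regex(str(?label), "^{name}($|,.*)")) .
  FILTER (regex(?fbase, "http://rdf.freebase.com/ns/")) .
}}"""


def build_query(name, south, north, west, east):
    """Fill the template with a city name and its bounding box."""
    return QUERY_TEMPLATE.format(
        name=name, south=south, north=north, west=west, east=east
    )


def label_matches(name, label):
    """Python equivalent of the label filter: the exact name, or the name
    followed by a comma and some additional text."""
    return re.search("^" + re.escape(name) + "($|,.*)", label) is not None


query = build_query("Milan", 45.40736, 45.547058, 9.07683, 9.2763)
print(query)
print(label_matches("Milan", "Milan, Italy"))  # True
print(label_matches("Milan", "Milano"))        # False
```

The `label_matches` helper is only there to make the meaning of "like" concrete: "Milan" and "Milan, Italy" pass, while "Milano" does not.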

This solution, of course, is still kind of naive. I have tested it with a list of WOEIDs of the top 233 cities around the world and its recall is pretty bad: out of 233 cities the empty results were 96, which corresponds to a recall lower than 60%. The reasons for this are many: sometimes the geographic coordinates of the cities in Wikipedia are just outside the bounding box provided by GeoPlanet; other times the city name returned by Yahoo! does not match any of the labels provided by DBPedia, or no rdfs:label property is present at all; some cities are not even categorized as Places; very often accents or alternative spellings make the city name (which usually is returned by Yahoo! without special characters) untraceable within DBPedia; and so on.

Trying to find an alternative approach, I reverted to good old Freebase. Its api/service/search API lets you query the full-text index of Metaweb's content base for a city name or part of it, returning all the topics whose name or alias matches it and ranking them according to different parameters, including their popularity in Freebase and Wikipedia. This is a really powerful and versatile tool, and I suggest that everyone who is interested in it check its online documentation to get an idea of its potential. The script I built is very similar to the previous one: the only difference is that, after the query to Yahoo! APIs, it queries Freebase instead of DBPedia. In the request it sends to the search API, the city name and bounding box coordinates are (like in the previous script) provided by Yahoo! APIs. Here are some notes to better understand the API call:

- the city name is provided as the query parameter, while type is set to /location/citytown to get only the cities from Freebase. In this case, I found that every city I was querying for was correctly assigned this type;

- the mql_output parameter specifies what you want in Freebase's response. In my case, I just asked for the Wikipedia ID (asking for the "key" whose "namespace" was /wikipedia/en_id). Speaking about IDs, Metaweb has done a great job in reconciling entities from different sources and already provides plenty of unique identifiers for its topics. For instance, we could get not only Wikipedia's and Freebase's own IDs here, but also the ones from Geonames if we wanted to (this is left to the reader as an exercise ;)). If you want to know more about this, just check the Id documentation page on Freebase wiki;

- the mql_filter parameter allows you to specify some constraints to filter data before they are returned by the system. This is very useful for us, as we can put our constraints on geographic coordinates here. I also specified the type /location/location to "cast" results on it, as it is the one which has the geolocation property. Finally, I repeated the constraint on the Wikipedia key which is also present in the output, as not all the topics have this kind of key and the API wants us to filter them away in advance.
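To make the three notes above more concrete, here is a hedged Python sketch of how such a request could be assembled. Only the parameter names (query, type, mql_output, mql_filter), the two type ids and the /wikipedia/en_id namespace come from the text; the host in `SEARCH_URL` is a placeholder, and the exact JSON shape of `mql_output` and `mql_filter` (including the `latitude>` style comparison keys) is my assumption, not something taken from the original script. The sketch only builds the URL and never contacts any service.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical placeholder host: only the api/service/search path is from the text.
SEARCH_URL = "https://example.org/api/service/search"


def build_search_url(city, south, north, west, east):
    """Assemble a search request for a city name within a bounding box."""
    # What we want back: the Wikipedia key of each matching topic (assumed shape).
    mql_output = [{"key": [{"namespace": "/wikipedia/en_id", "value": None}]}]
    # Constraints applied before results are returned (assumed shape).
    mql_filter = [{
        "type": "/location/location",  # "cast" to the type holding the geolocation
        "geolocation": {
            "latitude>": south,
            "latitude<": north,
            "longitude>": west,
            "longitude<": east,
        },
        # Repeat the key constraint so topics without a Wikipedia key are dropped.
        "key": [{"namespace": "/wikipedia/en_id"}],
    }]
    params = {
        "query": city,
        "type": "/location/citytown",
        "mql_output": json.dumps(mql_output),
        "mql_filter": json.dumps(mql_filter),
    }
    return SEARCH_URL + "?" + urlencode(params)


url = build_search_url("Milan", 45.40736, 45.547058, 9.07683, 9.2763)
params = parse_qs(urlsplit(url).query)
print(params["query"][0], params["type"][0])
```

Keeping the two MQL fragments as plain Python structures and serializing them with `json.dumps` avoids hand-escaping the nested JSON inside the query string.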

Luckily, in this case the results were much more satisfying: only 9 out of 233 cities were not found, giving us a recall higher than 96%. The reasons why those cities were missing follow:

- three cities did not have the specified name as one of their alternative spellings;

- four cities had non-matching coordinates (this can be due either to Metaweb's data or to Yahoo's bounding boxes; however, after a quick check it seems that Metaweb's are fine);

- two cities (Buzios and Singapore) just did not exist as cities in Freebase.

The good news is that, apart from the last case, the other ones can be easily fixed just by updating Freebase topics: for instance one city (Benidorm) just did not have any geographic coordinates, so (bow to the mighty power of the crowd, and of Freebase that supports it!) I just added them taking the values from Wikipedia and now the tool works fine with it. Of course, I would not suggest that anybody run my 74-line script now to reconcile the WOEIDs of all the cities in the world and then manually fix the empty results; however, this gives us hope that, with some more programming effort, this reconciliation could be possible without too much human involvement.
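For the record, the recall figures quoted for the two approaches follow directly from the counts given above. The short Python check below uses "recall" in the same loose sense as the text, that is, the share of cities for which a non-empty result was returned; the function name is mine.

```python
def recall(total, missing):
    """Fraction of cities for which at least one result was returned."""
    return (total - missing) / total


TOTAL_CITIES = 233

dbpedia_recall = recall(TOTAL_CITIES, 96)   # 96 empty results with DBPedia
freebase_recall = recall(TOTAL_CITIES, 9)   # 9 empty results with Freebase

print(f"DBPedia:  {dbpedia_recall:.1%}")    # 58.8%
print(f"Freebase: {freebase_recall:.1%}")   # 96.1%

# The three groups of missing cities listed above add up to the 9 failures.
assert 3 + 4 + 2 == 9
```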

So, I'm pretty satisfied right now. At least for our small project (which will probably become the subject of one blog post sooner or later ;)) we got what we needed, and then who knows, maybe with the help of someone we could make the script better and start adding WOEIDs to cities in Freebase... what do you think about this?

I have prepared a zip file with all the material I talked about in this post, so you don't have to follow too many links to get all you need. In the zip you will find:

- woe2wp.pl and woe2wpFB.pl, the two Perl scripts;

- test*.pl, the two test scripts that run woe2wp or woe2wpFB over the list of WOEIDs provided in the following file;

- woeids.txt, the list of 233 WOEIDs I tested the scripts with;

- output*.txt, the (commented) outputs of the two test scripts.

Here is the zip package. Have fun ;)

Semantic Annotations Part 1: an introduction

This week's post is about a topic I'm really interested in, that is, semantic annotations. It is so interesting for me that in the last two years, despite having jobs not directly involving this subject, I have tried to learn more and work on this topic anyway, using my spare time. Why is that so important for me? Well, it might become the same for you if you like its basic concept, that is, allowing anyone to write anything about anything else. Moreover, in a perfect (or at least well designed ;)) world semantic annotations would also allow anyone to only get the information written by people they trust/like (or that authorized them), without being overwhelmed by useless, wrong, or bad data.

A big problem with semantic annotations is that the subject itself, from a researcher's point of view, is really broad and any kind of breadth-first approach to the topic tends to leave you with pretty shallow concepts to deal with. At the same time, going depth-first while ignoring some aspects of annotations will only make your approach seem too simplistic to people who are experts in other aspects (or who already tried the breadth-first approach ;)). I think this is the main reason why my work on semantic annotations has become more and more like the development of Duke Nukem Forever... Which, in case you don't know, is a great example of how trying to reach absolute perfection, especially in a field where everything evolves so quickly, keeps you further and further from simply getting something done. For anyone who wants to read something about this, I'd really suggest taking a look at this article, which I found really enlightening.

So, trying to follow the call to "release early, release often", I'll post a series of articles about semantic annotations here. I have decided to skip scientific venues for a while, at least till I have something that is at the same time deep and broad enough. And if I never reach that... Well, you will have read everything I've done in the meantime and I hope something good will come out of it anyway.

What is an annotation?

To start understanding semantic annotations, I guess I should first clarify what an annotation is. Annotations are notes about something: if you are reading a book, you can write them in the page margins; if someone parks a car in front of your garage, you can leave one on her windshield (well, better if you directly write that on the windshield, so she'll remember it next time ;)); or, for example, you can add an annotation to food in the fridge with its expiration date. What is common between these annotations is that they are all written on a medium (paper, windshield, whatever!) and they are physically placed somewhere. Moreover, they have been written by someone at a specific moment in time, and they comment on something specific (some text within the book, the act of leaving a car in the wrong place, the shelf life of some food).

What is a computer annotation?

This is what happens "in real life", but what about computer annotations? As everything is data (some time ago I would have said "Everything is byte"), annotations become metadata, that is data about data. For them we would like to be able to maintain some of the characteristics of the "physical" annotations. They are useful if we can see them in the context of the piece of data they are annotating (what use is an expiration date if we don't know which food it refers to?) and if we can know their authors and dates of creation. There is no "physical medium" for them, but nothing prevents us from adding some other meta-meta-data (that is, data about the annotation itself) that customizes it to become some sort of electronic post-it, a formal note, an audio file, or whatever else we can imagine.

Computer annotation systems are far from new: think, for instance, about the concept of annotations in documents. However, they get a completely new meaning when a medium like the Web becomes available. In this case we talk about Web annotations: every resource with a URL can be uniquely identified, and using XPath it is also possible to access specific parts of a Web page. Collecting Web annotations makes it possible, whenever a web page is opened, to check whether metadata exist for it and display them contextually. Systems like Google Sidewiki allow exactly this kind of operation, but they are not the only ones available: tags are nothing more than simple annotations added to generic URLs (such as in delicious), photos (Flickr), and so on; ratings are typically associated with products, which in turn are often associated with unique URLs within a website, and systems like Revyu allow you to rate basically anything that has a URI. Finally, there are even games exploiting the concept of Web annotations, like The Nethernet.
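A Web annotation of this kind can be pictured as a small record that keeps the characteristics discussed so far: what it refers to (URL plus XPath), who wrote it, when, and the note itself. The Python sketch below is only an illustration under my own assumptions: the `WebAnnotation` class, the example page, the URL, the author and the date are all invented, and the standard library's ElementTree is used because it supports the small XPath subset needed here.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class WebAnnotation:
    url: str           # the resource being annotated
    xpath: str         # the specific part of the page the note refers to
    author: str        # who wrote the note
    created: datetime  # when it was written
    body: str          # the note itself


# A tiny, well-formed page standing in for the annotated resource (invented).
PAGE = """<html><body>
<h1>Fridge inventory</h1>
<ul>
  <li id="milk">Milk</li>
  <li id="eggs">Eggs</li>
</ul>
</body></html>"""

note = WebAnnotation(
    url="http://example.org/fridge.html",
    xpath=".//li[@id='milk']",
    author="alice",
    created=datetime(2011, 1, 15, tzinfo=timezone.utc),
    body="Expires on Friday",
)


def annotated_text(page_source, annotation):
    """Return the text of the page element the annotation points to, if any."""
    root = ET.fromstring(page_source)
    element = root.find(annotation.xpath)
    return None if element is None else element.text


print(f"{note.body!r} refers to {annotated_text(PAGE, note)!r}")
```

Showing the note next to the element it targets is the electronic version of the expiration date stuck on the right item in the fridge.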

What is a semantic annotation?

A semantic annotation is a computer annotation that relies on semantics for its definition. And here's the rub: this definition is so wide that it can actually cover many different families of annotations. For instance:

- semantics can be used to define information about the annotation itself in a structured way (e.g. the author, the date, and so on). An example of such an annotation system is Annotea;

- semantics can be used to unambiguously define the meaning of the content of the annotation. For instance, if you tag something "Turkey" nobody will ever be able to know if you were talking about the animal or the country, while if you tag it with Wordnet synsets 01794158 and 09039411 you'll be able to disambiguate;

- semantics can be used to (also) define the format of the contents of the annotation, meaning that the "body" of the annotation is not a simple unstructured text, but it contains RDF triples or some kind of structured information (the semantic annotation ontology I co-developed last year at Hypios follows this idea).
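The second and third families can be illustrated with a toy Python example. It is only a sketch under my own assumptions: the `Tag` structure, the `wordnet:` prefix, the `ex:depicts` predicate and the photo URL are invented for illustration, and the two synset ids are simply the ones quoted above, without asserting which sense each one denotes.

```python
from collections import namedtuple

# A tag is a human-readable label plus an optional sense identifier.
Tag = namedtuple("Tag", ["label", "sense"])

# Plain tags: two users writing "Turkey" produce indistinguishable annotations.
plain_a = Tag("Turkey", None)
plain_b = Tag("Turkey", None)

# Semantic tags: same label, but each one points to a different synset.
semantic_a = Tag("Turkey", "wordnet:01794158")
semantic_b = Tag("Turkey", "wordnet:09039411")


def same_meaning(a, b):
    """Two tags provably share a meaning only if both carry the same sense id."""
    return a.sense is not None and a.sense == b.sense


print(plain_a == plain_b)                    # True: no way to tell them apart
print(same_meaning(plain_a, plain_b))        # False: nothing proves they agree
print(same_meaning(semantic_a, semantic_b))  # False: different senses

# Third family: the body of the annotation is structured data instead of free
# text, here a list of (subject, predicate, object) triples.
annotation_body = [
    ("http://example.org/photo/1", "ex:depicts", semantic_a.sense),
]
```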

In the next episodes...

Ehmm... I guess I might have lost someone here, but trust me... there's nothing too difficult, it is just a matter of entering a little more into the details. As the "semantics" part requires a more in-depth description, I'll leave it to the next "episode" of this series. My idea, at least for now, is the following:

- Semantic Annotations Part 2: where's the semantics?

- Semantic Annotations Part 3: early prototypes for a semantic annotation system

- Semantic Annotations Part 4: the SAnno project

I'm pretty sure there will be changes in this list, but I'll make sure they will be reflected here so you will always be able to access all the other articles from every post belonging to this series.

The cookie jar metaphor

Searching for "cookie jar metaphor" on Google you will find the well known "hand in the cookie jar" one, so coming up with a new metaphor that relies on the same concept might sound pretty ambitious... However, it came to my mind like this and I do not want to change it, no matter if it will be drowned in an ocean of unrelated information.

The thing is: suppose I have been using a cookie jar to save my money for a while and now I have collected a rather good amount of it. One day I decide I want to give my money to those people who really need it and start sharing the contents of my cookie jar, so I open it, I put it on the table in my kitchen, I put a sign next to it saying "Free money, take as much as you need", then I leave my door unlocked so anyone could enter and get that money.

Would that money be really shared with others? Would that be shared with others who need it?

I have been writing free code basically for as long as I have been writing code. Every time I released a new piece of software (well, usually not amazing apps) I uploaded it somewhere and put a link on my website. Well, for a while my website has been a hacker's challenge closed to search engines and most Internet users :-), but apart from that I have tried nevertheless to share my discoveries in one way or another. When I started to do research, I tried to do the same with my discoveries, tools, and datasets. I actually had a chance to share some of my stuff with some people and every time it has been a win-win situation, as I learned from others at least as much as they did from me.

What I have learned, however, is that most of the time I have just opened my cookie jar on the table, not being able to reach many people outside, and most of all not being able to reach the ones who might have been most interested in what I was doing. I always thought that pushing my discoveries and accomplishments too much was more like showing off than sharing what I did, and supposed that if anyone was interested in the same things then they would probably have found my work anyway.

Well, I guess I was SO wrong about this. Telling people around that I have that open cookie jar is my duty if what I want to do is share the money I have collected in it. So I think that this kind of communication becomes part of free software as much as writing code, and part of research as much as studying others' work and writing papers about yours.

So now here's the problem: how to share my stuff more effectively? I guess being more active within specific communities (those who might get the best out of what I share), using other channels to communicate (e.g. I sometimes tweet about my blog updates, I guess I'll do that more often - do you have #hashtags to suggest?), but most of all finding the time to do all of this.

Are you doing this? If so, do you have any comments or suggestions? Any feedback is welcome, either here or by any other electronic or real life means ;-)

New (old) paper: “On the use of correspondence analysis to learn seed ontologies from text”

Here is another piece of work done in the last year(s), and here is its story. In January 2009, as soon as I finished my PhD, I was put in contact with a company searching for people to implement Fionn Murtagh's Correspondence Analysis methodology for the automatic extraction of ontologies from text. After clarifying my position about it (that is, that what was extracted were just taxonomies and that I thought that the process should become semi-automatic), I started a 10-month project in my university, officially funded by that company. I say "officially" because, while I regularly received my paycheck each month from the university, the company does not seem to have paid yet, almost two years after the beginning of the project. Well, I guess they are probably just late and I am sure they will eventually do that, right?

By the way, the project was interesting even if it started as just the development of someone else's approach. The good point is that it provided us with some interesting insights about how ontology extraction from text works in practice, what real-world problems you have to face and how to address them. And the best thing is that, after the end of the project, we found we had enough enthusiasm (and most importantly a Master student, Fabio Marfia... thanks! ;)) to continue that.

Fabio has done great work, taking the tool I had developed, expanding it with new functionalities, and testing them with real-world examples. The outcome of our work, together with Fabio's graduation of course (you can find material about his thesis here), is the paper "On the use of correspondence analysis to learn seed ontologies from text" we wrote together with Matteo Matteucci. You can find the paper here, while here you can download its poster.

The work is not finished yet: there are still some aspects of the project that we would like to delve deeper into and there are still things we have not shared about it. It is just a matter of time, however, so stay tuned ;-)

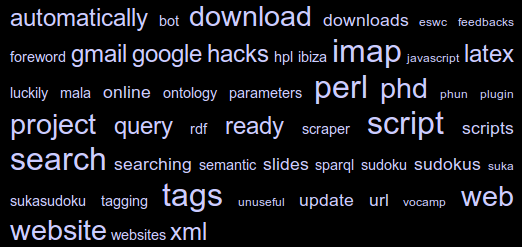

Perl Hacks: infogain-based term cloud

This is one of the very first tools I developed in my first year of post-doc. It took a while to publish it as it was not clear what I could disclose of the project that was funding me. Now the project has ended and, after more than one year, the funding company still has not funded anything ;-) Moreover, this is something very far from the final results that we obtained, so I guess I can finally share it.

Rather than a real research tool, this is more like a quick hack that I built up to show how we could use Information Gain to extract "interesting" words from a collection of documents, and term frequencies to show them in a cloud. I have called it a "term cloud" because, even if it looks like the well-known tag clouds, it is not built up with tags but with terms that are automatically extracted from a corpus of documents.

The tool is called "rain" as it is based on rainbow, an application built on top of the "bow" libraries that performs statistical text classification. The basic idea is that we use two sets of documents: the training set is used to instruct the system about what can be considered "common knowledge"; the test set is used to provide documents about the specific domain of knowledge we are interested in. The result is that the words most likely to discriminate the test set from the training one are selected, and their occurrences are used to build the final cloud.
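The selection step just described can be sketched in plain Python, without rainbow. This is a simplified illustration and not what the Perl script or rainbow actually compute: information gain is calculated here on a single binary feature ("the document contains the term") against the training/test label, and the names `pool_size`, `cloud_size` and `size_classes`, as well as the toy documents, are my own assumptions.

```python
import math
from collections import Counter


def entropy(counts):
    """Shannon entropy (in bits) of a list of class counts."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c)


def information_gain(term, train_docs, test_docs):
    """Information gain of 'document contains term' w.r.t. the train/test label."""
    groups = {True: [0, 0], False: [0, 0]}  # term present? -> [train, test] counts
    for label, docs in enumerate((train_docs, test_docs)):
        for doc in docs:
            groups[term in doc][label] += 1
    n = len(train_docs) + len(test_docs)
    remainder = sum(sum(g) / n * entropy(g) for g in groups.values() if sum(g))
    return entropy([len(train_docs), len(test_docs)]) - remainder


def term_cloud(train_docs, test_docs, pool_size=50, cloud_size=50, size_classes=6):
    """Map the selected terms to a size class between 1 and size_classes."""
    vocabulary = {word for doc in test_docs for word in doc}
    # Pool: top words by information gain (ties broken alphabetically).
    ranked = sorted(
        vocabulary, key=lambda w: (-information_gain(w, train_docs, test_docs), w)
    )
    pool = set(ranked[:pool_size])
    # Cloud: top pool words by number of occurrences in the test set.
    counts = Counter(word for doc in test_docs for word in doc if word in pool)
    top = counts.most_common(cloud_size)
    if not top:
        return {}
    highest = top[0][1]
    return {w: max(1, math.ceil(size_classes * c / highest)) for w, c in top}


# Toy corpora: each document is a list of already tokenized words.
train = [["the", "cat", "sat"], ["the", "dog", "ran"], ["the", "sun", "is", "hot"]]
test = [["the", "tag", "cloud"], ["the", "tag", "semantics"],
        ["tag", "the", "tag", "folksonomy"]]

print(term_cloud(train, test, pool_size=2))
```

In this toy run "the" appears in every document of both sets, so its information gain is zero and it never enters the pool, while "tag" separates the two sets perfectly and ends up in the largest size class. Note that this simplified measure is symmetric: a word that is much more typical of the training set than of the test set would also score high, so a real tool may need an extra check on the direction of the difference.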

Everything is done within a pretty small Perl script, which does not do much more than calling the rainbow tool (which has to be installed first!) and using its output to perform calculations and build an HTML page with the generated cloud. You can download the script from these two locations:

- here you can find a bare-bones version of the script, which only contains the script and a few test document collections. The tool works (that is, it does not return errors) even without training data, but will not perform well unless it is properly trained. You will also have to download and install rainbow before you can use it;

- here you can find an "all inclusive" version of the script. It is much bigger but it provides: the ".deb" file to install rainbow (don't be frightened by its release date, it still works with Ubuntu Maverick!), and a training set built by collecting all the posts from the "20_newsgroups" data set.

How can I run the rain tool?

Supposing you are using the "all inclusive" version, that is, you already have your training data and rainbow installed, running the tool is as easy as typing

perl rain.pl <path_to_test_dir>

The script parameters can be modified within the script itself (see the following excerpt from the script source):

my $TERMS = 50;    # size of the pool (top words by infogain)
my $TAGS = 50;     # final number of tags (top words by occurrence)
my $SIZENUM = 6;   # number of size classes to be used in the HTML document,
                   # represented as different font sizes in the CSS
my $FIREFOX_BIN = '/usr/bin/firefox';        # path to browser binary
                                             # (if present, firefox will be called to open the HTML file)
my $RAINBOW_BIN = '/usr/bin/rainbow';        # path to rainbow binary
my $DIR_MODEL = './results/model';           # used internally by rainbow
my $DIR_DATA = './train';                    # path to training dir
my $DIR_TEST = $ARGV[0];                     # path to test dir
my $FILE_STOPLIST = './stopwords.txt';       # stopwords file
my $FILE_TMPDATA = './results/data.txt';     # file where data generated
                                             # by rainbow will be dumped
my $HTML_TEMPLATE = './template.html';       # template file used to generate the
                                             # tag-cloud html page
my $HTML_OUTPUT = './results/output.html';   # final html page

As you can see, there are quite a lot of parameters but the script can also be run just out of the box: for instance, if you type

perl rain.pl ./test/folksonomies

you will see the cloud shown in Figure 1. And now, here is another term cloud built using my own blog posts as a text corpus:

New (old) paper: “GVIS: A framework for graphical mashups of heterogeneous sources to support data interpretation”

I know this is not a recent paper (it was presented in May), but I am slowly doing a recap of what I have done during the last year and this is one of the updates you might have missed. "GVIS: A framework for graphical mashups of heterogeneous sources to support data interpretation", by Luca Mazzola, me, and Riccardo Mazza, is the first paper (and definitely not the last, as I have already written another!) with Luca, and it has been great fun for me. We had a chance to merge our works (his modular architecture and my semantic models and tools) to obtain something new, that is, the visualization of a user profile based on her browsing history and tags retrieved from Delicious.

Curious about it? You can find the document here (local copy: here) and the slides of Luca's presentation here.