Computation In The Cloud


Computation in the cloud uses the Internet as a huge collection of CPUs (central processing units) to accomplish processing-intensive tasks. This form of computing is a mixture of cloud computing (CC) and high performance computing (HPC). This document describes and evaluates computation in the cloud, as well as related side topics such as parallelization and virtualization. The following topics are examined:

- Overview of high performance computing – why is it needed and what technologies have been used to fill this computational need?

- Overview of cloud computing – what is it and why is it important?

- Benefits of performing high-performance computing in the cloud

- Concerns related to high-performance cloud computing (HPCC)

- HPCC providers – who are the vendors and providers that allow for HPCC?

- Case studies – where has HPCC been used, and was it a success?


Evolution of High Performance Computing

The Problem

Many of the applied sciences (e.g. engineering and medical physics) share a common challenge: scientific computing relies on complex mathematical models to solve scientific and engineering problems [1]. Complex models are more realistic and produce more accurate results because they account for a greater number of variables and factors in the experimental design. However, the more complex a model is, the more computational power is needed to generate results, and often that need exceeds the processing power of a single computer.

This dilemma is not unique to the applied sciences; many other areas face the same problem of complexity. Financial analyses and simulations, e.g. for predictions and forecasts, may also require an extraordinary amount of computational power, and certain software games or simulations may be so processing-intensive that they need more computational power than one computer can deliver.

Two basic approaches can be used to address the complexity problem: either simplify the model or increase computational power [1]. Simplifying the mathematical model tends to lower the computational need, but it also produces less accurate solutions. Increasing computational power, on the other hand, lets the user keep the model's complexity and generate highly accurate results. This second approach is the focus of this report, and the following section defines high-performance computing (HPC).

What is HPC, and how is it implemented?

High performance computing is the use of parallel processing to run advanced application programs efficiently, reliably, and quickly. HPC uses supercomputers and computer clusters to solve advanced computational problems [2]. The major technologies that have been used for high performance computing are described below.

As mentioned above, supercomputers are one way of implementing HPC. These are single computers with high-end specifications and enhanced capabilities. Because there are physical limits to how fast a single CPU can be clocked, manufacturers have shifted from raising CPU clock speeds to building processors with multiple cores (multi-core), and this approach is evident in most of today’s supercomputers. The multi-core design enhances performance through parallel processing: tasks no longer have to run serially, but can be divided into sub-tasks that run at the same time.
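The divide-into-sub-tasks idea can be illustrated with a small Python sketch. The workload (a sum of squares) is a hypothetical stand-in for a processing-intensive task, and `split_range`, `partial_sum_of_squares`, and `sum_of_squares` are illustrative names. The standard library's `concurrent.futures.ProcessPoolExecutor` runs the sub-tasks in separate processes so that they can use several cores at once.

```python
from concurrent.futures import ProcessPoolExecutor


def partial_sum_of_squares(bounds):
    """One sub-task: sum i*i over the half-open range [lo, hi)."""
    lo, hi = bounds
    return sum(i * i for i in range(lo, hi))


def split_range(n, parts):
    """Divide [0, n) into at most `parts` contiguous chunks of near-equal size."""
    if n <= 0:
        return []
    step = -(-n // parts)  # ceiling division
    return [(lo, min(lo + step, n)) for lo in range(0, n, step)]


def sum_of_squares(n, parts, executor=None):
    """Run the sub-tasks on `executor` (e.g. a process pool) or serially."""
    chunks = split_range(n, parts)
    mapper = executor.map if executor is not None else map
    # Combine the partial results once every sub-task has finished.
    return sum(mapper(partial_sum_of_squares, chunks))


if __name__ == "__main__":
    n = 2_000_000
    serial = sum_of_squares(n, parts=1)
    with ProcessPoolExecutor() as pool:  # one worker per available CPU by default
        parallel = sum_of_squares(n, parts=8, executor=pool)
    print(serial == parallel)
```

Passing no executor runs the same sub-tasks serially, which makes it easy to check that the parallel result matches. For a workload this small, process start-up overhead can outweigh the gain; the benefit appears only when each sub-task does substantial work.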

Aside from multi-core CPUs, computational processing can also be enhanced with GPUs (Graphics Processing Units). GPUs have a large number of cores and offer parallel hardware at low cost. Using the GPU for computations in applications other than graphics is called GPGPU (General-Purpose computing on Graphics Processing Units). However, because of its specialized architecture, the GPU cannot perform all the computational tasks of a CPU and may not be suited to every algorithm [3]. GPGPU can also be used within traditional (CPU-based) computing clusters.

A computer cluster is a network of computers that is designed to process large tasks in parallel. This setup utilizes the divide-and-conquer approach: a processing-intensive task is divided into sub-tasks, the computers in the cluster (computing nodes) execute their individual sub-tasks, and the results are aggregated at the end. A computer cluster is typically configured with a master node, which manages the computing nodes (initialization, tasking, queuing, scheduling, etc.) [4]. While parallelization does improve performance, computer clusters do have some drawbacks:

- Configuration and maintenance – time-consuming and expensive

- "Bursty" usage – the clusters tend to be poorly utilized. There are lengthy idle spells and isolated peak times (over-queued) [3].
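The master node's queuing and scheduling role can be sketched as greedy list scheduling: each queued sub-task goes to whichever compute node becomes free first. This is a simplified model, not the algorithm of any particular cluster scheduler; task durations are assumed to be known in advance, and the function name `schedule` is hypothetical. The utilization figure it returns shows how idle node time accumulates.

```python
import heapq


def schedule(task_durations, num_nodes):
    """Greedy list scheduling of sub-tasks onto compute nodes.

    Returns (assignments, makespan, utilization); each assignment is
    (task_id, node_id, start_time, finish_time).
    """
    if num_nodes < 1:
        raise ValueError("need at least one compute node")
    # Min-heap of (time at which the node becomes free, node id).
    free_at = [(0.0, node) for node in range(num_nodes)]
    heapq.heapify(free_at)
    assignments = []
    for task_id, duration in enumerate(task_durations):
        start, node = heapq.heappop(free_at)  # earliest-available node
        finish = start + duration
        assignments.append((task_id, node, start, finish))
        heapq.heappush(free_at, (finish, node))
    makespan = max(t for t, _ in free_at)
    busy = sum(task_durations)
    # Fraction of the paid-for node time that was actually spent computing.
    utilization = busy / (makespan * num_nodes) if makespan > 0 else 0.0
    return assignments, makespan, utilization
```

For five sub-tasks lasting 4, 3, 3, 2 and 2 time units on two nodes, the run finishes at time 8 with 87.5% utilization, because one node sits idle while the other completes the last task.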

Another approach to implementing HPC is grid computing, an extension of the traditional computer cluster. Grid computing can be understood as on-demand horsepower for large-scale experiments, supplied by a network of machines [1]. While traditional computing clusters are typically in-house (in one location), grids are geographically dispersed. Grid computing relies on a large number of resources belonging to different administrative domains, and it uses dynamic discovery services to find the best set of machines for the computational need [1]. Even though there are numerous successful grid computing networks (especially in the scientific community), this computing paradigm has some drawbacks:

- Bureaucracy – since the computing resources are shared, users must submit proposals to use the grids. This process can be time-consuming and typically prevents smaller research teams from using the service [1].

- Technical limitations – grid networks have pre-defined computing environments with prescribed software tools and APIs (Application Programming Interfaces). This inflexible setup may not meet all the needs of a scientific project and could require the code to be rewritten for the grid [1].

The latest trend in high-performance computing, however, is using the cloud. Like clusters and grids, cloud computing exploits economies of scale to deliver advanced capabilities; unlike clusters, however, cloud resources are non-specific and do not guarantee identical properties from run to run [2]. The cloud's virtual machine abstraction and the commercial implementation of its infrastructure are the key differences between cloud and grid computing [3]. To clarify the usage and advantages of cloud computing with respect to HPC, the next section gives a general overview of this technology.

Overview of the Cloud

Definition and Characteristics

Cloud computing can be confusing because it is an abstract concept and has been assigned various meanings over the years. In fact, even Oracle’s CEO, Larry Ellison, vented his frustration with the term when he said, "The interesting thing about cloud computing is that we’ve redefined cloud computing to include everything that we already do...I don't understand what we could do differently in the light of cloud computing other than change the wording of some of our ads" [5].

Looking at several different definitions will help describe the underlying idea:

- Cloud computing (CC) is a computational paradigm in which dynamically scalable, virtualized resources are provided as a service over the Internet [6].

- CC is programs, tools, or services using shared resources available over a network in lieu of being local [7].

- CC is Internet-based computing, whereby shared resources, software, and information are provided to computers and other devices on-demand, like a public utility [2].

- CC is a technology aiming to deliver on-demand information technology (IT) resources on a pay-per-use basis (over the Internet) [1].

Thus, from these definitions several key characteristics of cloud computing can be derived [2]:

- On-demand self-service – information technology services are available all the time and accessible from anywhere.

- Broad network access – CC is only possible with a network (i.e. the Internet).

- Resource pooling – resources are shared and typically virtualized (abstracted).

- Rapid elasticity – resources are dynamically scalable, which means that they can immediately grow or shrink, depending on demand. There is also the appearance of infinite computing resources [5].

- Measured service – the cost of the service depends on usage. CC typically employs a pay-as-you-go pricing model.

Cloud Computing Typologies

The numerous definitions of cloud computing have also produced a number of different CC classifications. One basic classification is storage, applications, and computing, and cloud computing offers all three types of services to consumers. Amazon’s Simple Storage Service (S3) is an example of an online storage utility. Websites such as Yahoo! Mail, Gmail, and Google Docs are examples of cloud computing applications. The final category, computing, is represented by companies and products such as Rackspace, Microsoft Azure, and Google App Engine [7].

Cloud computing is also classified as public, private, and hybrid. A public cloud offers services available to everyone. Public clouds have the strengths of improved scalability and lower costs (for up-front and maintenance expenditures). On the other hand, a private cloud, which is private to an organization, has the advantages of being more flexible (can be adapted to the organization’s needs), more secure, and cheaper with heavy usage [7]. The third kind of cloud, a hybrid, is a composition of private and public clouds that remain unique entities but are bound together [8]. A hybrid cloud seeks the best of both worlds by taking the middle ground.

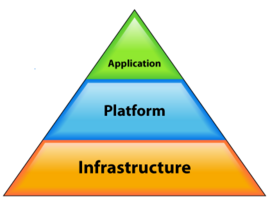

Another classification divides cloud computing into different types of services. Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), and Software-as-a-Service (SaaS) are the three big divisions. A brief description of each is provided below, but it should be noted that some experts do not fully agree with this well-known classification. For example, Armbrust et al. argue that the distinction between IaaS and PaaS is too vague for these services to be considered separately—rather they should be grouped together [5].

- IaaS, sometimes referred to as Hardware-as-a-Service, delivers information technology (IT) infrastructure based on virtual and physical resources, e.g. CPU type and power, memory, storage, and operating system. IaaS is highly configurable, and users can simply set up their system on top of the cloud infrastructure, which is hosted and managed by a dedicated provider [1].

- PaaS provides a development platform (an application framework and a set of APIs) on which users can create their own cloud applications [1]. In PaaS the environment is predefined, which may require users to significantly alter existing software structures [6]. Google (App Engine) and Microsoft (Azure) are two big players in this field [1].

- SaaS is the top end of the cloud computing stack; it provides end users with integrated services comprising hardware, development platforms, and applications. The services are hosted in the cloud and are accessible anytime and anywhere. Users are unable to modify the functionality of the services, since they have been created for specific purposes. Examples of SaaS include Google Docs and Salesforce.com business applications (Customer Relationship Management and Project Management tools) [1].

The focus of this report is how Infrastructure-as-a-Service can be used in high performance computing. This kind of computation is typically done in a public cloud, which maximizes advantages related to economies of scale and maintenance, as well as other benefits discussed in the following section.

HPCC Benefits

Conducting high performance computing in the cloud is a powerful combination with several advantages. Some of the key benefits are discussed below.

The scalability of cloud computing is a major advantage in high performance computing. As mentioned before, a downside of traditional computer clusters is that they tend to be poorly utilized: much of the time, their considerable computational power sits idle. Cloud computing solves this problem by dynamically adjusting the amount of processing power to the computational need. When the workload peaks, more resources can be added, and when the task is finished, these resources can be released [1]. This elasticity shifts the risk of over- and under-provisioning away from the user and eliminates the need to plan far ahead for provisioning [5]. Moreover, cloud computing’s capacity (especially in a public cloud) is practically infinite. The scalability of cloud computing therefore closely matches the computational requirements of most high performance tasks, which are extremely processing-intensive, but only for a relatively short period of time.
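The provisioning risk can be quantified with a short calculation. The sketch below compares a fixed cluster sized for the peak of a hypothetical bursty workload with elastic provisioning that tracks demand hour by hour. For simplicity it assumes the same price per node-hour in both cases, which ignores the real differences between owning hardware and renting it; the point is the cost of idle capacity, not the exact prices.

```python
def provisioning_comparison(demand, price_per_node_hour):
    """Compare peak-sized fixed provisioning with elastic provisioning.

    demand[i] is the number of nodes actually needed during hour i
    (a hypothetical workload). The same price per node-hour is assumed
    for both options to isolate the cost of idle capacity.
    """
    peak = max(demand)
    if peak == 0:
        raise ValueError("demand must be non-zero in at least one hour")
    fixed_node_hours = peak * len(demand)  # paid for whether used or not
    elastic_node_hours = sum(demand)       # nodes added and released with demand
    return {
        "fixed_cost": fixed_node_hours * price_per_node_hour,
        "elastic_cost": elastic_node_hours * price_per_node_hour,
        "fixed_utilization": elastic_node_hours / fixed_node_hours,
    }
```

For a hypothetical eight-hour profile of 0, 0, 10, 100, 10, 0, 0 and 0 nodes at $0.50 per node-hour, the peak-sized cluster costs $400 and is only 15% utilized, while elastic provisioning costs $60.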

Flexibility is another benefit of cloud computing. It is related to scalability but is broader, covering other aspects such as the cost structure discussed later in this section. Cloud computing allows flexibility in planning (no capacity planning is needed) and in customization (the execution environment can be modified as needed) [1]. Cloud computing is also well suited to one-off calculations, for which a traditional cluster might be desirable but infeasible [3]. This all-around flexibility gives cloud computing a leg up on other HPC technologies.

Users of cloud computing also benefit from not having to maintain the computers. The cloud provider, not the user, updates and maintains the machines, which saves time, money, and the occasional frustration of technical troubleshooting. Letting the owner (and subject matter expert) perform the maintenance allows the user to bypass the difficult setup and operation of traditional HPC configurations [1].

Accessibility also makes cloud computing compelling. Not only are services available anytime, from anywhere in the world (presuming, of course, an Internet connection), but cloud computing also lowers the barriers to entry for high performance computing. Historically, only a privileged few had access to HPC: the costs of supercomputers and computing clusters were high and prevented many users from performing processing-intensive calculations. Cloud computing changes that paradigm by eliminating the up-front commitment (in this case, the high cost of setting up a traditional HPC environment) [5]. Likewise, no time needs to be spent procuring a high-end computer system, because cloud computing makes purchasing, maintaining, and even understanding HPC hardware unnecessary [2][9]. Virtualization abstracts the details of network and hardware architectures, making them transparent to the user [2][3]. Cloud computing thus significantly reduces complexity and cost, giving easy access to large, distributed systems and to computational resources that would otherwise be unavailable [3][9].

Another benefit is the experience and expertise that a cloud computing provider brings. This is especially true of vendors that specialize in high performance computing in the cloud (so-called HPC-as-a-Service, discussed later in the report). An HPC-as-a-Service provider not only sets up and maintains the system environment, but also has expertise in HPC optimizations and can engineer the system for peak demand. The user therefore gets a faster time-to-results, especially when computational requirements greatly exceed existing computing capacity [2].

Arguably the greatest advantage of using the cloud for HPC is the pricing model. Because cloud computing uses a pay-as-you-go cost structure, users do not have to worry about capital, maintenance, and upgrade costs for sophisticated in-house hardware (as with traditional clusters). Cloud computing also eliminates costs for personnel, utilities, equipment housing, and insurance [3]. The user has no continuing operating costs, because these have been transferred to the cloud provider, and pays only for the processing power actually used; this pricing model makes high performance computing economically feasible and affordable. Cloud computing’s cost associativity (using X times the computation for 1/X of the time costs no more) allows large-scale processing power without a correspondingly large price tag, which is the ultimate benefit [3][5].
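Cost associativity follows from billing by node-hours. Under the idealized assumptions below (a perfectly parallel job and continuous per-node-hour billing, both simplifications), the bill is the same whether one node runs for 1,000 hours or 1,000 nodes run for one hour; only the wall-clock time changes. The function name and prices are hypothetical.

```python
def cost_and_wall_time(total_node_hours, nodes, price_per_node_hour):
    """Idealized pay-as-you-go job.

    Assumes the work is perfectly parallel and billed continuously per
    node-hour, so adding nodes shortens the run without changing the bill.
    Returns (wall_clock_hours, cost).
    """
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    wall_clock_hours = total_node_hours / nodes
    cost = nodes * wall_clock_hours * price_per_node_hour
    return wall_clock_hours, cost
```

At a hypothetical $0.25 per node-hour, a 1,000-node-hour job costs $250 on 1, 8, or 1,000 nodes, finishing in 1,000, 125, or 1 hour respectively. In practice, billing granularity and imperfect parallel scaling make the equivalence only approximate.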

HPCC Concerns

Even though there are many positives to using high performance cloud computing, there are also some negatives. Some of the major concerns are listed below.

Reliability and repeatability are concerns in high performance cloud computing [2]. Since the computing has effectively been outsourced to an external cloud provider, users are no longer in complete control of their technology. For time-bounded applications, a cloud computing solution may not be reliable enough to keep risk acceptably low [2]. The virtualization, or abstraction, of computing resources also affects repeatability. For example, some high performance scientific computations may need to be repeated as part of an experimental design. However, cloud computing is dynamic: virtualized computational resources are adjusted based on need, so there is no guarantee that the system environment is identical from run to run. Because repeatability is not assured, some users may be discouraged from using HPC in the cloud.

Data privacy and security are another issue in high performance cloud computing, as they are for any service in the cloud. Users must be aware that when they choose to use cloud services, their data can be accessed by the cloud provider. This level of accessibility may be undesirable for certain applications and for organizations that wish to keep such information confidential [1]. For example, intellectual property, competitive planning information, and classified military intelligence would not be trusted to remote resources (network, storage, etc.) [2]. As another example, a U.S.-based cloud provider may not be able to guarantee the confidentiality of Swiss bank account information, because data privacy depends on the location of the cloud datacenter and on the laws and regulations of that country [1].

Although the cloud offers virtually unlimited computing resources, there are concerns in HPC circles that the cloud’s performance is degraded and insufficient compared to traditional computing clusters. First, network limitations in the cloud may lower performance. The network is a vital component of a computing cluster because it enables (or limits) collaboration among geographically distributed systems. The network also determines the effective distance between the computation and the data; if bandwidth in the cloud is low, nodes spend more time waiting for data and the effective processing power drops. A second performance concern stems from the virtualization of the hardware architecture that is inherent in cloud computing. This abstraction hinders algorithm optimization, because algorithms that optimize for specific hardware cannot be used. Moreover, the added layer of virtualization slows performance and lengthens response times [2].

However, these performance concerns can be countered, at least to some degree. For example, low bandwidth and high latency can be mitigated by limiting network communication during computations or by using dedicated networks. Additionally, even though virtualization rules out hardware-specific optimizations, the code can be enhanced in other ways to produce faster results, and well-optimized HPC tools can be included to improve performance [9].
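A toy cost model makes the first mitigation concrete. It assumes, purely for illustration, that the computation divides perfectly among nodes and that every synchronization round costs one fixed network latency; real codes also pay bandwidth costs and suffer load imbalance. Under that assumption, communicating less often, or using a lower-latency dedicated network, shrinks the overhead term directly. The function name and the numbers in the usage note are hypothetical.

```python
def estimated_runtime(compute_seconds, nodes, sync_rounds, latency_seconds):
    """Toy model of wall-clock time for a parallel job.

    Assumes the computation divides perfectly across nodes and that each
    synchronization round costs one fixed network latency (bandwidth limits
    and load imbalance are ignored).
    """
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    compute_time = compute_seconds / nodes
    communication_time = sync_rounds * latency_seconds
    return compute_time + communication_time
```

With one hour of computation spread over 64 nodes, 10,000 synchronization rounds at 0.5 ms each add 5 seconds to a 56.25-second run, while batching the exchanges into 100 rounds cuts the overhead to 0.05 seconds.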

HPCC Providers (Enablers)

Cloud computing is a big business these days, and many companies have been drawn to the cloud. However, most of the vendors have focused on creating services in the SaaS category. Infrastructure-as-a-Service is more relevant for high performance computing, and this section presents some of the key players in this arena.

By far the most-mentioned IaaS provider is Amazon. Amazon Web Services (AWS) offers a variety of products and services. Aside from S3, which was previously mentioned, AWS also offers the Elastic Block Store (EBS) as an online storage tool. However, Amazon’s most important product for this discussion of high performance cloud computing is the Elastic Compute Cloud (EC2). According to Amazon’s website, EC2 "is a web service that provides resizable compute capacity in the cloud. It is designed to make web-scale computing easier for developers" [10]. EC2 is a large computing infrastructure and service based on hardware virtualization [1]. The service offers bare compute resources on demand and employs a pay-as-you-go pricing model, in which users pay only for the resources used. EC2 is an attractive alternative to other cloud computing options because of its large user base and mature API [3].

Amazon EC2 allows users to provision compute clusters fairly quickly, which makes the service very useful for high performance computing [2]. Amazon Machine Images (AMIs) are the virtual machine images from which EC2 instances are launched; the instances run on generic hardware. Public AMIs are available and can be customized to meet a user’s needs [9]. The term "Elastic" in EC2 refers to the fact that an arbitrary number of AMI instances can be started and run in the cloud to match the computational need (scalability) [9]. A virtual computing cluster can be built simply by starting multiple virtual nodes (AMI instances) [3]. Command-line utilities and GUIs, such as Elasticfox (a Mozilla Firefox plugin), are used to start, monitor, and terminate the virtual machines [9]. Moreover, Amazon recently introduced a "cluster instance" created specifically for HPC. It improves on the standard virtual computing environment because it comes with a guaranteed, quantified network speed, which is needed for network-intensive applications and computations [6].
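Starting and stopping a virtual cluster can also be scripted. The sketch below assumes the AWS SDK for Python (boto3), a later tool than the utilities mentioned above, whose EC2 client provides `run_instances` and `terminate_instances`. The helper functions are hypothetical, and the AMI ID, instance type, and placement group name are placeholders to be supplied by the user. Setting `MinCount` and `MaxCount` to the same value asks EC2 to launch the whole cluster or nothing.

```python
def cluster_launch_request(ami_id, node_count, instance_type, placement_group=None):
    """Build keyword arguments for EC2's RunInstances call (hypothetical helper)."""
    request = {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        # Equal MinCount and MaxCount: launch all node_count instances or none.
        "MinCount": node_count,
        "MaxCount": node_count,
    }
    if placement_group is not None:
        # Optional placement group, e.g. one created for low-latency networking.
        request["Placement"] = {"GroupName": placement_group}
    return request


def launch_virtual_cluster(ec2_client, ami_id, node_count, instance_type, placement_group=None):
    """Start the nodes and return their instance IDs.

    ec2_client is assumed to be a boto3 EC2 client, e.g. boto3.client("ec2").
    """
    response = ec2_client.run_instances(
        **cluster_launch_request(ami_id, node_count, instance_type, placement_group)
    )
    return [instance["InstanceId"] for instance in response["Instances"]]


def terminate_virtual_cluster(ec2_client, instance_ids):
    """Shut the nodes down so that pay-as-you-go billing stops."""
    ec2_client.terminate_instances(InstanceIds=instance_ids)
```

With a real client created by `boto3.client("ec2")` and valid credentials, `launch_virtual_cluster` returns the new instance IDs, which can later be passed to `terminate_virtual_cluster` once the computation ends. Passing the client in as a parameter also keeps the request-building logic testable without an AWS account.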

Google is involved in so many online activities that it should come as no surprise that the company is also an enabler of high performance cloud computing. One of its significant contributions is the promotion of MapReduce, a programming model for parallel processing in the cloud [2]. MapReduce is especially well suited to data-intensive applications because it handles large data sets by conceptually moving the computation to the data (instead of the other way around) [1][2]. According to [2], MapReduce offers a better quality of service than other parallel programming technologies, in part because of the simplicity of its programming model.
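The map, shuffle, and reduce phases can be illustrated with the classic word-count example. This single-process sketch only mimics the structure of the model; it is not Google's implementation or Hadoop's API, and the function names are illustrative.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values for one key."""
    return key, sum(values)


def map_reduce(documents):
    # In a real deployment the map calls run in parallel, each on the node
    # that stores its input, and the reduce calls are spread across nodes too.
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
```

For example, `map_reduce(["the cloud", "The grid and the cloud"])` returns `{"the": 3, "cloud": 2, "grid": 1, "and": 1}`. Because each map call needs only its own record and each reduce call only its own key, both phases parallelize without coordination beyond the shuffle.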

Hadoop is an open source implementation of MapReduce. It uses a data-centered approach to parallel runtimes by staging data in compute/data nodes of clusters and then moving the computations to the data for data processing. Hadoop hides the operational complexity of running numerous cloud computing servers in parallel [2].
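Moving computation to the data can be sketched as a scheduling rule: when a compute slot is free, prefer a map task whose input block already has a replica on that node. The greedy function below is a hypothetical illustration of this preference, not Hadoop's actual scheduler, which is considerably more involved (it also takes rack locality into account, for example).

```python
def assign_map_tasks(block_replicas, free_nodes):
    """Greedy, locality-aware assignment of map tasks (one per data block).

    block_replicas: dict mapping block id -> set of nodes holding a copy.
    free_nodes: nodes with one free task slot each.
    Returns {block: (node, is_data_local)}; unassigned blocks wait for a later round.
    """
    available = list(free_nodes)
    assignments = {}
    for block, replicas in block_replicas.items():
        if not available:
            break  # no free slots left in this round
        local = [node for node in available if node in replicas]
        # Prefer a node that already stores the block (move code, not data).
        node = local[0] if local else available[0]
        available.remove(node)
        assignments[block] = (node, bool(local))
    return assignments
```

With blocks `b1` on nodes `n1`/`n2`, `b2` on `n3`, and `b3` on `n1`, and all three nodes free, this pass runs `b3` on `n2` with a remote read, even though sending `b1` to `n2` and `b3` to `n1` would have kept every task local. Production schedulers look past such greedy choices.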

Another provider of high performance cloud computing is Penguin Computing, which offers an HPC-as-a-Service called Penguin-on-Demand (POD). (HPC-as-a-Service is discussed later in the report.) POD, which started in August 2009, consists of highly optimized Linux clusters with specialized hardware and software for HPC. Unlike typical cloud services, POD is not virtualized: users can access a server’s full resources at once to process heavy loads. The POD cluster is configured with a head node that manages several computing nodes, and a high-performance network (InfiniBand) is used to increase processing speed. GPU supercomputing (GPGPU was discussed earlier) is also available in the POD cluster. Because the service is built with high performance computing in mind, POD’s optimizations help it outperform most other cloud providers: in a recent study, an application was run on both POD and Amazon EC2, and the POD system was measured to be 32 times faster [2].

Case Studies

How is the cloud being used for high performance computing today? In which fields is it being used, and has it proven to be a viable alternative to traditional computing clusters? To answer these questions, we will take a look at some case studies in which the cloud was used to fulfill an HPC need.

Radiation Therapy Calculations

Cancer is sometimes treated with radiation therapy. A crucial part of this therapy is calculating the correct radiation dose. The best way to calculate this dose is with a Monte Carlo simulation, because this approach yields the highest accuracy. Despite their accuracy, however, Monte Carlo calculations are rarely performed because they are too resource-intensive to be feasible: the processing takes too long and costs too much.

To help overcome this challenge, a research team from the University of New Mexico in the United States decided to run these Monte Carlo simulations on an on-demand, virtual cluster in the cloud. One very beneficial attribute of Monte Carlo simulations is that they do not require communication between processes; these simulations are therefore good candidates for parallelization, because no computational synchronization is needed.

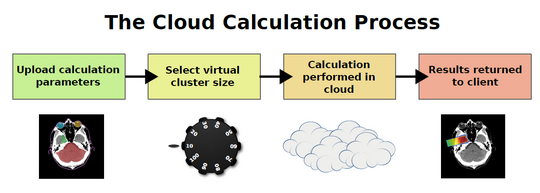

The research team developed the following process to test the viability of high performance cloud computing:

- Upload calculation parameters to the cloud

- Select the virtual cluster size

- Perform calculation in the cloud

- Combine results and return to client

These steps were then implemented with Amazon’s EC2, which was used to create an on-demand, virtual cloud computing cluster. The cluster was configured with a head node, which not only distributed parallelized sub-tasks to all of the computing nodes, but also aggregated the results and returned them to the user. At the start of the processing, each computing node received an input file and a unique random seed for the Monte Carlo simulation.
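The process above can be sketched in Python, with a toy Monte Carlo estimate of π standing in for the dose calculation (the actual physics code is not reproduced here). The function arguments play the role of the uploaded parameters, `node_count` is the chosen cluster size, each simulated node runs independently with its own seed, and the head node combines the partial tallies. The function names and the consecutive-seed scheme are assumptions for illustration; production codes usually rely on random number generators designed to produce independent parallel streams.

```python
import random


def node_simulation(seed, samples):
    """Work done by one compute node: an independent Monte Carlo run.

    Toy stand-in: count random points in the unit square that fall inside
    the quarter circle (used to estimate pi).
    """
    rng = random.Random(seed)  # private generator, seeded per node
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def head_node(total_samples, node_count, base_seed=2024):
    """Split the work, give each node a unique seed, and combine the tallies."""
    per_node = total_samples // node_count
    if per_node == 0:
        raise ValueError("need at least one sample per node")
    seeds = [base_seed + node for node in range(node_count)]
    # On a real cluster each call would run on a separate node; no
    # communication is needed until the partial tallies are combined.
    tallies = [node_simulation(seed, per_node) for seed in seeds]
    return 4.0 * sum(tallies) / (per_node * node_count)


if __name__ == "__main__":
    print(head_node(total_samples=1_000_000, node_count=8))
```

Because every node's seed is fixed, rerunning with the same parameters reproduces the same result, which also speaks to the repeatability concern raised earlier.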

Results of the computational experiment were very positive. Although the virtual cluster did not achieve an ideal n-fold speed-up (runtime reduced to 1/n, where n is the number of nodes in the computing cluster), this was partly expected because of the overhead caused by serial node initialization. This performance limit is described by Amdahl’s Law applied to parallel processing. Overall, the research team was pleased with the cloud solution because it yielded satisfactory performance at lower cost, and they concluded that the cloud was a promising approach for high performance scientific computing [3].
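Amdahl’s Law makes the limit explicit. If a fraction p of the runtime can be parallelized and the rest (for example, serial initialization) cannot, the speed-up on n nodes is S(n) = 1 / ((1 - p) + p/n), which never exceeds 1/(1 - p). The sketch below evaluates this formula; the 95% parallel fraction in the usage note is a hypothetical value, not a figure from the study.

```python
def amdahl_speedup(parallel_fraction, nodes):
    """Amdahl's law: S(n) = 1 / ((1 - p) + p / n).

    parallel_fraction (p) is the share of the runtime that can be
    parallelized; nodes (n) is the number of compute nodes.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / nodes)
```

With p = 0.95, 16 nodes give a speed-up of about 9.1 rather than 16, and no number of nodes can push it past 20.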

HPC-as-a-Service

In addition to the traditional cloud services (i.e. IaaS, PaaS, SaaS), vendors have also started to offer HPC-as-a-Service. As mentioned before, Penguin Computing now has a solution called Penguin-on-Demand (POD), and a similar service called Gompute has been designed for technical and scientific computing. These solutions offer expertise in setting up and running HPC over the Internet, and they feature optimizations that improve computing performance (when compared to other cloud computing providers, such as Amazon EC2). In fact, HPC-as-a-Service strives to achieve the same performance characteristics as traditional (in-house) computing clusters. Although HPC-as-a-Service is concentrated and non-virtualized, it shares the other attributes of cloud computing: scalable clusters, on-demand access, and high-performance computing resources [2].

X-Ray Spectroscopy

In the area of physics, cloud computing has been used to aid in X-ray spectroscopy. A widely-used code called FEFF calculates both X-ray spectra and the electronic structure of complex systems in real-space on large clusters of atoms. The challenging part of this code is that it utilizes complex and processing-intensive algorithms—in the past only users with access to traditional computing clusters have been able to execute this code. However, a team from the University of Washington in the United States discovered that high performance cloud computing is a feasible alternative, which makes the FEFF code accessible to anyone.

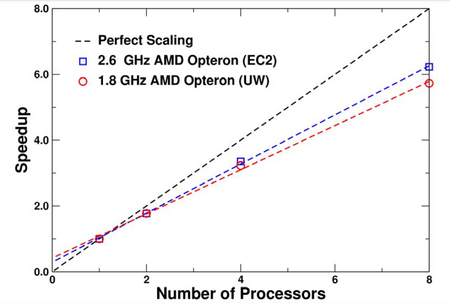

The research team conducted two separate tests. In the first, they ran a serial version of the FEFF code in the cloud (on Amazon EC2), so there was no parallelization and networking delays were not an issue. The computation on EC2 performed well, and results from the cloud were comparable to those of a local system. In the second test, the team ran a parallel version of the FEFF code in the cloud; because FEFF9 (version 9) consists of independent calculations, it is well suited to parallel computing [6]. Once again the team ported the code to Amazon EC2 and observed similar results: the performance of the virtual cloud-based cluster was similar to that of a "conventional physical cluster".

Thus, the cloud solution not only yielded good performance, but the research team was also able to obtain results without the cost and complexity of setting up an in-house computing cluster [9].

Conclusion

The use of the cloud for high performance computing has a promising future. Although computation in the cloud will not be able to fully replace dedicated systems for mission-critical applications (see the "HPCC Concerns" section), it will be able to make HPC accessible to anyone [2]. Due to its unique pay-as-you-go pricing model, cloud computing makes HPC economically feasible. The overall flexibility of cloud computing—not only in terms of cost, but also in terms of planning and hardware maintenance and configuration—is a major advantage of this technology. Cloud computing is an enabler and opens the doors to possibilities that would otherwise be unthinkable.

References

- [1] Vecchiola, Christian; Pandey, Suraj; Buyya, Rajkumar (2009). "High-Performance Cloud Computing: A View of Scientific Applications". arXiv:0910.1979v1 [cs.DC].

- [2] Xiaotao, Ye; Aili, Lv; Lin, Zhao (2010). "Research of High Performance Computing With Clouds". Proceedings of the Third International Symposium on Computer Science and Computational Technology (ISCSCT ’10), 289-293. Retrieved 3 January 2012.

- [3] Keyes, Roy W.; Romano, Christian; Arnold, Dorian; Luan, Shuang (2010). "Radiation therapy calculations using an on-demand virtual cluster via cloud computing". arXiv:1009.5282v1 [physics.med-ph].

- [4] Sobie, R.J.; Agarwal, A.; Anderson, M.; Armstrong, P.; Fransham, K.; Gable, I.; Harris, D.; Leavett-Brown, C.; Paterson, M.; Penfold-Brown, D.; Vliet, M.; Charbonneau, A.; Impey, R.; Podaima, W. (2011). "Data Intensive High Energy Physics Analysis in a Distributed Cloud". arXiv:1101.0357v1 [cs.DC].

- [5] Armbrust, Michael; Fox, Armando; Griffith, Rean; Joseph, Anthony D.; Katz, Randy; Konwinski, Andy; Lee, Gunho; Patterson, David; Rabkin, Ariel; Stoica, Ion; Zaharia, Matei (2010). "A View of Cloud Computing". Communications of the ACM, 53(4): 50-58. Retrieved 4 January 2012.

- [6] Jorissen, Kevin; Vila, Fernando D.; Rehr, John R. (2011). "A high performance scientific cloud computing environment for materials simulations". arXiv:1110.0543v1 [physics.comp-ph].

- [7] Franklin, Jeffrey (2010). "Benefits and Limits of Cloud Computing". AIA New Technologies, Alliances, Practices (NTAP) Conference, 2010. Retrieved 3 January 2012.

- [8] "Cloud Computing". Wikipedia.org. Retrieved 9 January 2012.

- [9] Rehr, J.J.; Gardner, J.P.; Prange, M.; Svec, L.; Vila, F. (2008). "Scientific Computing in the Cloud". arXiv:0901.0029v1 [cond-mat.mtrl-sci].

- [10] "Amazon Elastic Compute Cloud (Amazon EC2)". Amazon.com. Retrieved 10 January 2012.

External Links

Below are the web links used in this report (in alphabetical order):

- Amazon

- Amazon Web Services

- Amdahl’s Law

- Elastic Block Store

- Elastic Compute Cloud

- Elasticfox

- GMail

- Gompute

- Google AppEngine

- GoogleDocs

- Hadoop

- MapReduce

- Microsoft Azure

- Monte Carlo

- Penguin Computing

- Penguin-on-Demand

- Rackspace

- Salesforce.com

- Simple Storage Service

- X-ray spectroscopy

- Yahoo!Mail